Difference between revisions of "Ubuntu L4T User Guide"

Daniel.hung (talk | contribs)

Revision as of 09:06, 3 December 2019

Getting Started

Host Environment

Ubuntu 18.04 (recommended) or 16.04

Force Recovery Mode

To enter Force Recovery mode:

1. Hold the Recovery key.

2. Power on the device.

3. Wait 5 seconds, then release the Recovery key.

Once the device has entered recovery mode, its HDMI output should be disabled. Then connect the TX2 device to the PC with a USB cable. The PC will detect a new "nvidia apx" device.
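One way to confirm the detection from a terminal on the host is to look for the device on the USB bus. The sketch below assumes that lsusb (from the usbutils package) is installed, and it relies on NVIDIA's USB vendor ID, 0955. The function name has_nvidia_usb is made up for illustration.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeeds if the lsusb-style listing on stdin
# contains a device with NVIDIA's USB vendor ID (0955).
has_nvidia_usb() {
    grep -qi 'ID 0955:'
}

# Usage on the host PC (requires usbutils):
#   lsusb | has_nvidia_usb && echo "NVIDIA USB device found"
```

This check matches any NVIDIA USB device, not only a TX2 in recovery mode, so treat a match as a hint rather than proof.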

Flash Pre-built Image

First, make sure your TX2 device is already in Force Recovery mode and the USB cable is connected.

Then, execute the TX2_flash.sh script, which you can find in the release folder.

$ sudo ./TX2_flash.sh

After the script finishes, the target device will boot into the OS automatically.

Install SDK Components

Download the SDK Manager for the Jetson TX2 series from the JetPack website.

Note: You will need an NVIDIA developer account for access.

After the download completes, install the package with dpkg.

$ sudo dpkg -i sdkmanager_0.9.14-4964_amd64.deb

You can then run SDK Manager.

$ sdkmanager

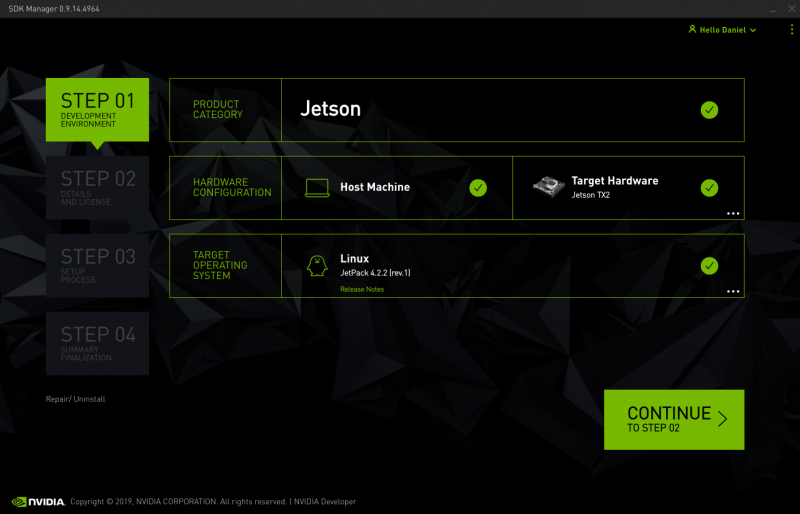

Log in with your NVIDIA developer account, and the STEP 01 page appears.

STEP 01: Set "Target Hardware" to "Jetson TX2 P3310", and select the target OS version you want to install. Here, we choose 4.2.2.

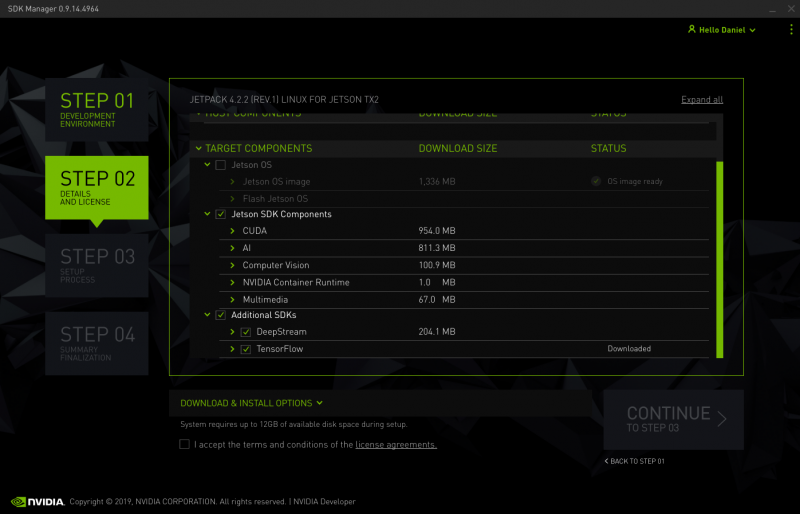

STEP 02: Check the components you want, and continue.

Note: Please DO NOT check the "Jetson OS" item. If it is checked, SDK Manager will generate the TX2 demo image and flash it onto your device.

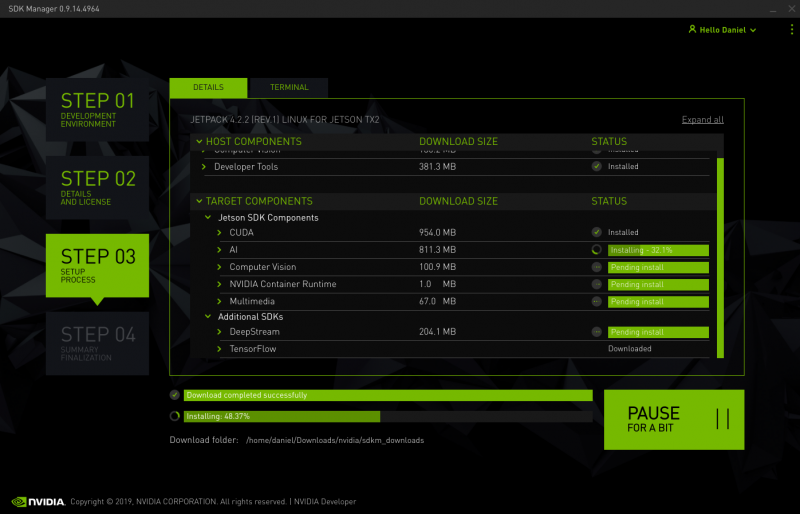

STEP 03: After the download process is done, enter the IP address of your TX2 device. SDK Manager will install the SDK over the network.

It will take several minutes to finish the installation.

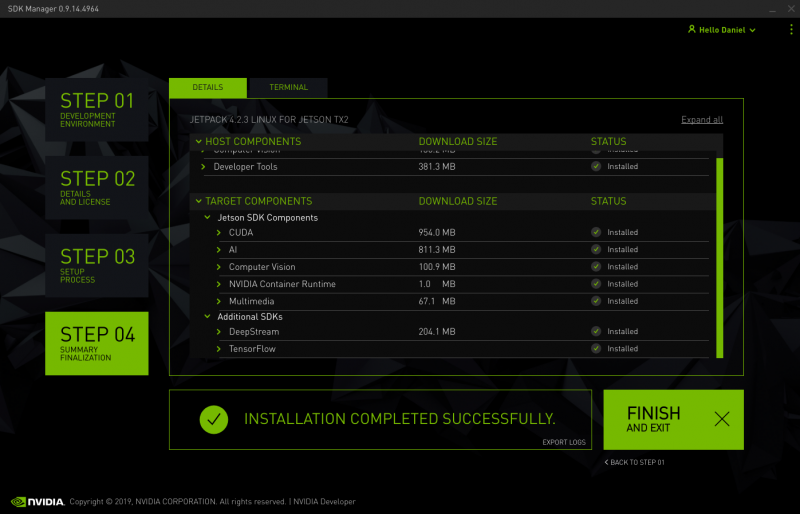
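Because STEP 03 installs over the network, it can save time to check the address before typing it into SDK Manager. The following is a minimal sketch under stated assumptions: the helper names is_ipv4 and tx2_reachable and the address 192.168.1.50 are hypothetical, and the ping options are those of the Linux iputils ping.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeeds if $1 looks like a dotted-quad IPv4 address.
is_ipv4() {
    local ip=$1 octet
    [[ $ip =~ ^[0-9]{1,3}(\.[0-9]{1,3}){3}$ ]] || return 1
    local IFS=.
    for octet in $ip; do
        # 10# forces base 10 so that octets such as "08" are not read as octal.
        (( 10#$octet <= 255 )) || return 1
    done
}

# Hypothetical helper: validate the address, then send one ping (2 s timeout).
tx2_reachable() {
    is_ipv4 "$1" && ping -c 1 -W 2 "$1" > /dev/null
}

# Usage (192.168.1.50 is a placeholder address):
#   tx2_reachable 192.168.1.50 && echo "TX2 is reachable"
```

A successful ping only shows that the device answers on the network; it does not confirm that SDK Manager can log in to it.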

STEP 04: When you reach this step, the installation is complete.

Demo

In this section, we set up and run demo applications on the TX2 target device.

First, export the DeepStream SDK root.

$ export DS_SDK_ROOT="/opt/nvidia/deepstream/deepstream-4.0"
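The export applies only to the current shell session, and every later command in this section depends on it. A small sanity check, sketched below, can catch a missing or mistyped path early. The function name check_ds_root is hypothetical, and the test for a "sources" subdirectory is an assumption based on the paths used in the demos that follow.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeeds if $1 (default: $DS_SDK_ROOT) is set and
# contains a "sources" subdirectory, which the demo setup steps cd into.
check_ds_root() {
    local root=${1:-${DS_SDK_ROOT:-}}
    [ -n "$root" ] && [ -d "$root/sources" ]
}

# Usage:
#   check_ds_root || echo "DS_SDK_ROOT is unset or DeepStream is not installed there" >&2
```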

Deepstream Samples

The DeepStream SDK includes three object detector demos: FasterRCNN, SSD, and Yolo.

To use a different video file, edit the uri entry in the corresponding config file. For example, open the config file:

$ vim deepstream_app_config_yoloV3.txt

and set the uri line:

uri=file:///home/advrisc/Videos/2014.mp4
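The same change can be made without an editor. The sketch below is one possible approach, assuming GNU sed as shipped with Ubuntu; the function name set_uri is hypothetical. It rewrites every line in the file that starts with "uri=", so a config with several sources would have all of them changed.

```shell
#!/usr/bin/env bash
# Hypothetical helper: set_uri CONFIG URI
# Rewrites every line beginning with "uri=" in CONFIG to "uri=URI".
# "|" is the sed delimiter, so URI must not contain "|", "&" or "\".
set_uri() {
    local config=$1 uri=$2
    sed -i "s|^uri=.*|uri=${uri}|" "$config"
}

# Usage:
#   set_uri deepstream_app_config_yoloV3.txt file:///home/advrisc/Videos/2014.mp4
```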

FasterRCNN

Setup:

$ cd $DS_SDK_ROOT/sources/objectDetector_FasterRCNN

$ wget --no-check-certificate https://dl.dropboxusercontent.com/s/o6ii098bu51d139/faster_rcnn_models.tgz?dl=0 -O faster-rcnn.tgz

$ tar zxvf faster-rcnn.tgz -C . --strip-components=1 --exclude=ZF_*

$ cp /usr/src/tensorrt/data/faster-rcnn/faster_rcnn_test_iplugin.prototxt .

$ make -C nvdsinfer_custom_impl_fasterRCNN

Run:

$ deepstream-app -c deepstream_app_config_fasterRCNN.txt

SSD

Setup:

$ cd $DS_SDK_ROOT/sources/objectDetector_SSD

$ cp /usr/src/tensorrt/data/ssd/ssd_coco_labels.txt .

$ pip install tensorflow-gpu

$ sudo apt-get install python-protobuf

$ wget http://download.tensorflow.org/models/object_detection/ssd_inception_v2_coco_2017_11_17.tar.gz

$ tar zxvf ssd_inception_v2_coco_2017_11_17.tar.gz

$ cd ssd_inception_v2_coco_2017_11_17

$ python3 /usr/lib/python3.6/dist-packages/uff/bin/convert_to_uff.py frozen_inference_graph.pb -O NMS -p /usr/src/tensorrt/samples/sampleUffSSD/config.py -o sample_ssd_relu6.uff

$ cp sample_ssd_relu6.uff ../

$ cd ..

$ make -C nvdsinfer_custom_impl_ssd

Run:

$ deepstream-app -c deepstream_app_config_ssd.txt

Yolo

Setup:

$ cd $DS_SDK_ROOT/sources/objectDetector_Yolo

$ ./prebuild.sh

$ export CUDA_VER=10.0

$ make -C nvdsinfer_custom_impl_Yolo

Run:

$ deepstream-app -c deepstream_app_config_yoloV3.txt

-OR-

$ deepstream-app -c deepstream_app_config_yoloV3_tiny.txt
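The Yolo setup sets CUDA_VER to 10.0 by hand. If you are unsure which CUDA release is installed on the device, one option is to read it from the nvcc version banner. This is only a sketch: the function name parse_cuda_release is hypothetical, and it assumes that nvcc is on the PATH and prints a line of the form "Cuda compilation tools, release 10.0, V10.0.326".

```shell
#!/usr/bin/env bash
# Hypothetical helper: reads `nvcc --version` output on stdin and prints the
# release number (for example 10.0) taken from the "release X.Y" field.
parse_cuda_release() {
    sed -n 's/.*release \([0-9][0-9]*\.[0-9][0-9]*\).*/\1/p'
}

# Usage (assumes nvcc is on PATH):
#   export CUDA_VER=$(nvcc --version | parse_cuda_release)
```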

Deepstream Reference Apps

This repository provides reference applications for video analytics tasks using TensorRT and DeepStream SDK 4.0.

$ cd $DS_SDK_ROOT/sources/apps/sample_apps/

$ git clone https://github.com/NVIDIA-AI-IOT/deepstream_reference_apps.git

$ cd deepstream_reference_apps

back-to-back-detectors & anomaly

These two applications support only elementary H.264 streams, not MP4 video files.
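If your test clip is an MP4 file whose video track is already H.264, one way to obtain an elementary stream is to copy the track out with ffmpeg. This is a sketch rather than part of the reference apps: ffmpeg is assumed to be installed, and the helper names h264_name and mp4_to_h264 are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the output name for an input video,
# replacing the final extension with .h264 (clip.mp4 -> clip.h264).
h264_name() {
    printf '%s.h264\n' "${1%.*}"
}

# Hypothetical helper: copy the H.264 video track of an MP4 file into an
# Annex B elementary stream without re-encoding, dropping any audio.
mp4_to_h264() {
    ffmpeg -i "$1" -c:v copy -bsf:v h264_mp4toannexb -an "$(h264_name "$1")"
}

# Usage:
#   mp4_to_h264 clip.mp4    # writes clip.h264 next to the input
```

Because the video is copied rather than re-encoded, this does not help if the source track uses a different codec such as H.265.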

runtime_source_add_delete

Setup:

$ cd runtime_source_add_delete

$ make

Run:

$ ./deepstream-test-rt-src-add-del <uri>

For example:

$ ./deepstream-test-rt-src-add-del file://$DS_SDK_ROOT/samples/streams/sample_1080p_h265.mp4

$ ./deepstream-test-rt-src-add-del rtsp://127.0.0.1/video
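The application takes a URI, not a plain path, so a local file needs a file:// prefix in front of its absolute path. A small helper along these lines can build it. The name to_file_uri is hypothetical, realpath from GNU coreutils is assumed, and the sketch does not percent-encode spaces or other special characters.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a file:// URI for a local path.
# realpath -m resolves the path to absolute form without requiring it to exist.
to_file_uri() {
    printf 'file://%s\n' "$(realpath -m -- "$1")"
}

# Usage:
#   ./deepstream-test-rt-src-add-del "$(to_file_uri ~/Videos/clip.mp4)"
```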