Difference between revisions of "NXP eIQ"

Darren.huang

Revision as of 10:45, 27 September 2023

NXP i.MX series

The i.MX 8M Plus family focuses on the neural processing unit (NPU) and vision system, advanced multimedia, and industrial automation with high reliability.

- The Neural Processing Unit (NPU) of the i.MX 8M Plus operates at up to 2.3 TOPS

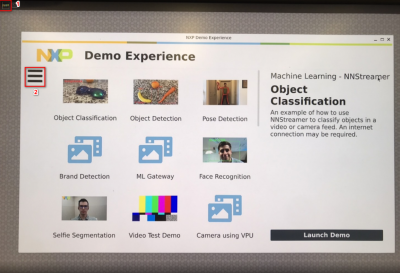

NXP Demo Experience

- Preinstalled on NXP-provided demo Linux images

- The imx-image-full image must be used

- Supported from Yocto 3.3 (5.10.52_2.1.0) to Yocto 4.2 (6.1.1_1.0.0)

- An internet connection is required

Start the demo launcher by clicking the NXP logo displayed in the top left-hand corner of the screen.

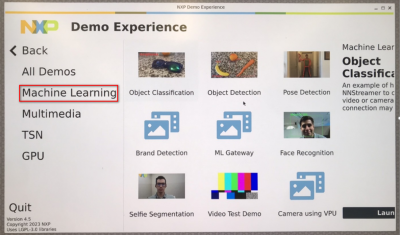

Machine Learning Demos:

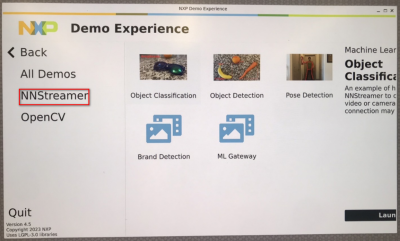

- NNStreamer demos

- Object classification

- Object detection

- Pose detection

- Brand detection

- ML gateway

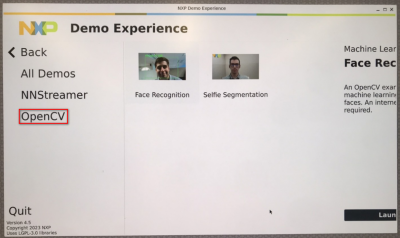

- OpenCV demos

- Face recognition

- Selfie segmentation

NNStreamer Demo: Object Detection

Select "Object Detection" and click Launch Demo.

PyeIQ - A Python Framework for eIQ on i.MX Processors

PyeIQ is written on top of the eIQ™ ML Software Development Environment and provides a set of Python classes that let the user run machine learning applications in a simple and efficient way, without spending time on cross-compilation, deployment, or reading extensive guides.

Installation

- Method 1: Use the pip3 tool to install the package from the PyPI repository:

$ pip3 install eiq

Collecting eiq

Downloading [https://files.pythonhosted.org/packages/10/54/7a78fca1ce02586a91c88ced1c70acb16ca095779e5c6c8bdd379cd43077/eiq-2.1.0.tar.gz]

Installing collected packages: eiq

Running setup.py install for eiq ... done

Successfully installed eiq-2.1.0

- Method 2: Download the latest tarball, copy it to the board, and install it with pip3:

$ pip3 install <tarball>
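After either installation method, it can be useful to confirm which version of the package ended up on the board. The following is a minimal sketch that uses only the Python standard library; the helper name `installed_version` is hypothetical and is not part of PyeIQ. It assumes the distribution is registered under the name `eiq`, as in the pip3 output above.

```python
from importlib import metadata


def installed_version(package: str = "eiq"):
    """Return the installed version string of `package`, or None if it is absent.

    `installed_version` is an illustrative helper, not a PyeIQ API.
    """
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


if __name__ == "__main__":
    version = installed_version()
    if version is None:
        print("eiq is not installed; run: pip3 install eiq")
    else:
        print(f"eiq version: {version}")
```

Run on the board after installation, this prints the version pip3 reported, or a reminder to install the package if it is missing.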

Other eiq versions:

How to Run Samples

- Start the manager tool:

$ pyeiq

- The above command returns the PyeIQ manager tool options:

| Manager Tool Command | Description | Example |
| --- | --- | --- |
| pyeiq --list-apps | List the available applications. | |
| pyeiq --list-demos | List the available demos. | |
| pyeiq --run <app_name/demo_name> | Run the application or demo. | # pyeiq --run object_detection_tflite |
| pyeiq --info <app_name/demo_name> | Application or demo short description and usage. | # pyeiq --info object_detection_dnn |
| pyeiq --clear-cache | Clear cached media generated by demos. | |

- Common Demos Parameters

| Argument | Description | Example of usage |
| --- | --- | --- |
| --download, -d | Choose the server from which the models are downloaded. Options: drive, github, wget. If none is specified, the application automatically searches for the best server. | /opt/eiq/demos# eiq_demo.py --download drive<br>/opt/eiq/demos# eiq_demo.py -d github |
| --help, -h | Show all available arguments for a given demo and a brief explanation of their usage. | /opt/eiq/demos# eiq_demo.py --help<br>/opt/eiq/demos# eiq_demo.py -h |
| --image, -i | Use an image of your choice within the demo. | /opt/eiq/demos# eiq_demo.py --image /home/root/image.jpg<br>/opt/eiq/demos# eiq_demo.py -i /home/root/image.jpg |
| --labels, -l | Use a labels file of your choice within the demo. Labels and models must be compatible for proper outputs. | /opt/eiq/demos# eiq_demo.py --labels /home/root/labels.txt<br>/opt/eiq/demos# eiq_demo.py -l /home/root/labels.txt |
| --model, -m | Use a model file of your choice within the demo. Labels and models must be compatible for proper outputs. | /opt/eiq/demos# eiq_demo.py --model /home/root/model.tflite<br>/opt/eiq/demos# eiq_demo.py -m /home/root/model.tflite |
| --res, -r | Choose the resolution of your video capture device. Options: full_hd (1920x1080), hd (1280x720), vga (640x480). The default is hd. If your video device does not support the chosen resolution, the best available one is selected automatically. | /opt/eiq/demos# eiq_demo.py --res full_hd<br>/opt/eiq/demos# eiq_demo.py -r vga |
| --video_fwk, -f | Choose which video framework is used to display the video. Options: opencv, v4l2, gstreamer (needs improvement). The default is v4l2. | /opt/eiq/demos# eiq_demo.py --video_fwk opencv<br>/opt/eiq/demos# eiq_demo.py -f v4l2 |
| --video_src, -v | Run inference on a video instead of an image. Pass "True" to use the default source, or specify a video capture device or a video file. Options: True, /dev/video<index>, path_to_your_video_file. | /opt/eiq/demos# eiq_demo.py --video_src /dev/video3<br>/opt/eiq/demos# eiq_demo.py -v True<br>/opt/eiq/demos# eiq_demo.py -v /home/root/video.mp4 |
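To make the argument table concrete, the sketch below declares the same flags, choices, and defaults with Python's standard `argparse` module. It is an illustration only: `build_parser` and `RESOLUTIONS` are hypothetical names, and this is not PyeIQ's actual parser. In particular, it does not implement the automatic fallback to the best supported resolution or server.

```python
import argparse

# Resolution names and frame sizes as listed in the table above.
RESOLUTIONS = {"full_hd": (1920, 1080), "hd": (1280, 720), "vga": (640, 480)}


def build_parser() -> argparse.ArgumentParser:
    """Hypothetical parser mirroring the common demo parameters."""
    # argparse adds --help/-h automatically.
    parser = argparse.ArgumentParser(prog="eiq_demo.py")
    parser.add_argument("-d", "--download", choices=["drive", "github", "wget"],
                        default=None, help="server to download models from")
    parser.add_argument("-i", "--image", default=None, help="path to an input image")
    parser.add_argument("-l", "--labels", default=None, help="path to a labels file")
    parser.add_argument("-m", "--model", default=None, help="path to a model file")
    parser.add_argument("-r", "--res", choices=sorted(RESOLUTIONS), default="hd",
                        help="capture resolution (default: hd)")
    parser.add_argument("-f", "--video_fwk", choices=["opencv", "v4l2", "gstreamer"],
                        default="v4l2", help="video framework (default: v4l2)")
    parser.add_argument("-v", "--video_src", default=None,
                        help="True, a /dev/video<index> device, or a video file")
    return parser


if __name__ == "__main__":
    args = build_parser().parse_args(["--res", "full_hd", "-f", "opencv"])
    width, height = RESOLUTIONS[args.res]
    print(f"framework={args.video_fwk} size={width}x{height}")
```

Parsing an empty argument list with this sketch yields the documented defaults (`hd` and `v4l2`), which is a quick way to check that a wrapper script agrees with the table.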

Run Applications and Demos

- Applications

| Application Name | Framework | i.MX Board | BSP Release | Inference Core | Status |
| --- | --- | --- | --- | --- | --- |
| Switch Classification Image | TFLite:2.1.0 | RSB-3720 | 5.4.24_2.1.0 | CPU, GPU, NPU | PASS |
| Switch Detection Video | TFLite:2.1.0 | RSB-3720 | 5.4.24_2.1.0 | CPU, GPU, NPU | PASS |

- Demos

| Demo Name | Framework | i.MX Board | BSP Release | Inference Core | Status |
| --- | --- | --- | --- | --- | --- |
| Object Classification | TFLite:2.1.0 | RSB-3720 | 5.4.24_2.1.0 | GPU, NPU | PASS |
| Object Detection SSD | TFLite:2.1.0 | RSB-3720 | 5.4.24_2.1.0 | GPU, NPU | PASS |
| Object Detection YOLOv3 | TFLite:2.1.0 | RSB-3720 | 5.4.24_2.1.0 | GPU, NPU | PASS |
| Object Detection DNN | OpenCV:4.2.0 | RSB-3720 | 5.4.24_2.1.0 | CPU | PASS |
| Facial Expression Detection | TFLite:2.1.0 | RSB-3720 | 5.4.24_2.1.0 | GPU, NPU | PASS |
| Fire Classification | TFLite:2.1.0 | RSB-3720 | 5.4.24_2.1.0 | GPU, NPU | PASS |
| Fire Classification | ArmNN:19.08 | RSB-3720 | 5.4.24_2.1.0 | GPU, NPU | PASS |
| Pose Detection | TFLite:2.1.0 | RSB-3720 | 5.4.24_2.1.0 | GPU, NPU | PASS |
| Face/Eyes Detection | OpenCV:4.2.0 | RSB-3720 | 5.4.24_2.1.0 | GPU, NPU | PASS |

Applications Example - Switch Detection Video

This application offers a graphical interface for users to run an object detection demo using either CPU or GPU/NPU to perform inference on a video file.

- Run the Switch Detection Video demo using the following line:

$ pyeiq --run switch_video

- Type CPU or GPU/NPU in the terminal to switch between inference cores.

- This runs inference on a default video.

Demos Example - Running Object Detection SSD

Object detection is a computer technology related to computer vision and image processing that deals with detecting instances of semantic objects of a certain class (such as humans, buildings, or cars) in digital images and videos. Well-researched domains of object detection include face detection and pedestrian detection. Object detection has applications in many areas of computer vision, including image retrieval and video surveillance.

- Run the Object Detection Default Image demo using the following line:

$ pyeiq --run object_detection_tflite

- This runs inference on a default image.

- Run the Object Detection Custom Image demo using the following line:

$ pyeiq --run object_detection_tflite --image=/path_to_the_image

- Run the Object Detection demo on a video file using the following line:

$ pyeiq --run object_detection_tflite --video_src=/path_to_the_video

- Run the Object Detection demo on a video camera or webcam using the following line:

$ pyeiq --run object_detection_tflite --video_src=/dev/video<index>
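The four invocations above differ only in the optional `--image` or `--video_src` argument, so a script that launches demos can assemble them from one helper. The sketch below is an assumption-laden illustration: `pyeiq_run_command` and `run_demo` are hypothetical names, not part of PyeIQ. Rejecting `--image` together with `--video_src` is a choice made here, based on the table's note that `--video_src` runs inference on a video instead of an image.

```python
import shutil
import subprocess


def pyeiq_run_command(name, image=None, video_src=None):
    """Build the argument list for `pyeiq --run <name>` (illustrative helper)."""
    if image and video_src:
        # Assumption: a demo takes either an image or a video source, not both.
        raise ValueError("pass either image or video_src, not both")
    command = ["pyeiq", "--run", name]
    if image:
        command.append(f"--image={image}")
    if video_src:
        command.append(f"--video_src={video_src}")
    return command


def run_demo(command):
    """Run the command only if the pyeiq tool is on PATH; return True if it ran."""
    if shutil.which(command[0]) is None:
        return False
    subprocess.run(command, check=True)
    return True


if __name__ == "__main__":
    # Print the command lines without executing them.
    print(" ".join(pyeiq_run_command("object_detection_tflite")))
    print(" ".join(pyeiq_run_command("object_detection_tflite",
                                     video_src="/dev/video3")))
```

Building the command as a list and passing it to `subprocess.run` avoids shell quoting problems when image or video paths contain spaces.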