RK Platform NPU SDK

Preface

NPU Introduction

RK3568

- Neural network acceleration engine with processing performance up to 0.8 TOPS

- Supports integer 4, integer 8, integer 16, float 16, Bfloat 16 and tf32 operations

- Supports deep learning frameworks: TensorFlow, Caffe, TFLite, PyTorch, ONNX, Android NN, etc.

- One isolated voltage domain to support DVFS

RK3588

- Neural network acceleration engine with processing performance up to 6 TOPS

- Includes three NPU cores, which can work together (all three cores), in pairs (two cores), or independently

- Supports integer 4, integer 8, integer 16, float 16, Bfloat 16 and tf32 operations

- Embedded 3 × 384 KB internal buffers

- Runs multiple tasks and multiple scenarios in parallel

- Supports deep learning frameworks: TensorFlow, Caffe, TFLite, PyTorch, ONNX, Android NN, etc.

- One isolated voltage domain to support DVFS

RKNN SDK

RKNN SDK (Baidu password: a887) includes two parts:

- rknn-toolkit2

- rknpu2

├── rknn-toolkit2
│   ├── doc
│   ├── examples
│   ├── packages
│   └── rknn_toolkit_lite2
└── rknpu2
    ├── doc
    ├── examples
    └── runtime

rknpu2

'rknpu2' includes documents (rknpu2/doc) and examples (rknpu2/examples) that help you quickly develop AI applications with RKNN models (*.rknn).

Models from other frameworks (e.g. Caffe, TensorFlow) can be converted to RKNN models with 'rknn-toolkit2'.

The RKNN API library file librknnrt.so and the header file rknn_api.h can be found in rknpu2/runtime.

The released BSP and images already include the NPU driver and runtime libraries.
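On the board, one way to confirm that the dynamic loader can find and load the runtime library is a short Python check. The sketch below assumes librknnrt.so is installed in a directory registered with `ldconfig`; the function name `find_rknn_runtime` is hypothetical and not part of the SDK.

```python
import ctypes
import ctypes.util
from typing import Optional


def find_rknn_runtime(name: str = "rknnrt") -> Optional[str]:
    """Return the library name/path for lib<name>.so if it can be loaded, else None.

    ctypes.util.find_library() consults the dynamic loader's cache (ldconfig),
    so a copy sitting in an unregistered directory will not be found.
    """
    path = ctypes.util.find_library(name)
    if path is None:
        return None
    try:
        ctypes.CDLL(path)  # actually load it, which also catches missing dependencies
    except OSError:
        return None
    return path


if __name__ == "__main__":
    lib = find_rknn_runtime()
    print(f"librknnrt found: {lib}" if lib else "librknnrt.so not found")
```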

Two examples are built into the released images:

1. rknn_ssd_demo

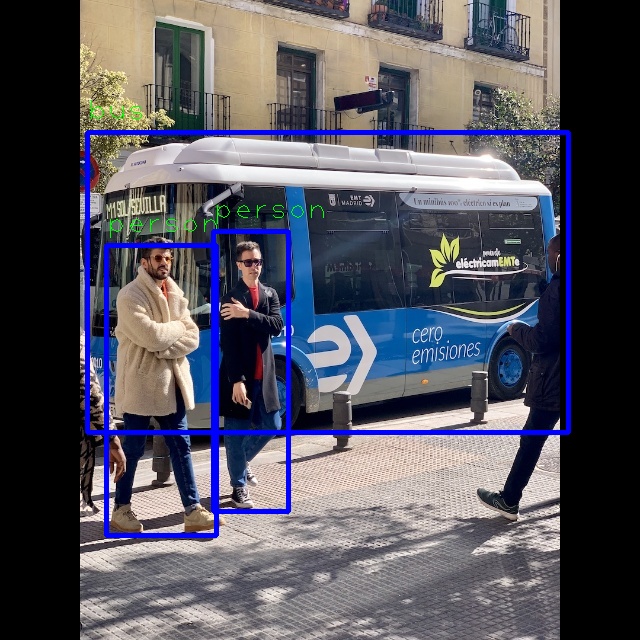

cd /tools/test/adv/npu2/rknn_ssd_demo
./rknn_ssd_demo model/ssd_inception_v2.rknn model/bus.jpg
resize 640 640 to 300 300
Loading model ...
rknn_init ...
model input num: 1, output num: 2
input tensors:
index=0, name=Preprocessor/sub:0, n_dims=4, dims=[1, 300, 300, 3], n_elems=270000, size=270000, fmt=NHWC, type=INT8, qnt_type=AFFINE, zp=0, scale=0.007843
output tensors:
index=0, name=concat:0, n_dims=4, dims=[1, 1917, 1, 4], n_elems=7668, size=7668, fmt=NCHW, type=INT8, qnt_type=AFFINE, zp=50, scale=0.090787
index=1, name=concat_1:0, n_dims=4, dims=[1, 1917, 91, 1], n_elems=174447, size=174447, fmt=NCHW, type=INT8, qnt_type=AFFINE, zp=58, scale=0.140090
rknn_run
loadLabelName
ssd - loadLabelName ./model/coco_labels_list.txt
loadBoxPriors
person @ (106 245 216 535) 0.994422
bus @ (87 132 568 432) 0.991533
person @ (213 231 288 511) 0.843047

2. rknn_mobilenet_demo

cd /tools/test/adv/npu2/rknn_mobilenet_demo
./rknn_mobilenet_demo model/mobilenet_v1.rknn model/cat_224x224.jpg
model input num: 1, output num: 1
input tensors:
index=0, name=input, n_dims=4, dims=[1, 224, 224, 3], n_elems=150528, size=150528, fmt=NHWC, type=INT8, qnt_type=AFFINE, zp=0, scale=0.007812
output tensors:
index=0, name=MobilenetV1/Predictions/Reshape_1, n_dims=2, dims=[1, 1001, 0, 0], n_elems=1001, size=1001, fmt=UNDEFINED, type=INT8, qnt_type=AFFINE, zp=-128, scale=0.003906
rknn_run
--- Top5 ---
283: 0.468750
282: 0.242188
286: 0.105469
464: 0.089844
264: 0.019531
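The tensor attributes printed by the two demos follow simple arithmetic. `n_elems` is the product of the first `n_dims` entries of `dims`, which is why `dims=[1, 1001, 0, 0]` with `n_dims=2` gives 1001. `size` equals `n_elems` for INT8 tensors. An AFFINE-quantized value `q` converts back to a real number as `(q - zp) * scale`. The Python sketch below applies this to the mobilenet demo. It rests on two assumptions not stated in this document: the model was converted with a mean and standard deviation of 128 per channel, and the printed scales are 1/128 and 1/256 rounded to six digits. The helper names are illustrative and are not part of the RKNN API. The output vector is synthetic, built only to reproduce the printed Top5.

```python
import heapq
import operator
from functools import reduce

# Quantization parameters printed by rknn_mobilenet_demo. The exact values 1/128
# and 1/256 behind the printed 0.007812 and 0.003906 are an assumption.
IN_ZP, IN_SCALE = 0, 1.0 / 128
OUT_ZP, OUT_SCALE = -128, 1.0 / 256
# Assumed per-channel mean/std used when the model was converted.
MEAN, STD = 128.0, 128.0


def n_elems(dims, n_dims):
    """Product of the first n_dims entries of dims; unused trailing entries are ignored."""
    return reduce(operator.mul, dims[:n_dims], 1)


def dequantize(q, zp, scale):
    """AFFINE (asymmetric) dequantization: real = (q - zp) * scale."""
    return (q - zp) * scale


def pixel_to_int8(p):
    """uint8 pixel -> normalized float -> affine-quantized int8, clamped to [-128, 127]."""
    x = (p - MEAN) / STD
    return max(-128, min(127, round(x / IN_SCALE) + IN_ZP))


def top_k(int8_scores, k=5):
    """[(class_index, probability)] for the k largest scores.

    Dequantization with a positive scale preserves order, so ranking is done on
    the raw INT8 values and only the k winners are converted to floats.
    """
    best = heapq.nlargest(k, range(len(int8_scores)), key=int8_scores.__getitem__)
    return [(i, dequantize(int8_scores[i], OUT_ZP, OUT_SCALE)) for i in best]


if __name__ == "__main__":
    print(n_elems([1, 300, 300, 3], 4), n_elems([1, 1001, 0, 0], 2))  # 270000 1001
    print([pixel_to_int8(p) for p in (0, 128, 255)])                   # [-128, 0, 127]
    # Synthetic output: every class at 0.0 except five entries chosen so that
    # their dequantized values equal the Top5 printed by the demo.
    scores = [OUT_ZP] * 1001
    for idx, q in {283: -8, 282: -66, 286: -101, 464: -105, 264: -123}.items():
        scores[idx] = q
    for idx, prob in top_k(scores):
        print(f"{idx}: {prob:.6f}")
```

Under these assumptions, every uint8 pixel `p` becomes `p - 128`. The five printed probabilities correspond to the INT8 outputs -8, -66, -101, -105 and -123.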

rknn-toolkit2

Tool Introduction

RKNN-Toolkit2 is a development kit that provides model conversion, inference and performance evaluation on PC platforms. Its Python interface lets users perform the following tasks:

- Model conversion: converts Caffe / TensorFlow / TensorFlow Lite / ONNX / Darknet / PyTorch models to RKNN models, and supports RKNN model import/export so the models can later be deployed on Rockchip NPU platforms.

- Quantization: converts float models to quantized models. The currently supported quantization method is asymmetric quantization (asymmetric_quantized-8). Hybrid quantization is also supported.

- Model inference: simulates the NPU to run an RKNN model on a PC and obtain the inference results. The tool can also deploy the RKNN model to a specified NPU device, run it there, and retrieve the inference results.

- Performance & memory evaluation: deploys the RKNN model to a specified NPU device, runs it, and evaluates the model's performance and memory consumption on the actual device.

- Quantization error analysis: reports the Euclidean or cosine distance between each layer's inference results before and after quantization. This helps locate where quantization error arises and suggests ways to improve the accuracy of quantized models.

- Model encryption: encrypts the entire RKNN model with a specified encryption method.
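To make the quantization and error-analysis items concrete, the sketch below performs asymmetric 8-bit quantization of a made-up activation vector, restores it, and measures the Euclidean distance and cosine similarity between the float and restored values. This is plain Python for illustration, not RKNN-Toolkit2's implementation. The min/max calibration, rounding and clamping choices are assumptions. The toolkit calibrates on a dataset and may use other algorithms.

```python
import math

QMIN, QMAX = -128, 127  # signed 8-bit range


def asym_params(values):
    """Scale and zero point mapping [min, max] (widened to include 0) onto [QMIN, QMAX]."""
    lo = min(min(values), 0.0)
    hi = max(max(values), 0.0)
    scale = (hi - lo) / (QMAX - QMIN) or 1.0  # avoid a zero scale for all-zero input
    zp = int(round(QMIN - lo / scale))
    return scale, zp


def quantize(values, scale, zp):
    """real -> int8: round(v / scale) + zp, clamped to the int8 range."""
    return [max(QMIN, min(QMAX, int(round(v / scale)) + zp)) for v in values]


def dequantize(qs, scale, zp):
    """int8 -> real: (q - zp) * scale."""
    return [(q - zp) * scale for q in qs]


def euclidean_distance(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def cosine_similarity(a, b):
    """1.0 means identical direction; the cosine distance is 1 minus this value."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    if norm == 0.0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / norm


if __name__ == "__main__":
    layer_out = [-0.75, -0.1, 0.0, 0.33, 1.2, 2.5]  # made-up float activations
    scale, zp = asym_params(layer_out)
    restored = dequantize(quantize(layer_out, scale, zp), scale, zp)
    print(f"scale={scale:.6f} zp={zp}")
    print(f"euclidean={euclidean_distance(layer_out, restored):.6f}")
    print(f"cosine={cosine_similarity(layer_out, restored):.6f}")
```

A layer whose cosine similarity drops noticeably below 1.0, or whose Euclidean distance jumps compared with the layers before it, is where quantization error enters.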

System Dependencies

OS version: Ubuntu 18.04 (x64) / Ubuntu 20.04 (x64)

Python version: 3.6 / 3.8

Python library dependencies:

Python 3.6

cat rknn-toolkit2/doc/requirements_cp36*.txt
# if install failed, please change the pip source to 'https://mirror.baidu.com/pypi/simple'
# base deps
numpy==1.19.5
protobuf==3.12.2
flatbuffers==1.12
# utils
requests==2.27.1
psutil==5.9.0
ruamel.yaml==0.17.4
scipy==1.5.4
tqdm==4.64.0
bfloat16==1.1
opencv-python==4.5.5.64
# base
onnx==1.9.0
onnxoptimizer==0.2.7
onnxruntime==1.10.0
torch==1.10.1
torchvision==0.11.2
tensorflow==2.6.2

Python 3.8

cat rknn-toolkit2/doc/requirements_cp38*.txt
# if install failed, please change the pip source to 'https://mirror.baidu.com/pypi/simple'
# base deps
numpy==1.19.5
protobuf==3.12.2
flatbuffers==1.12
# utils
requests==2.27.1
psutil==5.9.0
ruamel.yaml==0.17.4
scipy==1.5.4
tqdm==4.64.0
bfloat16==1.1
opencv-python==4.5.5.64
# base
onnx==1.9.0
onnxoptimizer==0.2.7
onnxruntime==1.10.0
torch==1.10.1
torchvision==0.11.2
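Both the requirements files and the wheels encode the Python version as a `cpXY` tag (cp36, cp38). The sketch below picks the tag for the running interpreter and lists the matching files. It assumes the directory layout shown in the RKNN SDK section and a working directory that contains `rknn-toolkit2/`. `cp_tag` and `matching_files` are hypothetical helpers.

```python
import glob
import sys

# Python versions listed above, mapped to the "cpXY" tag used in file names.
SUPPORTED = {(3, 6): "cp36", (3, 8): "cp38"}


def cp_tag(version_info=sys.version_info):
    """Return the cpXY tag for a (major, minor, ...) version, or raise if unsupported."""
    key = (version_info[0], version_info[1])
    if key not in SUPPORTED:
        raise RuntimeError("Python %d.%d is not supported; use 3.6 or 3.8" % key)
    return SUPPORTED[key]


def matching_files(tag, root="rknn-toolkit2"):
    """Requirements files and wheels whose names contain the given tag."""
    reqs = sorted(glob.glob("%s/doc/requirements_%s*.txt" % (root, tag)))
    wheels = sorted(glob.glob("%s/packages/rknn_toolkit2*%s*.whl" % (root, tag)))
    return reqs, wheels


if __name__ == "__main__":
    try:
        tag = cp_tag()
        print(tag, matching_files(tag))
    except RuntimeError as err:
        print(err)
```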

Installation

Create a virtualenv environment. If several Python versions are installed on the system, it is recommended to manage the Python environment with virtualenv. The steps below use Python 3.6 as an example:

- Install virtualenv, Python 3.6 and pip3

sudo apt-get install virtualenv
sudo apt-get install python3 python3-dev python3-pip

- Install dependencies

sudo apt-get install libxslt1-dev zlib1g zlib1g-dev libglib2.0-0 libsm6 libgl1-mesa-glx libprotobuf-dev gcc

- Install requirements_cp36-*.txt

virtualenv -p /usr/bin/python3 venv
source venv/bin/activate
# first pass: comment out bfloat16 and install all other packages (bfloat16 builds against numpy)
sed -i 's|bfloat16==|#bfloat16==|g' rknn-toolkit2/doc/requirements_cp36-*.txt
pip3 install -r rknn-toolkit2/doc/requirements_cp36-*.txt
# second pass: restore the bfloat16 line and install it
sed -i 's|#bfloat16==|bfloat16==|g' rknn-toolkit2/doc/requirements_cp36-*.txt
pip3 install -r rknn-toolkit2/doc/requirements_cp36-*.txt

- Install RKNN-Toolkit2

pip3 install rknn-toolkit2/packages/rknn_toolkit2*cp36*.whl

- Check whether RKNN-Toolkit2 was installed successfully (press Ctrl+D to exit the Python shell)

python3
>>> from rknn.api import RKNN
>>>

If the installation succeeded, no error message is printed.

An example of a failed installation:

>>> from rknn.api import RKNN
Traceback (most recent call last):
  File "<stdin>", line 1, in <module>
ImportError: No module named 'rknn'
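The same check can be scripted, for example in a CI job, without opening an interactive shell. The sketch below uses the standard library's `importlib.util.find_spec`. `is_installed` is a hypothetical helper name. It only verifies that the module can be located, importing the parent `rknn` package along the way. The interactive `from rknn.api import RKNN` remains the stronger test because it also loads the compiled modules behind `rknn.api`.

```python
import importlib.util


def is_installed(module_name):
    """Return True if module_name can be located, without importing the module itself."""
    try:
        return importlib.util.find_spec(module_name) is not None
    except ModuleNotFoundError:
        # find_spec raises for dotted names whose parent package is missing
        return False


if __name__ == "__main__":
    ok = is_installed("rknn.api")
    print("RKNN-Toolkit2 found" if ok else "RKNN-Toolkit2 NOT found")
```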

Model Conversion Demo

The following example shows how to convert a TFLite model (mobilenet_v1_1.0_224.tflite) to an RKNN model (mobilenet_v1.rknn) on a PC:

cd rknn-toolkit2/examples/tflite/mobilenet_v1

python3 test.py

W __init__: rknn-toolkit2 version: 1.4.0-22dcfef4

--> Config model

W config: 'target_platform' is None, use rk3566 as default, Please set according to the actual platform!

done

--> Loading model

2023-08-10 16:01:46.252263: W tensorflow/stream_executor/platform/default/dso_loader.cc:64] Could not load dynamic library 'libcudart.so.11.0'; dlerror: libcudart.so.11.0: cannot open shared object file: No such file or directory; LD_LIBRARY_PATH: /media/jt/8T/aka/RK_NPU_SDK_V1.4.0/venv/lib/python3.6/site-packages/cv2/../../lib64:

2023-08-10 16:01:46.252283: I tensorflow/stream_executor/cuda/cudart_stub.cc:29] Ignore above cudart dlerror if you do not have a GPU set up on your machine.

done

--> Building model

I base_optimize ...

I base_optimize done.

I

I fold_constant ...

I fold_constant done.

I

I correct_ops ...

I correct_ops done.

I

I fuse_ops ...

I fuse_ops results:

I swap_reshape_softmax: remove node = ['MobilenetV1/Logits/SpatialSqueeze', 'MobilenetV1/Predictions/Reshape_1'], add node = ['MobilenetV1/Predictions/Reshape_1', 'MobilenetV1/Logits/SpatialSqueeze']

I convert_avgpool_to_global: remove node = ['MobilenetV1/Logits/AvgPool_1a/AvgPool'], add node = ['MobilenetV1/Logits/AvgPool_1a/AvgPool_2global']

I convert_global_avgpool_to_conv: remove node = ['MobilenetV1/Logits/AvgPool_1a/AvgPool_2global'], add node = ['MobilenetV1/Logits/AvgPool_1a/AvgPool']

I fold_constant ...

I fold_constant done.

I fuse_ops done.

I

I sparse_weight ...

I sparse_weight done.

I

Analysing : 100%|█████████████████████████████████████████████████| 58/58 [00:00<00:00, 2739.15it/s]

Quantizating : 100%|████████████████████████████████████████████████| 58/58 [00:00<00:00, 95.72it/s]

I

I fuse_ops ...

I fuse_ops with passes results:

I fuse_two_dataconvert: remove node = ['MobilenetV1/Predictions/Reshape_1_int8__cvt_float16_int8', 'MobilenetV1/Predictions/Reshape_1__cvt_int8_to_float16'], add node = ['MobilenetV1/Predictions/Reshape_1_int8__cvt_float16_int8']

I remove_invalid_dataconvert: remove node = ['MobilenetV1/Predictions/Reshape_1_int8__cvt_float16_int8']

I fuse_ops done.

I

I quant_optimizer ...

I quant_optimizer results:

I adjust_relu: ['Relu6__57', 'Relu6__55', 'Relu6__53', 'Relu6__51', 'Relu6__49', 'Relu6__47', 'Relu6__45', 'Relu6__43', 'Relu6__41', 'Relu6__39', 'Relu6__37', 'Relu6__35', 'Relu6__33', 'Relu6__31', 'Relu6__29', 'Relu6__27', 'Relu6__25', 'Relu6__23', 'Relu6__21', 'Relu6__19', 'Relu6__17', 'Relu6__15', 'Relu6__13', 'Relu6__11', 'Relu6__9', 'Relu6__7', 'Relu6__5']

I quant_optimizer done.

I

W build: The default input dtype of 'input' is changed from 'float32' to 'int8' in rknn model for performance!

Please take care of this change when deploy rknn model with Runtime API!

I rknn building ...

I RKNN: [16:01:52.341] compress = 0, conv_arith_fuse = 1, global_fuse = 1, multi-core-model-mode =

I RKNN: librknnc version: 1.4.0 (3b4520e4f@2022-09-05T12:50:09)

D RKNN: [16:01:52.354] RKNN is invoked

I RKNN: [16:01:52.394] Meet hybrid type, dtype: float16, tensor: MobilenetV1/Logits/Conv2d_1c_1x1/BiasAdd__float16

I RKNN: [16:01:52.394] Meet hybrid type, dtype: float16, tensor: MobilenetV1/Predictions/Reshape_1

I RKNN: [16:01:52.394] Meet hybrid type, dtype: float16, tensor: MobilenetV1/Predictions/Reshape_1_before

D RKNN: [16:01:52.394] >>>>>> start: N4rknn16RKNNAddFirstConvE

D RKNN: [16:01:52.394] <<<<<<<< end: N4rknn16RKNNAddFirstConvE

D RKNN: [16:01:52.394] >>>>>> start: N4rknn27RKNNEliminateQATDataConvertE

D RKNN: [16:01:52.394] <<<<<<<< end: N4rknn27RKNNEliminateQATDataConvertE

D RKNN: [16:01:52.394] >>>>>> start: N4rknn22RKNNAddReshapeAfterRNNE

D RKNN: [16:01:52.394] <<<<<<<< end: N4rknn22RKNNAddReshapeAfterRNNE

D RKNN: [16:01:52.394] >>>>>> start: N4rknn17RKNNTileGroupConvE

D RKNN: [16:01:52.394] <<<<<<<< end: N4rknn17RKNNTileGroupConvE

D RKNN: [16:01:52.394] >>>>>> start: N4rknn19RKNNTileFcBatchFuseE

D RKNN: [16:01:52.395] <<<<<<<< end: N4rknn19RKNNTileFcBatchFuseE

D RKNN: [16:01:52.395] >>>>>> start: N4rknn15RKNNAddConvBiasE

D RKNN: [16:01:52.395] <<<<<<<< end: N4rknn15RKNNAddConvBiasE

D RKNN: [16:01:52.395] >>>>>> start: N4rknn15RKNNTileChannelE

D RKNN: [16:01:52.395] <<<<<<<< end: N4rknn15RKNNTileChannelE

D RKNN: [16:01:52.395] >>>>>> start: N4rknn18RKNNPerChannelPrepE

D RKNN: [16:01:52.395] <<<<<<<< end: N4rknn18RKNNPerChannelPrepE

D RKNN: [16:01:52.395] >>>>>> start: N4rknn21RKNNFuseOptimizerPassE

D RKNN: [16:01:52.395] <<<<<<<< end: N4rknn21RKNNFuseOptimizerPassE

D RKNN: [16:01:52.395] >>>>>> start: N4rknn11RKNNBnQuantE

D RKNN: [16:01:52.395] <<<<<<<< end: N4rknn11RKNNBnQuantE

D RKNN: [16:01:52.395] >>>>>> start: N4rknn15RKNNTurnAutoPadE

D RKNN: [16:01:52.395] <<<<<<<< end: N4rknn15RKNNTurnAutoPadE

D RKNN: [16:01:52.395] >>>>>> start: N4rknn16RKNNInitRNNConstE

D RKNN: [16:01:52.395] <<<<<<<< end: N4rknn16RKNNInitRNNConstE

D RKNN: [16:01:52.395] >>>>>> start: N4rknn17RKNNInitCastConstE

D RKNN: [16:01:52.395] <<<<<<<< end: N4rknn17RKNNInitCastConstE

D RKNN: [16:01:52.395] >>>>>> start: N4rknn20RKNNMultiSurfacePassE

D RKNN: [16:01:52.395] <<<<<<<< end: N4rknn20RKNNMultiSurfacePassE

D RKNN: [16:01:52.395] >>>>>> start: N4rknn20RKNNAddSecondaryNodeE

D RKNN: [16:01:52.395] <<<<<<<< end: N4rknn20RKNNAddSecondaryNodeE

D RKNN: [16:01:52.395] >>>>>> start: N4rknn23RKNNProfileAnalysisPassE

D RKNN: [16:01:52.395] <<<<<<<< end: N4rknn23RKNNProfileAnalysisPassE

D RKNN: [16:01:52.395] >>>>>> start: OpEmit

D RKNN: [16:01:52.395] <<<<<<<< end: OpEmit

D RKNN: [16:01:52.395] >>>>>> start: OpEmit

D RKNN: [16:01:52.396] <<<<<<<< end: OpEmit

D RKNN: [16:01:52.396] >>>>>> start: N4rknn21RKNNOperatorIdGenPassE

D RKNN: [16:01:52.397] <<<<<<<< end: N4rknn21RKNNOperatorIdGenPassE

D RKNN: [16:01:52.397] >>>>>> start: N4rknn23RKNNWeightTransposePassE

D RKNN: [16:01:52.541] <<<<<<<< end: N4rknn23RKNNWeightTransposePassE

D RKNN: [16:01:52.541] >>>>>> start: N4rknn26RKNNCPUWeightTransposePassE

D RKNN: [16:01:52.541] <<<<<<<< end: N4rknn26RKNNCPUWeightTransposePassE

D RKNN: [16:01:52.541] >>>>>> start: N4rknn18RKNNModelBuildPassE

D RKNN: [16:01:52.542] ID OpType DataType Target InputShape OutputShape DDR Cycles NPU Cycles Total Cycles Time(us) MacUsage(%) RW(KB) FullName

D RKNN: [16:01:52.542] 0 InputOperator INT8 CPU \ (1,3,224,224) 0 0 0 0 \ 147.00 InputOperator:input

D RKNN: [16:01:52.542] 1 ConvClip INT8 NPU (1,3,224,224),(32,3,3,3),(32) (1,32,112,112) 0 0 0 0 \ 541.50 Conv:MobilenetV1/MobilenetV1/Conv2d_0/Relu6

D RKNN: [16:01:52.542] 2 ConvClip INT8 NPU (1,32,112,112),(1,32,3,3),(32) (1,32,112,112) 0 0 0 0 \ 784.53 Conv:MobilenetV1/MobilenetV1/Conv2d_1_depthwise/Relu6

D RKNN: [16:01:52.542] 3 ConvClip INT8 NPU (1,32,112,112),(64,32,1,1),(64) (1,64,112,112) 0 0 0 0 \ 1178.50 Conv:MobilenetV1/MobilenetV1/Conv2d_1_pointwise/Relu6

D RKNN: [16:01:52.542] 4 ConvClip INT8 NPU (1,64,112,112),(1,64,3,3),(64) (1,64,56,56) 0 0 0 0 \ 981.06 Conv:MobilenetV1/MobilenetV1/Conv2d_2_depthwise/Relu6

D RKNN: [16:01:52.542] 5 ConvClip INT8 NPU (1,64,56,56),(128,64,1,1),(128) (1,128,56,56) 0 0 0 0 \ 597.00 Conv:MobilenetV1/MobilenetV1/Conv2d_2_pointwise/Relu6

D RKNN: [16:01:52.542] 6 ConvClip INT8 NPU (1,128,56,56),(1,128,3,3),(128) (1,128,56,56) 0 0 0 0 \ 786.12 Conv:MobilenetV1/MobilenetV1/Conv2d_3_depthwise/Relu6

D RKNN: [16:01:52.542] 7 ConvClip INT8 NPU (1,128,56,56),(128,128,1,1),(128) (1,128,56,56) 0 0 0 0 \ 801.00 Conv:MobilenetV1/MobilenetV1/Conv2d_3_pointwise/Relu6

D RKNN: [16:01:52.542] 8 ConvClip INT8 NPU (1,128,56,56),(1,128,3,3),(128) (1,128,28,28) 0 0 0 0 \ 492.12 Conv:MobilenetV1/MobilenetV1/Conv2d_4_depthwise/Relu6

D RKNN: [16:01:52.542] 9 ConvClip INT8 NPU (1,128,28,28),(256,128,1,1),(256) (1,256,28,28) 0 0 0 0 \ 328.00 Conv:MobilenetV1/MobilenetV1/Conv2d_4_pointwise/Relu6

D RKNN: [16:01:52.542] 10 ConvClip INT8 NPU (1,256,28,28),(1,256,3,3),(256) (1,256,28,28) 0 0 0 0 \ 396.25 Conv:MobilenetV1/MobilenetV1/Conv2d_5_depthwise/Relu6

D RKNN: [16:01:52.542] 11 ConvClip INT8 NPU (1,256,28,28),(256,256,1,1),(256) (1,256,28,28) 0 0 0 0 \ 458.00 Conv:MobilenetV1/MobilenetV1/Conv2d_5_pointwise/Relu6

D RKNN: [16:01:52.542] 12 ConvClip INT8 NPU (1,256,28,28),(1,256,3,3),(256) (1,256,14,14) 0 0 0 0 \ 249.25 Conv:MobilenetV1/MobilenetV1/Conv2d_6_depthwise/Relu6

D RKNN: [16:01:52.542] 13 ConvClip INT8 NPU (1,256,14,14),(512,256,1,1),(512) (1,512,14,14) 0 0 0 0 \ 279.00 Conv:MobilenetV1/MobilenetV1/Conv2d_6_pointwise/Relu6

D RKNN: [16:01:52.542] 14 ConvClip INT8 NPU (1,512,14,14),(1,512,3,3),(512) (1,512,14,14) 0 0 0 0 \ 204.50 Conv:MobilenetV1/MobilenetV1/Conv2d_7_depthwise/Relu6

D RKNN: [16:01:52.542] 15 ConvClip INT8 NPU (1,512,14,14),(512,512,1,1),(512) (1,512,14,14) 0 0 0 0 \ 456.00 Conv:MobilenetV1/MobilenetV1/Conv2d_7_pointwise/Relu6

D RKNN: [16:01:52.542] 16 ConvClip INT8 NPU (1,512,14,14),(1,512,3,3),(512) (1,512,14,14) 0 0 0 0 \ 204.50 Conv:MobilenetV1/MobilenetV1/Conv2d_8_depthwise/Relu6

D RKNN: [16:01:52.542] 17 ConvClip INT8 NPU (1,512,14,14),(512,512,1,1),(512) (1,512,14,14) 0 0 0 0 \ 456.00 Conv:MobilenetV1/MobilenetV1/Conv2d_8_pointwise/Relu6

D RKNN: [16:01:52.542] 18 ConvClip INT8 NPU (1,512,14,14),(1,512,3,3),(512) (1,512,14,14) 0 0 0 0 \ 204.50 Conv:MobilenetV1/MobilenetV1/Conv2d_9_depthwise/Relu6

D RKNN: [16:01:52.542] 19 ConvClip INT8 NPU (1,512,14,14),(512,512,1,1),(512) (1,512,14,14) 0 0 0 0 \ 456.00 Conv:MobilenetV1/MobilenetV1/Conv2d_9_pointwise/Relu6

D RKNN: [16:01:52.542] 20 ConvClip INT8 NPU (1,512,14,14),(1,512,3,3),(512) (1,512,14,14) 0 0 0 0 \ 204.50 Conv:MobilenetV1/MobilenetV1/Conv2d_10_depthwise/Relu6

D RKNN: [16:01:52.542] 21 ConvClip INT8 NPU (1,512,14,14),(512,512,1,1),(512) (1,512,14,14) 0 0 0 0 \ 456.00 Conv:MobilenetV1/MobilenetV1/Conv2d_10_pointwise/Relu6

D RKNN: [16:01:52.542] 22 ConvClip INT8 NPU (1,512,14,14),(1,512,3,3),(512) (1,512,14,14) 0 0 0 0 \ 204.50 Conv:MobilenetV1/MobilenetV1/Conv2d_11_depthwise/Relu6

D RKNN: [16:01:52.542] 23 ConvClip INT8 NPU (1,512,14,14),(512,512,1,1),(512) (1,512,14,14) 0 0 0 0 \ 456.00 Conv:MobilenetV1/MobilenetV1/Conv2d_11_pointwise/Relu6

D RKNN: [16:01:52.542] 24 ConvClip INT8 NPU (1,512,14,14),(1,512,3,3),(512) (1,512,7,7) 0 0 0 0 \ 131.00 Conv:MobilenetV1/MobilenetV1/Conv2d_12_depthwise/Relu6

D RKNN: [16:01:52.542] 25 ConvClip INT8 NPU (1,512,7,7),(1024,512,1,1),(1024) (1,1024,7,7) 0 0 0 0 \ 593.50 Conv:MobilenetV1/MobilenetV1/Conv2d_12_pointwise/Relu6

D RKNN: [16:01:52.542] 26 ConvClip INT8 NPU (1,1024,7,7),(1,1024,3,3),(1024) (1,1024,7,7) 0 0 0 0 \ 115.00 Conv:MobilenetV1/MobilenetV1/Conv2d_13_depthwise/Relu6

D RKNN: [16:01:52.542] 27 ConvClip INT8 NPU (1,1024,7,7),(1024,1024,1,1),(1024) (1,1024,7,7) 0 0 0 0 \ 1130.00 Conv:MobilenetV1/MobilenetV1/Conv2d_13_pointwise/Relu6

D RKNN: [16:01:52.542] 28 Conv INT8 NPU (1,1024,7,7),(1,1024,7,7),(1024) (1,1024,1,1) 0 0 0 0 \ 107.00 Conv:MobilenetV1/Logits/AvgPool_1a/AvgPool

D RKNN: [16:01:52.542] 29 Conv INT8 NPU (1,1024,1,1),(1001,1024,1,1),(1001) (1,1001,1,1) 0 0 0 0 \ 1010.86 Conv:MobilenetV1/Logits/Conv2d_1c_1x1/BiasAdd

D RKNN: [16:01:52.542] 30 exDataConvert INT8 NPU (1,1001,1,1) (1,1001,1,1) 0 0 0 0 \ 2.95 exDataConvert:MobilenetV1/Logits/Conv2d_1c_1x1/BiasAdd__cvt_int8_float16

D RKNN: [16:01:52.542] 31 Softmax FLOAT16 CPU (1,1001,1,1) (1,1001,1,1) 0 0 0 0 \ 3.92 Softmax:MobilenetV1/Predictions/Reshape_1

D RKNN: [16:01:52.542] 32 Reshape FLOAT16 CPU (1,1001,1,1),(2) (1,1001) 0 0 0 0 \ 3.93 Reshape:MobilenetV1/Logits/SpatialSqueeze

D RKNN: [16:01:52.542] 33 OutputOperator FLOAT16 CPU (1,1001),(1,1001) \ 0 0 0 0 \ 3.91 OutputOperator:MobilenetV1/Predictions/Reshape_1

D RKNN: [16:01:52.542] <<<<<<<< end: N4rknn18RKNNModelBuildPassE

D RKNN: [16:01:52.542] >>>>>> start: N4rknn21RKNNMemStatisticsPassE

D RKNN: [16:01:52.542] -------------------------------------------------------------------------------------------------------------+---------------------------------

D RKNN: [16:01:52.542] ID User Tensor DataType OrigShape NativeShape | [Start End) Size

D RKNN: [16:01:52.542] -------------------------------------------------------------------------------------------------------------+---------------------------------

D RKNN: [16:01:52.542] 1 ConvClip input INT8 (1,3,224,224) (1,1,224,224,3) | 0x00000000 0x00024c00 0x00024c00

D RKNN: [16:01:52.542] 2 ConvClip Relu6__5:0 INT8 (1,32,112,112) (1,4,112,112,8) | 0x00024c00 0x00086c00 0x00062000

D RKNN: [16:01:52.542] 3 ConvClip Relu6__7:0 INT8 (1,32,112,112) (1,4,112,112,8) | 0x00086c00 0x000e8c00 0x00062000

D RKNN: [16:01:52.542] 4 ConvClip Relu6__9:0 INT8 (1,64,112,112) (1,8,112,112,8) | 0x000e8c00*0x001acc00 0x000c4000

D RKNN: [16:01:52.542] 5 ConvClip Relu6__11:0 INT8 (1,64,56,56) (1,8,56,56,8) | 0x00000000 0x00031000 0x00031000

D RKNN: [16:01:52.542] 6 ConvClip Relu6__13:0 INT8 (1,128,56,56) (1,16,56,56,8) | 0x00031000 0x00093000 0x00062000

D RKNN: [16:01:52.542] 7 ConvClip Relu6__15:0 INT8 (1,128,56,56) (1,16,56,56,8) | 0x00093000 0x000f5000 0x00062000

D RKNN: [16:01:52.542] 8 ConvClip Relu6__17:0 INT8 (1,128,56,56) (1,16,56,56,8) | 0x00000000 0x00062000 0x00062000

D RKNN: [16:01:52.542] 9 ConvClip Relu6__19:0 INT8 (1,128,28,28) (1,16,28,28,8) | 0x00062000 0x0007a800 0x00018800

D RKNN: [16:01:52.542] 10 ConvClip Relu6__21:0 INT8 (1,256,28,28) (1,32,28,28,8) | 0x00000000 0x00031000 0x00031000

D RKNN: [16:01:52.542] 11 ConvClip Relu6__23:0 INT8 (1,256,28,28) (1,32,28,28,8) | 0x00031000 0x00062000 0x00031000

D RKNN: [16:01:52.542] 12 ConvClip Relu6__25:0 INT8 (1,256,28,28) (1,34,28,28,8) | 0x00062000 0x00096100 0x00034100

D RKNN: [16:01:52.542] 13 ConvClip Relu6__27:0 INT8 (1,256,14,14) (1,32,14,14,8) | 0x00000000 0x0000c400 0x0000c400

D RKNN: [16:01:52.542] 14 ConvClip Relu6__29:0 INT8 (1,512,14,14) (1,64,14,14,8) | 0x0000c400 0x00024c00 0x00018800

D RKNN: [16:01:52.542] 15 ConvClip Relu6__31:0 INT8 (1,512,14,14) (1,64,14,14,8) | 0x00024c00 0x0003d400 0x00018800

D RKNN: [16:01:52.542] 16 ConvClip Relu6__33:0 INT8 (1,512,14,14) (1,66,14,14,8) | 0x00000000 0x00019440 0x00019440

D RKNN: [16:01:52.542] 17 ConvClip Relu6__35:0 INT8 (1,512,14,14) (1,64,14,14,8) | 0x00019440 0x00031c40 0x00018800

D RKNN: [16:01:52.542] 18 ConvClip Relu6__37:0 INT8 (1,512,14,14) (1,66,14,14,8) | 0x00000000 0x00019440 0x00019440

D RKNN: [16:01:52.542] 19 ConvClip Relu6__39:0 INT8 (1,512,14,14) (1,64,14,14,8) | 0x00019440 0x00031c40 0x00018800

D RKNN: [16:01:52.542] 20 ConvClip Relu6__41:0 INT8 (1,512,14,14) (1,66,14,14,8) | 0x00000000 0x00019440 0x00019440

D RKNN: [16:01:52.542] 21 ConvClip Relu6__43:0 INT8 (1,512,14,14) (1,64,14,14,8) | 0x00019440 0x00031c40 0x00018800

D RKNN: [16:01:52.542] 22 ConvClip Relu6__45:0 INT8 (1,512,14,14) (1,66,14,14,8) | 0x00000000 0x00019440 0x00019440

D RKNN: [16:01:52.542] 23 ConvClip Relu6__47:0 INT8 (1,512,14,14) (1,64,14,14,8) | 0x00019440 0x00031c40 0x00018800

D RKNN: [16:01:52.542] 24 ConvClip Relu6__49:0 INT8 (1,512,14,14) (1,66,14,14,8) | 0x00000000 0x00019440 0x00019440

D RKNN: [16:01:52.542] 25 ConvClip Relu6__51:0 INT8 (1,512,7,7) (1,67,7,7,8) | 0x00019440 0x0001fc40 0x00006800

D RKNN: [16:01:52.542] 26 ConvClip Relu6__53:0 INT8 (1,1024,7,7) (1,137,7,7,8) | 0x00000000 0x0000d340 0x0000d340

D RKNN: [16:01:52.542] 27 ConvClip Relu6__55:0 INT8 (1,1024,7,7) (1,135,7,7,8) | 0x0000d340 0x0001a340 0x0000d000

D RKNN: [16:01:52.542] 28 Conv Relu6__57:0 INT8 (1,1024,7,7) (1,137,7,7,8) | 0x00000000 0x0000d340 0x0000d340

D RKNN: [16:01:52.542] 29 Conv MobilenetV1/Logits/AvgPool_1a/AvgPool INT8 (1,1024,1,1) (1,128,1,1,8) | 0x0000d340 0x0000d740 0x00000400

D RKNN: [16:01:52.542] 30 exDataConvert MobilenetV1/Logits/Conv2d_1c_1x1/BiasAdd INT8 (1,1001,1,1) (1,126,1,1,8) | 0x00000000 0x000003f0 0x000003f0

D RKNN: [16:01:52.542] 31 Softmax MobilenetV1/Logits/Conv2d_1c_1x1/BiasAdd__float16 FLOAT16 (1,1001,1,1) (1,502,1,1,4) | 0x00000400 0x00000bd8 0x000007d8

D RKNN: [16:01:52.542] 32 Reshape MobilenetV1/Predictions/Reshape_1_before FLOAT16 (1,1001,1,1) (1,1001,1,1) | 0x00000c00 0x000013d8 0x000007d8

D RKNN: [16:01:52.542] 33 OutputOperator MobilenetV1/Predictions/Reshape_1 FLOAT16 (1,1001) (1,1001) | 0x00000000 0x000007e0 0x000007e0

D RKNN: [16:01:52.542] -------------------------------------------------------------------------------------------------------------+---------------------------------

D RKNN: [16:01:52.542] -------------------------------------------------------------------------------------------------------+---------------------------------

D RKNN: [16:01:52.542] ID User Tensor DataType OrigShape | [Start End) Size

D RKNN: [16:01:52.542] -------------------------------------------------------------------------------------------------------+---------------------------------

D RKNN: [16:01:52.542] 1 ConvClip const_fold_opt__306 INT8 (32,3,3,3) | 0x000fba80 0x000fc380 0x00000900

D RKNN: [16:01:52.542] 1 ConvClip MobilenetV1/MobilenetV1/Conv2d_0/Conv2D_bias INT32 (32) | 0x004198c0 0x004199c0 0x00000100

D RKNN: [16:01:52.542] 2 ConvClip const_fold_opt__285 INT8 (1,32,3,3) | 0x00243ec0 0x00243fe0 0x00000120

D RKNN: [16:01:52.542] 2 ConvClip MobilenetV1/MobilenetV1/Conv2d_1_depthwise/depthwise_bias INT32 (32) | 0x0040e7c0 0x0040e8c0 0x00000100

D RKNN: [16:01:52.542] 3 ConvClip const_fold_opt__303 INT8 (64,32,1,1) | 0x000fd580 0x000fdd80 0x00000800

D RKNN: [16:01:52.542] 3 ConvClip MobilenetV1/MobilenetV1/Conv2d_1_pointwise/Conv2D_bias INT32 (64) | 0x0040e5c0 0x0040e7c0 0x00000200

D RKNN: [16:01:52.542] 4 ConvClip const_fold_opt__301 INT8 (1,64,3,3) | 0x000fdd80 0x000fdfc0 0x00000240

D RKNN: [16:01:52.542] 4 ConvClip MobilenetV1/MobilenetV1/Conv2d_2_depthwise/depthwise_bias INT32 (64) | 0x0040e3c0 0x0040e5c0 0x00000200

D RKNN: [16:01:52.542] 5 ConvClip const_fold_opt__299 INT8 (128,64,1,1) | 0x000fdfc0 0x000fffc0 0x00002000

D RKNN: [16:01:52.542] 5 ConvClip MobilenetV1/MobilenetV1/Conv2d_2_pointwise/Conv2D_bias INT32 (128) | 0x0040dfc0 0x0040e3c0 0x00000400

D RKNN: [16:01:52.542] 6 ConvClip const_fold_opt__311 INT8 (1,128,3,3) | 0x00000000 0x00000480 0x00000480

D RKNN: [16:01:52.542] 6 ConvClip MobilenetV1/MobilenetV1/Conv2d_3_depthwise/depthwise_bias INT32 (128) | 0x0040dbc0 0x0040dfc0 0x00000400

D RKNN: [16:01:52.542] 7 ConvClip const_fold_opt__281 INT8 (128,128,1,1) | 0x00284000 0x00288000 0x00004000

D RKNN: [16:01:52.542] 7 ConvClip MobilenetV1/MobilenetV1/Conv2d_3_pointwise/Conv2D_bias INT32 (128) | 0x0040d7c0 0x0040dbc0 0x00000400

D RKNN: [16:01:52.542] 8 ConvClip const_fold_opt__269 INT8 (1,128,3,3) | 0x002c9200 0x002c9680 0x00000480

D RKNN: [16:01:52.542] 8 ConvClip MobilenetV1/MobilenetV1/Conv2d_4_depthwise/depthwise_bias INT32 (128) | 0x0040d3c0 0x0040d7c0 0x00000400

D RKNN: [16:01:52.542] 9 ConvClip const_fold_opt__264 INT8 (256,128,1,1) | 0x00309680 0x00311680 0x00008000

D RKNN: [16:01:52.542] 9 ConvClip MobilenetV1/MobilenetV1/Conv2d_4_pointwise/Conv2D_bias INT32 (256) | 0x0040cbc0 0x0040d3c0 0x00000800

D RKNN: [16:01:52.542] 10 ConvClip const_fold_opt__294 INT8 (1,256,3,3) | 0x001011c0 0x00101ac0 0x00000900

D RKNN: [16:01:52.542] 10 ConvClip MobilenetV1/MobilenetV1/Conv2d_5_depthwise/depthwise_bias INT32 (256) | 0x0040c3c0 0x0040cbc0 0x00000800

D RKNN: [16:01:52.542] 11 ConvClip const_fold_opt__258 INT8 (256,256,1,1) | 0x003116c0 0x003216c0 0x00010000

D RKNN: [16:01:52.542] 11 ConvClip MobilenetV1/MobilenetV1/Conv2d_5_pointwise/Conv2D_bias INT32 (256) | 0x0040bbc0 0x0040c3c0 0x00000800

D RKNN: [16:01:52.542] 12 ConvClip const_fold_opt__256 INT8 (1,256,3,3) | 0x003216c0 0x00321fc0 0x00000900

D RKNN: [16:01:52.542] 12 ConvClip MobilenetV1/MobilenetV1/Conv2d_6_depthwise/depthwise_bias INT32 (256) | 0x0040b3c0 0x0040bbc0 0x00000800

D RKNN: [16:01:52.542] 13 ConvClip const_fold_opt__252 INT8 (512,256,1,1) | 0x003a1fc0 0x003c1fc0 0x00020000

D RKNN: [16:01:52.542] 13 ConvClip MobilenetV1/MobilenetV1/Conv2d_6_pointwise/Conv2D_bias INT32 (512) | 0x0040a3c0 0x0040b3c0 0x00001000

D RKNN: [16:01:52.542] 14 ConvClip const_fold_opt__291 INT8 (1,512,3,3) | 0x00141ac0 0x00142cc0 0x00001200

D RKNN: [16:01:52.542] 14 ConvClip MobilenetV1/MobilenetV1/Conv2d_7_depthwise/depthwise_bias INT32 (512) | 0x004093c0 0x0040a3c0 0x00001000

D RKNN: [16:01:52.542] 15 ConvClip const_fold_opt__246 INT8 (512,512,1,1) | 0x003c43c0 0x004043c0 0x00040000

D RKNN: [16:01:52.542] 15 ConvClip MobilenetV1/MobilenetV1/Conv2d_7_pointwise/Conv2D_bias INT32 (512) | 0x004083c0 0x004093c0 0x00001000

D RKNN: [16:01:52.542] 16 ConvClip const_fold_opt__304 INT8 (1,512,3,3) | 0x000fc380 0x000fd580 0x00001200

D RKNN: [16:01:52.542] 16 ConvClip MobilenetV1/MobilenetV1/Conv2d_8_depthwise/depthwise_bias INT32 (512) | 0x004073c0 0x004083c0 0x00001000

D RKNN: [16:01:52.542] 17 ConvClip const_fold_opt__292 INT8 (512,512,1,1) | 0x00101ac0 0x00141ac0 0x00040000

D RKNN: [16:01:52.542] 17 ConvClip MobilenetV1/MobilenetV1/Conv2d_8_pointwise/Conv2D_bias INT32 (512) | 0x004063c0 0x004073c0 0x00001000

D RKNN: [16:01:52.542] 18 ConvClip const_fold_opt__270 INT8 (1,512,3,3) | 0x002c8000 0x002c9200 0x00001200

D RKNN: [16:01:52.542] 18 ConvClip MobilenetV1/MobilenetV1/Conv2d_9_depthwise/depthwise_bias INT32 (512) | 0x004053c0 0x004063c0 0x00001000

D RKNN: [16:01:52.542] 19 ConvClip const_fold_opt__265 INT8 (512,512,1,1) | 0x002c9680 0x00309680 0x00040000

D RKNN: [16:01:52.542] 19 ConvClip MobilenetV1/MobilenetV1/Conv2d_9_pointwise/Conv2D_bias INT32 (512) | 0x004043c0 0x004053c0 0x00001000

D RKNN: [16:01:52.542] 20 ConvClip const_fold_opt__289 INT8 (1,512,3,3) | 0x00142cc0 0x00143ec0 0x00001200

D RKNN: [16:01:52.542] 20 ConvClip MobilenetV1/MobilenetV1/Conv2d_10_depthwise/depthwise_bias INT32 (512) | 0x004188c0 0x004198c0 0x00001000

D RKNN: [16:01:52.542] 21 ConvClip const_fold_opt__282 INT8 (512,512,1,1) | 0x00244000 0x00284000 0x00040000

D RKNN: [16:01:52.542] 21 ConvClip MobilenetV1/MobilenetV1/Conv2d_10_pointwise/Conv2D_bias INT32 (512) | 0x004178c0 0x004188c0 0x00001000

D RKNN: [16:01:52.542] 22 ConvClip const_fold_opt__308 INT8 (1,512,3,3) | 0x000fa880 0x000fba80 0x00001200

D RKNN: [16:01:52.542] 22 ConvClip MobilenetV1/MobilenetV1/Conv2d_11_depthwise/depthwise_bias INT32 (512) | 0x004168c0 0x004178c0 0x00001000

D RKNN: [16:01:52.542] 23 ConvClip const_fold_opt__276 INT8 (512,512,1,1) | 0x00288000 0x002c8000 0x00040000

D RKNN: [16:01:52.542] 23 ConvClip MobilenetV1/MobilenetV1/Conv2d_11_pointwise/Conv2D_bias INT32 (512) | 0x004158c0 0x004168c0 0x00001000

D RKNN: [16:01:52.542] 24 ConvClip const_fold_opt__298 INT8 (1,512,3,3) | 0x000fffc0 0x001011c0 0x00001200

D RKNN: [16:01:52.542] 24 ConvClip MobilenetV1/MobilenetV1/Conv2d_12_depthwise/depthwise_bias INT32 (512) | 0x004148c0 0x004158c0 0x00001000

D RKNN: [16:01:52.542] 25 ConvClip const_fold_opt__254 INT8 (1024,512,1,1) | 0x00321fc0 0x003a1fc0 0x00080000

D RKNN: [16:01:52.542] 25 ConvClip MobilenetV1/MobilenetV1/Conv2d_12_pointwise/Conv2D_bias INT32 (1024) | 0x004128c0 0x004148c0 0x00002000

D RKNN: [16:01:52.542] 26 ConvClip const_fold_opt__250 INT8 (1,1024,3,3) | 0x003c1fc0 0x003c43c0 0x00002400

D RKNN: [16:01:52.542] 26 ConvClip MobilenetV1/MobilenetV1/Conv2d_13_depthwise/depthwise_bias INT32 (1024) | 0x004108c0 0x004128c0 0x00002000

D RKNN: [16:01:52.542] 27 ConvClip const_fold_opt__288 INT8 (1024,1024,1,1) | 0x00143ec0 0x00243ec0 0x00100000

D RKNN: [16:01:52.542] 27 ConvClip MobilenetV1/MobilenetV1/Conv2d_13_pointwise/Conv2D_bias INT32 (1024) | 0x0040e8c0 0x004108c0 0x00002000

D RKNN: [16:01:52.542] 28 Conv MobilenetV1/Logits/AvgPool_1a/AvgPool_2global_2conv_weight0 INT8 (1,1024,7,7) | 0x0041b940 0x00427d40 0x0000c400

D RKNN: [16:01:52.542] 28 Conv MobilenetV1/Logits/AvgPool_1a/AvgPool_2global_2conv_weight0_bias INT32 (1024) | 0x00427d40*0x00429d40 0x00002000

D RKNN: [16:01:52.542] 29 Conv const_fold_opt__309 INT8 (1001,1024,1,1) | 0x00000480 0x000fa880 0x000fa400

D RKNN: [16:01:52.542] 29 Conv MobilenetV1/Logits/Conv2d_1c_1x1/Conv2D_bias INT32 (1001) | 0x004199c0 0x0041b940 0x00001f80

D RKNN: [16:01:52.542] 32 Reshape const_fold_opt__259 INT64 (2) | 0x00311680 0x00311690 0x00000010

D RKNN: [16:01:52.542] -------------------------------------------------------------------------------------------------------+---------------------------------

D RKNN: [16:01:52.543] ----------------------------------------

D RKNN: [16:01:52.543] Total Weight Memory Size: 4365632

D RKNN: [16:01:52.543] Total Internal Memory Size: 1756160

D RKNN: [16:01:52.543] Predict Internal Memory RW Amount: 10331296

D RKNN: [16:01:52.543] Predict Weight Memory RW Amount: 4365552

D RKNN: [16:01:52.543] ----------------------------------------

D RKNN: [16:01:52.543] <<<<<<<< end: N4rknn21RKNNMemStatisticsPassE

I rknn buiding done

done

--> Export rknn model

done

--> Init runtime environment

W init_runtime: Target is None, use simulator!

done

--> Running model

Analysing : 100%|█████████████████████████████████████████████████| 60/60 [00:00<00:00, 2740.54it/s]

Preparing : 100%|██████████████████████████████████████████████████| 60/60 [00:00<00:00, 136.33it/s]

mobilenet_v1

-----TOP 5-----

[156]: 0.9345703125

[155]: 0.0570068359375

[205]: 0.00429534912109375

[284]: 0.003116607666015625

[285]: 0.00017178058624267578

done
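The memory statistics in the build log can be cross-checked by hand. The numbers above are consistent with the following reading, which is an interpretation of this particular log rather than documented toolkit behavior:

- An INT8 tensor with NativeShape `(1, C1, H, W, C2)` occupies `C1*H*W*C2` bytes, where C1 is at least `ceil(C / C2)`. The toolkit sometimes pads further, e.g. 34 instead of 32 for ID 12, and aligns some 7x7 tensors.
- Each `Size` is `End - Start`.
- Both `Total ... Memory Size` lines equal the highest `End` address, which is marked with `*` in the tables.
- For layers 1 to 3, the `RW(KB)` column equals the input, output, weight and bias region sizes added together and divided by 1024.

The helper names below are illustrative.

```python
import math


def native_size(c, h, w, c2):
    """Minimum bytes of an INT8 NC1HWC2 tensor: ceil(C / C2) * H * W * C2."""
    return math.ceil(c / c2) * h * w * c2


def region_size(start, end):
    """Size of a [Start, End) region whose bounds are hex strings from the log."""
    return int(end, 16) - int(start, 16)


def total_size(regions):
    """Total buffer size, read as the highest End address among the regions."""
    return max(int(end, 16) for _start, end in regions)


def rw_kb(*sizes):
    """Sum of region sizes in bytes, expressed in KB (1 KB = 1024 bytes)."""
    return sum(sizes) / 1024


if __name__ == "__main__":
    # Internal table, ID 2: (1,32,112,112) INT8 -> native (1,4,112,112,8)
    print(hex(native_size(32, 112, 112, 8)))            # 0x62000
    # Internal table, ID 4: End - Start
    print(hex(region_size("0x000e8c00", "0x001acc00")))  # 0xc4000
    internal = [("0x00000000", "0x00024c00"), ("0x00024c00", "0x00086c00"),
                ("0x00086c00", "0x000e8c00"), ("0x000e8c00", "0x001acc00")]
    weights = [("0x004199c0", "0x0041b940"), ("0x00427d40", "0x00429d40")]
    print(total_size(internal), total_size(weights))    # 1756160 4365632
    # Layer 1 (ConvClip): input 0x24c00 + output 0x62000 + weight 0x900 + bias 0x100
    print(rw_kb(0x24c00, 0x62000, 0x900, 0x100))        # 541.5
```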