Distributed Tensorflow in Kubernetes

From ESS-WIKI


Latest revision as of 04:17, 16 November 2018


Introduction

Distributed TensorFlow (clustering) can speed up your training. Running distributed TensorFlow in Kubernetes makes it easy to:

- Add k8s nodes to extend computing capacity

- Simplify the work of setting up a distributed TensorFlow cluster

This topic describes how to run a distributed TensorFlow training job on Kubernetes.

TFJob

- TFJob is a Kubernetes CRD (Custom Resource Definition) created by Kubeflow.

- TFJob can help you to set
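For orientation, a TFJob manifest for this example might look like the sketch below. It is an assumption, not the contents of the downloadable tf-dist-iris.yaml: the `kubeflow.org/v1alpha2` API version matches Kubeflow releases from late 2018 and must be changed to whatever version your cluster's TFJob CRD serves, and the replica counts are illustrative. The job name `tf-dist` and namespace `kubeflow` are inferred from the `tf-dist-chief-0` pod in the log command of step 7. The TFJob operator creates one pod per replica and sets the `TF_CONFIG` environment variable in each, so the training code can find its peers.

```shell
#!/bin/sh
# Sketch of a TFJob manifest, written to a separate file so a downloaded
# tf-dist-iris.yaml is not overwritten.
# ASSUMPTIONS: apiVersion kubeflow.org/v1alpha2; illustrative replica counts.
cat > tf-dist-iris-sketch.yaml <<'EOF'
apiVersion: kubeflow.org/v1alpha2
kind: TFJob
metadata:
  name: tf-dist          # pods are named tf-dist-<type>-<index>, e.g. tf-dist-chief-0
  namespace: kubeflow
spec:
  tfReplicaSpecs:
    Chief:               # coordinates training and writes checkpoints
      replicas: 1
      restartPolicy: Never
      template:
        spec:
          containers:
          - name: tensorflow   # the operator looks for a container with this name
            image: ecgwc/tf-iris:dist
    PS:                  # parameter server holding the model variables
      replicas: 1
      restartPolicy: Never
      template:
        spec:
          containers:
          - name: tensorflow
            image: ecgwc/tf-iris:dist
    Worker:              # additional workers computing gradients
      replicas: 2
      restartPolicy: Never
      template:
        spec:
          containers:
          - name: tensorflow
            image: ecgwc/tf-iris:dist
EOF
```

All replicas run the same image; only the `TF_CONFIG` value injected by the operator tells each pod which role it plays.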

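Prerequisites

- You must know the basic concepts of distributed TensorFlow: [Distributed TensorFlow](https://www.tensorflow.org/deploy/distributed)

- You must know how to write distributed TensorFlow training code, for example with [train_and_evaluate](https://www.tensorflow.org/api_docs/python/tf/estimator/train_and_evaluate)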
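`train_and_evaluate` discovers the cluster through the `TF_CONFIG` environment variable, which the TFJob operator fills in for each pod. The sketch below shows the general shape of that JSON for one worker of a job with one chief, one parameter server and two workers. The host names follow the `<job>-<type>-<index>` pod naming and 2222 is the operator's default port, but both are illustrative assumptions; check a running pod's environment for the exact value.

```shell
#!/bin/sh
# Illustrative TF_CONFIG for worker 0. On Kubernetes the TFJob operator sets
# this; exporting it by hand only mimics a cluster member for local testing.
# ASSUMPTION: host names and port are examples, not values read from a cluster.
TF_CONFIG='{
  "cluster": {
    "chief":  ["tf-dist-chief-0:2222"],
    "ps":     ["tf-dist-ps-0:2222"],
    "worker": ["tf-dist-worker-0:2222", "tf-dist-worker-1:2222"]
  },
  "task": {"type": "worker", "index": 0}
}'
export TF_CONFIG
echo "$TF_CONFIG"
```

`cluster` is identical in every pod; only `task` differs, which is how the same training script behaves as chief, parameter server or worker.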

Steps

1. Create (or download) the training source and Dockerfile, File:Iris train and eval.zip, and unzip them into the same folder.
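If you create the files yourself instead of downloading the archive, a Dockerfile along these lines is enough for a CPU-only example. The base image tag `tensorflow/tensorflow:1.12.0` and the script name `iris_train_and_eval.py` are hypothetical placeholders; use the file names from your own source and a TensorFlow version your code supports.

```shell
#!/bin/sh
# Writes a minimal Dockerfile sketch next to the training source.
# HYPOTHETICAL: base image tag and script name; adjust them to your code.
cat > Dockerfile.sketch <<'EOF'
FROM tensorflow/tensorflow:1.12.0
WORKDIR /app
# Copy the training source into the image
COPY . /app
# Start training; on Kubernetes, TF_CONFIG is injected by the TFJob operator
CMD ["python", "iris_train_and_eval.py"]
EOF
```

The file is named `Dockerfile.sketch` so it does not replace a downloaded `Dockerfile`; build from it with `docker build -f Dockerfile.sketch -t ecgwc/tf-iris:dist .`.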

2. Build the training image, where "ecgwc" is the Docker Hub username and "tf-iris:dist" is the image name and tag:

$ docker build -t ecgwc/tf-iris:dist .

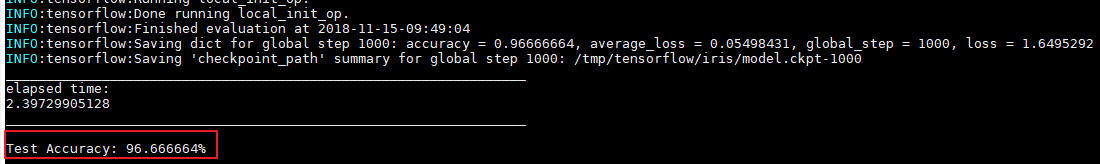

3. Check if trainig docker is workable.

$ docker run --rm ecgwc/tf-iris:dist

4. Push the image to Docker Hub:

$ docker push ecgwc/tf-iris:dist
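Steps 2 to 4 can be collected into a small script so the Docker Hub account is set in one place. `DOCKER_USER` and the function names are hypothetical; the defaults reproduce the image name used above. `docker push` only succeeds after `docker login` with an account that owns the repository.

```shell
#!/bin/sh
# Helpers for steps 2-4. DOCKER_USER defaults to the account used on this page.
DOCKER_USER="${DOCKER_USER:-ecgwc}"
IMAGE_NAME="tf-iris"
IMAGE_TAG="dist"

# Full image reference, e.g. ecgwc/tf-iris:dist
image_ref() {
  printf '%s/%s:%s\n' "$DOCKER_USER" "$IMAGE_NAME" "$IMAGE_TAG"
}

# Step 2: build the image from the Dockerfile in the current folder
build_image() {
  docker build -t "$(image_ref)" .
}

# Step 3: run training once locally; --rm removes the container afterwards
smoke_test() {
  docker run --rm "$(image_ref)"
}

# Step 4: push to Docker Hub (requires a prior `docker login`)
push_image() {
  docker push "$(image_ref)"
}
```

Usage: set `DOCKER_USER` to your own account, source the script, then run `build_image && smoke_test && push_image` so the push happens only if the local run succeeds.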

5. Create (or download) the YAML file for distributed TensorFlow: File:Tf-dist-iris.zip

6. Deploy the YAML file to Kubernetes:

$ kubectl create -f tf-dist-iris.yaml
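Before creating the job it can help to confirm that Kubeflow's TFJob CRD is installed; otherwise the cluster does not know the `TFJob` kind and `kubectl create` rejects the manifest. The sketch below assumes the CRD name `tfjobs.kubeflow.org` and adds a hypothetical `DRY_RUN` switch that only prints the commands.

```shell
#!/bin/sh
# Runs a command, or only prints it when DRY_RUN is set (hypothetical switch).
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Step 6: check that the TFJob CRD exists, then create the job.
deploy_tfjob() {
  manifest="${1:-tf-dist-iris.yaml}"
  run kubectl get crd tfjobs.kubeflow.org || {
    echo "TFJob CRD not found; is Kubeflow installed?" >&2
    return 1
  }
  run kubectl create -f "$manifest"
}
```

`DRY_RUN=1 deploy_tfjob` prints the two commands without touching the cluster; `deploy_tfjob` alone runs them.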

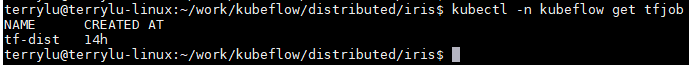

7. Check training status

- Check pod

- Check tfjob

- Check training log

$ kubectl -n kubeflow logs tf-dist-chief-0
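The checks in step 7 map onto standard kubectl commands. A minimal sketch, assuming the job is named `tf-dist` in the `kubeflow` namespace as the log command implies; `tfjobs` is the CRD's plural resource name, and `-f` streams the log while training runs. The function name, the `NAMESPACE`/`JOB_NAME` variables and the `DRY_RUN` switch are hypothetical.

```shell
#!/bin/sh
# Runs a command, or only prints it when DRY_RUN is set (hypothetical switch).
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Step 7: inspect the pods, the TFJob resource and the chief's training log.
check_training() {
  ns="${NAMESPACE:-kubeflow}"
  job="${JOB_NAME:-tf-dist}"
  run kubectl -n "$ns" get pods                 # one pod per chief/PS/worker replica
  run kubectl -n "$ns" get tfjobs               # list TFJob resources
  run kubectl -n "$ns" describe tfjob "$job"    # conditions and recent events
  run kubectl -n "$ns" logs -f "$job-chief-0"   # follow the chief's training output
}
```

The log command comes last because `-f` keeps following output until the chief pod finishes.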

Reference

https://github.com/Azure/kubeflow-labs/tree/master/7-distributed-tensorflow