Edge AI SDK/AI Framework/JetsonOrin


Latest revision as of 08:55, 30 May 2024


JetPack

NVIDIA JetPack SDK is the most comprehensive solution for building end-to-end accelerated AI applications. JetPack provides a full development environment for hardware-accelerated AI-at-the-edge development on NVIDIA Jetson modules. JetPack includes Jetson Linux with a bootloader, Linux kernel, and Ubuntu desktop environment, plus a complete set of libraries for accelerating GPU computing, multimedia, graphics, and computer vision. It also includes samples, documentation, and developer tools for both the host computer and the developer kit, and supports higher-level SDKs such as DeepStream for streaming video analytics, Isaac for robotics, and Riva for conversational AI.

For more information, refer to https://developer.nvidia.com/embedded/jetpack

JetPack 5.1.2 includes TensorRT 8.5.2 and DeepStream 6.2, among other components.
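To confirm which release is installed on a device, one option is to read the Jetson Linux (L4T) version string. The sketch below assumes the usual L4T file `/etc/nv_tegra_release` and its `# R<major> (release), REVISION: <minor>.<patch>, ...` first line; the function name `l4t_version` is hypothetical. JetPack 5.1.2 corresponds to L4T 35.4.1. If the `nvidia-jetpack` meta-package is installed, `dpkg-query -W nvidia-jetpack` should also report the JetPack version directly.

```shell
# Hypothetical helper: print the L4T release (for example "35.4.1") from the release file.
# The default path /etc/nv_tegra_release is the usual L4T location (assumption); pass another file to override it.
l4t_version() {
  # Turn "# R35 (release), REVISION: 4.1, ..." into "35.4.1"; lines in any other layout print nothing.
  sed -n 's/^# R\([0-9][0-9]*\) (release), REVISION: \([0-9.][0-9.]*\),.*/\1.\2/p' "${1:-/etc/nv_tegra_release}"
}

# On a Jetson:  l4t_version
```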

DeepStream

DeepStream is a complete streaming analytics toolkit based on GStreamer for AI-based multi-sensor processing and video, audio, and image understanding. It is ideal for vision AI developers, software partners, startups, and OEMs building intelligent video analytics (IVA) apps and services. Developers can create stream processing pipelines that incorporate neural networks and other complex processing tasks such as tracking, video encoding/decoding, and video rendering. DeepStream pipelines enable real-time analytics on video, image, and sensor data.

DeepStream’s multi-platform support gives you a faster, easier way to develop vision AI applications and services. You can even deploy them on-premises, on the edge, and in the cloud with the click of a button.

For more information, refer to https://developer.nvidia.com/deepstream-sdk
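As an illustration of such a pipeline, the sketch below prints a `gst-launch-1.0` description that decodes an H.264 file, batches frames with `nvstreammux`, runs TensorRT inference through `nvinfer`, and draws the results with `nvdsosd`. The function name `ds_pipeline`, the 1280x720 mux resolution, and the default sample stream and config paths under `/opt/nvidia/deepstream/deepstream` are assumptions about a stock DeepStream install, not something this SDK ships.

```shell
# Hypothetical helper: print a minimal DeepStream detection pipeline for gst-launch-1.0.
# Default paths assume a stock DeepStream install (assumption); pass a stream and an nvinfer config to override.
ds_pipeline() {
  local stream="${1:-/opt/nvidia/deepstream/deepstream/samples/streams/sample_720p.h264}"
  local config="${2:-/opt/nvidia/deepstream/deepstream/samples/configs/deepstream-app/config_infer_primary.txt}"
  # H.264 file -> parser -> hardware decoder -> batcher (nvstreammux, pad sink_0)
  # -> TensorRT inference (nvinfer) -> color conversion -> bounding-box overlay (nvdsosd) -> discard (fakesink)
  printf '%s\n' "filesrc location=$stream ! h264parse ! nvv4l2decoder ! mux.sink_0 nvstreammux name=mux batch-size=1 width=1280 height=720 ! nvinfer config-file-path=$config ! nvvideoconvert ! nvdsosd ! fakesink"
}

# On a Jetson with DeepStream installed (paths must not contain spaces):
#   gst-launch-1.0 $(ds_pipeline)
```

Replacing `fakesink` with a display sink shows the annotated video instead of discarding it.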

TensorRT

TensorRT is a high-performance deep learning inference runtime for neural networks such as image classification, segmentation, and object detection models. TensorRT is built on CUDA, NVIDIA’s parallel programming model, and lets you optimize inference for models trained in all major deep learning frameworks. It includes a deep learning inference optimizer and a runtime that deliver low latency and high throughput for inference applications.
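A common way to try TensorRT on a Jetson is the `trtexec` command-line tool, which builds an optimized engine from an ONNX model and measures its latency and throughput. The helpers `trt_build` and `trt_bench` below are hypothetical wrappers, and `/usr/src/tensorrt/bin/trtexec` is where JetPack usually installs the tool, which is an assumption to verify on your image.

```shell
# Hypothetical wrappers around trtexec.
# TRTEXEC defaults to /usr/src/tensorrt/bin/trtexec (assumed JetPack location); set TRTEXEC=echo for a dry run.
trt_build() {
  local onnx="$1"
  # Default engine name: model.onnx -> model.engine
  local engine="${2:-${onnx%.onnx}.engine}"
  # --fp16 allows FP16 kernels in addition to FP32; TensorRT picks the faster one per layer.
  "${TRTEXEC:-/usr/src/tensorrt/bin/trtexec}" --onnx="$onnx" --saveEngine="$engine" --fp16
}

trt_bench() {
  # Load a saved engine and run the built-in latency/throughput measurement.
  "${TRTEXEC:-/usr/src/tensorrt/bin/trtexec}" --loadEngine="$1"
}

# On a Jetson:  trt_build yolov3.onnx && trt_bench yolov3.engine
```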

Applications

Edge AI SDK / Vision Application

| Application | Model |
|---|---|
| Object Detection | yolov3 |
| Person Detection | sample_ssd_relu6.uff |
| Face Detection | facenet.etlt / faciallandmarks.etlt |
| Pose Estimation | bodypose2D model |

Edge AI SDK / GenAI Application

| Application | Model |
|---|---|
| Chatbot | Llama-2-7b |

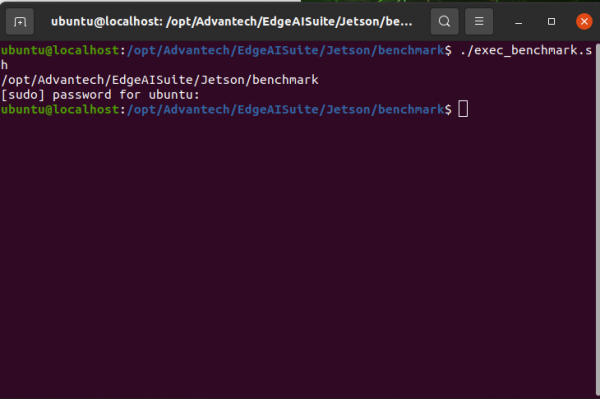

Benchmark

Jetson is used to deploy a wide range of popular DNN models and ML frameworks to the edge with high performance inferencing, for tasks like real-time classification and object detection, pose estimation, semantic segmentation, and natural language processing (NLP).

For more information, refer to https://developer.nvidia.com/embedded/jetson-benchmarks and https://github.com/NVIDIA-AI-IOT/jetson_benchmarks

To run the benchmark on the device:

```shell
cd /opt/Advantech/EdgeAISuite/Jetson/benchmark
./exec_benchmark.sh
```

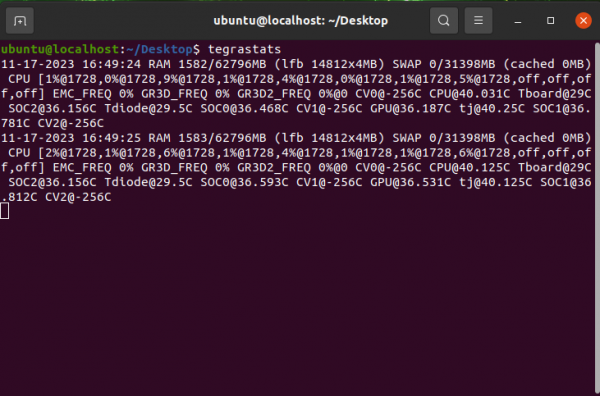
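To keep results from several runs, a small wrapper can save the script's output to a timestamped log. This is a hypothetical helper (`run_benchmark`, `BENCH_DIR`, and `LOG_DIR` are illustrative names); it only assumes the script path used on this page and that `exec_benchmark.sh` writes its results to the terminal.

```shell
# Hypothetical wrapper around the benchmark script described on this page.
# BENCH_DIR defaults to the documented install path; LOG_DIR defaults to the current directory.
run_benchmark() {
  local bench_dir="${BENCH_DIR:-/opt/Advantech/EdgeAISuite/Jetson/benchmark}"
  local log="${LOG_DIR:-$PWD}/benchmark_$(date +%Y%m%d_%H%M%S).log"
  # Run the script from its own directory in a subshell so the caller keeps its working directory,
  # and copy stdout and stderr to the log file while still showing them on the terminal.
  (cd "$bench_dir" && ./exec_benchmark.sh) 2>&1 | tee "$log"
  echo "log saved to $log"
}

# On the device:  run_benchmark
```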

Tegrastats Utility

This SDK provides the tegrastats utility, which reports memory and processor usage for Tegra-based devices. You can find the utility in your package at the following location:

For more information, refer to Link
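tegrastats prints one line of readings per interval, so its output is easy to post-process. The sketch below reduces each line to RAM used (MB), RAM total (MB), RAM percentage, and GPU load. It assumes the `RAM <used>/<total>MB` and `GR3D_FREQ <n>%` fields; the exact field layout differs between JetPack releases, so treat the parser (and the hypothetical name `tegra_summary`) as a starting point rather than a reference format.

```shell
# Hypothetical parser: read tegrastats lines on stdin, print "ram_used_MB ram_total_MB ram_pct gpu_pct".
# Assumes the "RAM used/totalMB" and "GR3D_FREQ n%" fields; prints NA when no GPU field is present.
tegra_summary() {
  awk '{
    used = 0; total = 0; gpu = "NA"
    for (i = 1; i < NF; i++) {
      # "RAM 1000/4000MB": split on "/" and let numeric conversion drop the trailing MB
      if ($i == "RAM") { split($(i + 1), r, "/"); used = r[1] + 0; total = r[2] + 0 }
      # "GR3D_FREQ 50%" or "GR3D_FREQ 50%@[...]": keep the leading percentage
      if ($i == "GR3D_FREQ") gpu = $(i + 1) + 0
    }
    # Skip lines without a RAM field
    if (total > 0) printf "%d %d %.1f %s\n", used, total, 100 * used / total, gpu
  }'
}

# On a Jetson (one sample per second):  tegrastats --interval 1000 | tegra_summary
```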