Difference between revisions of "Edge AI SDK/AI Framework/OpenVINO"

Eric.liang (talk | contribs) (Created page with " = OpenVINO = <span style="font-size:larger;">[https://www.intel.com/content/www/us/en/developer/tools/openvino-toolkit/overview.html OpenVINO]™ toolkit: An open-source sol...") |

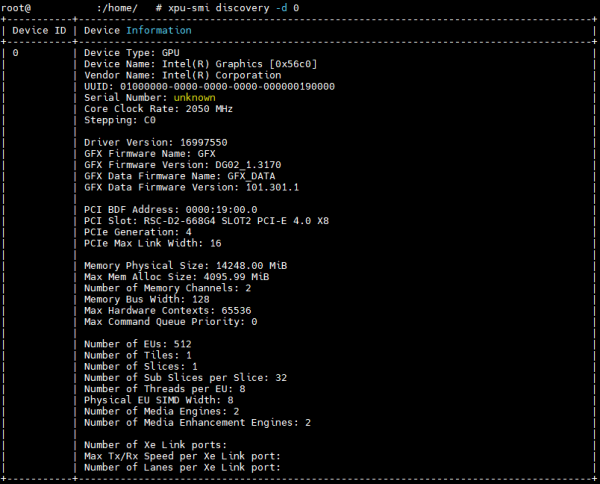

[[File:Xpu-smi.png|600px|Xpu-smi.png]] | [[File:Xpu-smi.png|600px|Xpu-smi.png]] | ||

| − | <span style="font-size:large;">You can refer | + | <span style="font-size:large;">You can refer more info [https://github.com/intel/xpumanager/blob/master/SMI_README.md xpu-smi]</span> |

| − | |||

| − | |||

| − | |||

| − | |||

Latest revision as of 04:29, 31 May 2024


OpenVINO

OpenVINO™ toolkit is an open-source solution for optimizing and deploying AI inference in domains such as computer vision, automatic speech recognition, natural language processing, recommendation systems, and more. With its plug-in architecture, OpenVINO allows developers to write once and deploy anywhere. OpenVINO 2023.0 introduces a range of new features, improvements, and deprecations aimed at enhancing the developer experience.

- Enables the use of models trained with popular frameworks, such as TensorFlow and PyTorch.

- Optimizes inference of deep learning models without model retraining or fine-tuning, for example through post-training quantization.

- Supports heterogeneous execution across Intel hardware, using a common API for the Intel CPU, Intel Integrated Graphics, Intel Discrete Graphics, and other commonly used accelerators.
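Because all devices share a common API, retargeting OpenVINO tools is largely a matter of changing the device string. The following is a minimal sketch of a hypothetical helper, hetero_device, that is not part of OpenVINO. It composes a HETERO device string of the kind accepted by benchmark_app's -d option. In "HETERO:GPU.1,CPU", operations are assigned to GPU.1 first, and any that device does not support fall back to the CPU. The model.xml path is a placeholder.

```shell
#!/usr/bin/env bash
# Sketch: build a HETERO device string for OpenVINO tools such as benchmark_app.
# hetero_device is a hypothetical helper, not an OpenVINO command.
hetero_device() {
  local IFS=,          # "$*" joins the arguments with the first IFS character
  echo "HETERO:$*"
}

# Prefer the dGPU (GPU.1 in the examples on this page), fall back to the CPU.
dev=$(hetero_device GPU.1 CPU)
echo "$dev"
# ./benchmark_app -m model.xml -d "$dev"   # model.xml is a placeholder path
```

The device order matters: the first device listed has the highest priority.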

OpenVINO Runtime SDK

Overall updates

- Proxy & hetero plugins have been migrated to API 2.0, providing enhanced compatibility and stability.

- Symbolic shape inference preview is now available, leading to improved performance for LLMs.

- OpenVINO's graph representation has been upgraded to opset12, introducing a new set of operations that offer enhanced functionality and optimizations.

Applications

Edge AI SDK / Vision Application

| Application | Model |
|---|---|
| Object Detection | yolov3 (tf) |
| Person Detection | person-detection-retail-0013 |
| Face Detection | faceboxes-pytorch |
| Pose Estimation | human-pose-estimation-0001 |

Edge AI SDK / GenAI Application

| Application | Model |
|---|---|
| Chatbot | Llama-2-7b |

Benchmark

Refer to the following link to test inference performance with benchmark_app:

benchmark_app

The OpenVINO benchmark setup consists of a single system with OpenVINO™ and the benchmark application installed. The application measures only the time spent on inference (excluding any pre- or post-processing) and reports throughput in inferences per second (frames per second, FPS).

For more details, refer to: link
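The throughput figure relates the number of completed inferences to the time spent inferring. The following is a simplified sketch of that arithmetic. It assumes batch size 1 and a total duration in milliseconds that covers inference only. The fps helper is hypothetical, and benchmark_app's own calculation may differ, for example between synchronous and asynchronous modes.

```shell
#!/usr/bin/env bash
# Simplified sketch: inferences per second from an iteration count and the
# total inference time in milliseconds (batch size 1 assumed).
# fps is a hypothetical helper, not part of benchmark_app.
fps() {
  local iterations=$1 duration_ms=$2
  awk -v n="$iterations" -v ms="$duration_ms" 'BEGIN { printf "%.2f\n", n * 1000 / ms }'
}

# 1000 inferences completed in 8 s of inference time
fps 1000 8000
```

With these inputs, the sketch prints 125.00.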

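Examples

Each command below benchmarks the same FP16 MobileNet-SSD model on a different device: -m sets the model file, -i the input image, -t the run time in seconds, and -d the target device.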

$ cd /opt/Advantech/EdgeAISuite/Intel_Standard/benchmark

<CPU>

$ ./benchmark_app -m ../model/mobilenet-ssd/FP16/mobilenet-ssd.xml -i car.png -t 8 -d CPU

<iGPU>

$ ./benchmark_app -m ../model/mobilenet-ssd/FP16/mobilenet-ssd.xml -i car.png -t 8 -d GPU.0

<dGPU>

$ ./benchmark_app -m ../model/mobilenet-ssd/FP16/mobilenet-ssd.xml -i car.png -t 8 -d GPU.1
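The three commands above differ only in the -d value, so they can be run in one pass. The following is a minimal sketch that assumes it is run from the benchmark directory shown above. The bench_cmd helper is hypothetical. Device IDs are system-dependent: when an integrated GPU is present, it is GPU.0. GPU.1 is assumed to be the dGPU, as in the examples.

```shell
#!/usr/bin/env bash
# Sketch: run the benchmark above on each device in turn.
# Assumes the current directory is .../Intel_Standard/benchmark.
set -u

MODEL=../model/mobilenet-ssd/FP16/mobilenet-ssd.xml
INPUT=car.png
DURATION=8   # seconds per run (-t)

# Hypothetical helper: print the benchmark_app command line for one device.
bench_cmd() {
  echo "./benchmark_app -m $MODEL -i $INPUT -t $DURATION -d $1"
}

for dev in CPU GPU.0 GPU.1; do
  echo "== $dev =="
  if [ -x ./benchmark_app ]; then
    # Keep going if a device is unavailable (e.g. no dGPU installed).
    ./benchmark_app -m "$MODEL" -i "$INPUT" -t "$DURATION" -d "$dev" \
      || echo "benchmark on $dev failed" >&2
  else
    echo "benchmark_app not found; would run: $(bench_cmd "$dev")"
  fi
done
```

Running all devices with the same model, input, and duration keeps the reported FPS figures comparable.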

Utility

XPU-SMI

Intel® XPU Manager (official link) is a free and open-source solution for local and remote monitoring and management of Intel® Data Center GPUs. It is designed to simplify administration, maximize reliability and uptime, and improve utilization.

Intel XPU System Management Interface (SMI) is a command-line utility for local XPU management.

Key features

Monitoring GPU utilization and health, getting job-level statistics, running comprehensive diagnostics, controlling power, policy management, firmware updating, and more.

Show basic GPU information (sample below):

For more information, refer to xpu-smi.
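The following is a minimal sketch of querying a GPU from a script. It assumes xpu-smi from Intel XPU Manager is installed and on the PATH. The show_gpu_info wrapper is hypothetical. It calls xpu-smi discovery to list devices and xpu-smi stats -d to print statistics for one device ID, which defaults to 0 here.

```shell
#!/usr/bin/env bash
# Sketch: query Intel GPUs with xpu-smi, if it is installed.
# show_gpu_info is a hypothetical wrapper; the device ID defaults to 0.
show_gpu_info() {
  local device_id=${1:-0}
  if ! command -v xpu-smi >/dev/null 2>&1; then
    echo "xpu-smi not found; install Intel XPU Manager first" >&2
    return 1
  fi
  xpu-smi discovery                 # list GPUs and their basic information
  xpu-smi stats -d "$device_id"     # statistics for one GPU
}

show_gpu_info "${1:-0}" || true
```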