Difference between revisions of "Edge AI SDK/AI Framework/Nvidia x86 64"


== <span style="font-size:larger;">Edge AI SDK / Application</span> ==

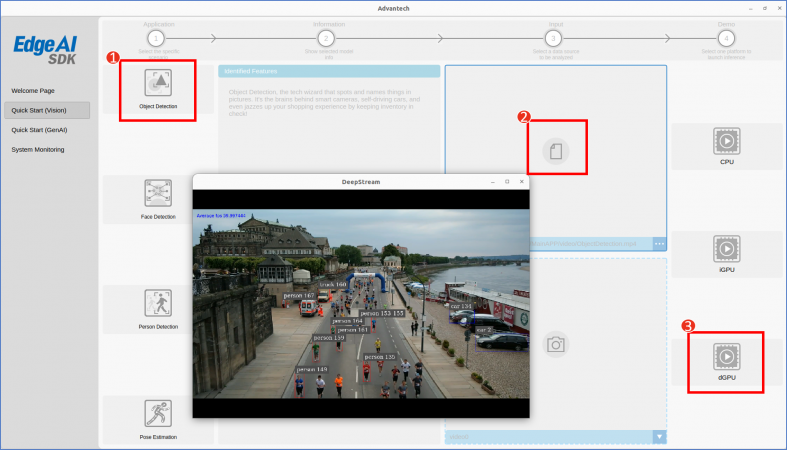

<span style="font-size:larger;">Quick Start (Vision) / Application / Video or WebCam / dGPU</span>

[[File:EdgeAISDK rtxa5000.png|800x450px|EdgeAISDK rtxa5000.png]]

<span style="font-size:larger;"> </span>


|-
| style="width: 154px;" | <span style="font-size:larger;">Object Detection</span>
| style="width: 179px;" |
|-
| style="width: 154px;" | <span style="font-size:larger;">Person Detection</span>
| style="width: 179px;" |
|-
| style="width: 154px;" | <span style="font-size:larger;">Face Detection</span>
| style="width: 179px;" |
|-
| style="width: 154px;" | <span style="font-size:larger;">Pose Estimation</span>
| style="width: 179px;" |
|}

= <span style="font-size:larger;">Benchmark</span> =

<span style="font-size:larger;"><span style="font-size:larger;">To measure the FPS and latency of the RTX-A5000, run the TensorRT ''trtexec'' command inside a Docker container; power draw can be monitored at the same time with ''nvidia-smi''. For more information, refer to the [https://github.com/NVIDIA/TensorRT/tree/main/samples/trtexec ''trtexec'' documentation].</span></span>

<span style="font-size:larger;"><span style="font-size:larger;"> </span></span>


== <span style="font-size:larger;"><span style="font-size:larger;">RTX-A5000 Benchmark</span></span> ==

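<pre># Assumption: NVIDIA NGC TensorRT container; replace <tag> with a release. --gpus requires the NVIDIA Container Toolkit.
docker run --gpus all -it --rm -v "$PWD/models:/workspace/models" -w /workspace nvcr.io/nvidia/tensorrt:<tag>
# Then, inside the container:
trtexec --loadEngine=models/model_fp16.engine --batch=16</pre>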

| − | |||

[[File:EdgeAISDK rtxa5000 trtexec.png|1000x300px|EdgeAISDK rtxa5000 trtexec.png]]

<span style="font-size:larger;"><span style="font-size:larger;"> </span></span>


== <span style="font-size:larger;"><span style="font-size:larger;">Edge AI SDK / Benchmark</span></span> ==

<span style="font-size:larger;"><span style="font-size:larger;"><span style="font-size:larger;">Evaluate RTX-A5000 performance with the Edge AI SDK.</span></span></span>

[[File:EdgeAISDK rtxa5000 benchmark.png|800x500px|EdgeAISDK rtxa5000 benchmark.png]]

| − | |||

| − | |||

| | ||

= <span style="font-size:larger;"><span style="font-size:larger;">Docker run</span></span> =

<span style="font-size:larger;">Use <code>docker run</code> to start a container with access to the RTX A5000 GPU for AI, data science, and graphics workloads; with the NVIDIA Container Toolkit installed, the <code>--gpus all</code> option exposes the GPU to the container. Inside the container you can run inference and collect performance statistics. Use <code>--help</code> to list all available options.</span>

<pre>docker run --help</pre>

<span style="font-size:larger;"><span style="font-size:larger;"><span style="font-size:larger;">Refer to [https://github.com/docker/welcome-to-docker welcome-to-docker].</span></span></span>

<span style="font-size:larger;"><span style="font-size:larger;"><span style="font-size:larger;"> </span></span></span>


== <span style="font-size:larger;"><span style="font-size:larger;"><span style="font-size:larger;">RTX-A5000 Utilization</span></span></span> ==

<pre>nvidia-smi</pre>

<span style="font-size:larger;"><span style="font-size:larger;"><span style="font-size:larger;">[[File:EdgeAISDK rtxa5000 utility.png|800x400px|EdgeAISDK rtxa5000 utility.png]]</span></span></span>

<span style="font-size:larger;"><span style="font-size:larger;"><span style="font-size:larger;"> </span></span></span>


== <span style="font-size:larger;"><span style="font-size:larger;"><span style="font-size:larger;">RTX-A5000 Temperature</span></span></span> ==

<pre>nvidia-smi</pre>

<span style="font-size:larger;"><span style="font-size:larger;"><span style="font-size:larger;">[[File:EdgeAISDK rtxa5000 thermal.png|800x400px|EdgeAISDK rtxa5000 thermal.png]]</span></span></span>

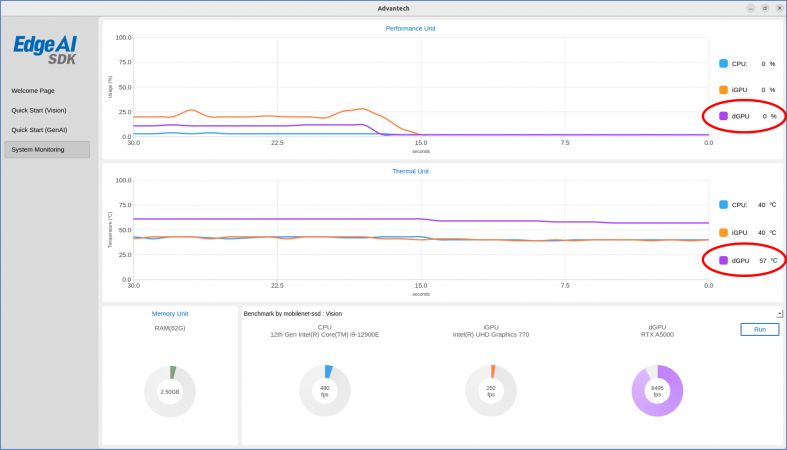

== <span style="font-size:larger;"><span style="font-size:larger;"><span style="font-size:larger;">Edge AI SDK / Monitoring</span></span></span> ==

[[File:EdgeAISDK rtxa5000 UI.png|800x450px|EdgeAISDK rtxa5000 UI.png]]

[[Category:Editor]]

Revision as of 07:20, 3 June 2024


RTX-A5000 AI Suite rc

The NVIDIA RTX A5000 is supported by an advanced software suite designed to accelerate AI, data science, and graphics applications on professional workstations.

Spearhead innovation from your desktop with the NVIDIA RTX™ A5000 graphics card, the perfect balance of power, performance, and reliability to tackle complex workflows. Built on the latest NVIDIA Ampere architecture and featuring 24 gigabytes (GB) of GPU memory, it’s everything designers, engineers, and artists need to realize their visions for the future, today.

Applications

TAPPAS is a reference application software package that streamlines the development and deployment of edge applications demanding high AI performance, reducing development workload and time to market. It provides a user-friendly set of fully operational, GStreamer-based application examples with pipeline elements and pre-trained AI tasks. These examples use advanced deep neural networks to highlight the throughput and power efficiency of Hailo's AI processors, and they demonstrate Hailo's system integration scenarios on predefined software and hardware platforms. TAPPAS can be used for evaluations, reference code, and demos, and it gives users a starting point for integrating with Hailo's runtime software stack and fine-tuning their applications.

Refer to github-TAPPAS

Edge AI SDK / Application

Quick Start (Vision) / Application / Video or WebCam / dGPU

| Application | Model |
|---|---|
| Object Detection | |
| Person Detection | |
| Face Detection | |
| Pose Estimation | |

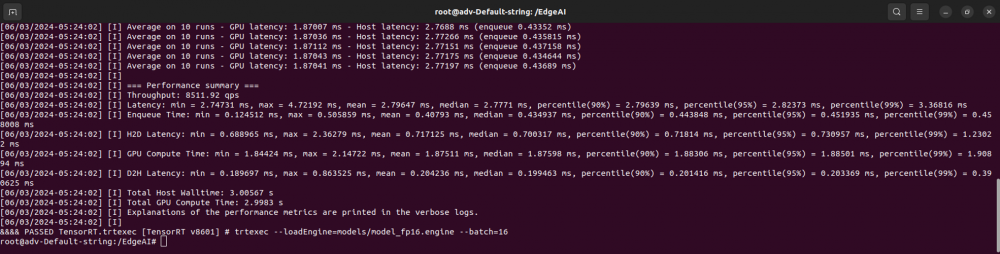

Benchmark

To measure the FPS and latency of the RTX-A5000, run the TensorRT trtexec command inside a Docker container; power draw can be monitored at the same time with nvidia-smi. For more information, refer to the trtexec documentation at this link.

RTX-A5000 Benchmark

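# Assumption: NVIDIA NGC TensorRT container; replace <tag> with a release. --gpus requires the NVIDIA Container Toolkit.
docker run --gpus all -it --rm -v "$PWD/models:/workspace/models" -w /workspace nvcr.io/nvidia/tensorrt:<tag>
# Then, inside the container:
trtexec --loadEngine=models/model_fp16.engine --batch=16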

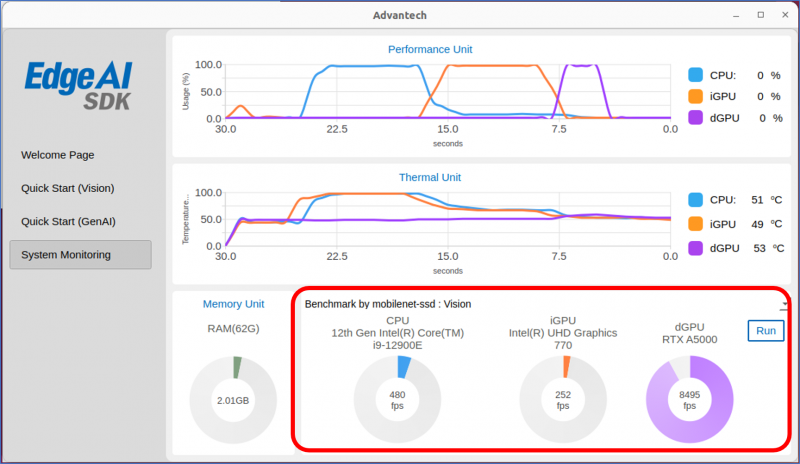
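As a rough worked example of turning the trtexec summary into FPS: trtexec reports throughput in queries per second (qps). Assuming each query is one inference over a full batch (as with `--batch=16`), frames per second is qps multiplied by the batch size. The sketch below also assumes the summary contains a line of the form `Throughput: <value> qps`; the sample line is made up and is not a measured RTX-A5000 result.

```python
import re

# Assumption: trtexec's performance summary contains a line such as
# "[I] Throughput: 100.5 qps". Adjust the pattern if your TensorRT version differs.
THROUGHPUT_RE = re.compile(r"Throughput:\s*([0-9]*\.?[0-9]+)\s*qps")


def parse_throughput_qps(log_text: str) -> float:
    """Return the first throughput value (queries per second) found in a trtexec log."""
    match = THROUGHPUT_RE.search(log_text)
    if match is None:
        raise ValueError("no 'Throughput: ... qps' line found in log")
    return float(match.group(1))


def frames_per_second(throughput_qps: float, batch_size: int) -> float:
    """Convert queries/s to frames/s, assuming one query processes one full batch."""
    if batch_size <= 0:
        raise ValueError("batch_size must be positive")
    return throughput_qps * batch_size


if __name__ == "__main__":
    # Made-up summary line for illustration; not a measured RTX-A5000 result.
    sample_log = "[I] Throughput: 100.5 qps"
    qps = parse_throughput_qps(sample_log)
    print(f"{qps} qps x batch 16 = {frames_per_second(qps, 16)} FPS")
```

In practice you would save the real output (for example `trtexec ... | tee trtexec.log`) and pass the file contents to `parse_throughput_qps`.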

Edge AI SDK / Benchmark

Evaluate RTX-A5000 performance with the Edge AI SDK.

Docker run

Use docker run to start a container with access to the RTX A5000 GPU for AI, data science, and graphics workloads; with the NVIDIA Container Toolkit installed, the --gpus all option exposes the GPU to the container. Inside the container you can run inference and collect performance statistics. Use --help to list all available options.

docker run --help

Refer to welcome-to-docker.
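If you launch the benchmark container from a script, building the `docker run` arguments as a list avoids shell-quoting problems. A minimal sketch, assuming the NGC TensorRT image (`TAG` is a placeholder for a real release tag) and a local `models` directory; `build_docker_run` is a hypothetical helper, not part of the Edge AI SDK.

```python
import os
import shlex
from typing import List


def build_docker_run(image: str, host_model_dir: str, command: List[str],
                     workdir: str = "/workspace") -> List[str]:
    """Build a `docker run` argument list (hypothetical helper).

    `--gpus all` exposes every GPU to the container and requires the
    NVIDIA Container Toolkit on the host. The host model directory is
    mounted at <workdir>/models so relative paths like models/x.engine work.
    """
    return [
        "docker", "run", "--rm",
        "--gpus", "all",
        "-v", f"{host_model_dir}:{workdir}/models",
        "-w", workdir,
        image,
        *command,
    ]


if __name__ == "__main__":
    argv = build_docker_run(
        image="nvcr.io/nvidia/tensorrt:TAG",  # placeholder: use a real release tag
        host_model_dir=os.path.abspath("models"),
        command=["trtexec", "--loadEngine=models/model_fp16.engine", "--batch=16"],
    )
    # Print a shell-safe version of the command for inspection.
    print(shlex.join(argv))
    # To actually launch it: import subprocess; subprocess.run(argv, check=True)
```

Passing the list straight to `subprocess.run` (no `shell=True`) means paths with spaces need no extra quoting.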

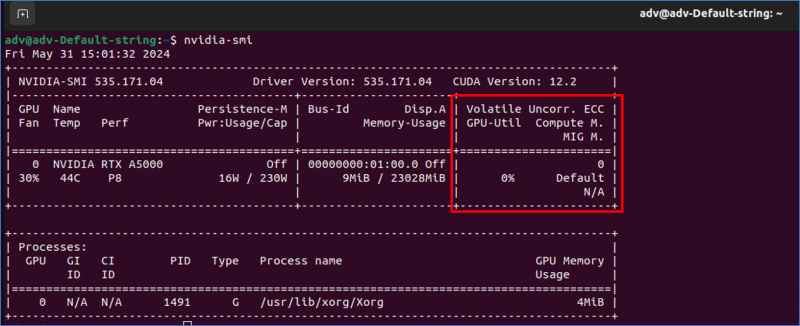

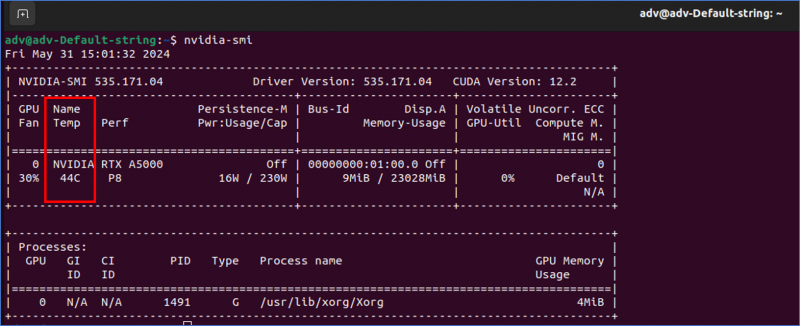

RTX-A5000 Utilization

nvidia-smi
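For logging utilization instead of reading the interactive table, `nvidia-smi` can print selected fields as CSV with `--query-gpu` and `--format=csv,noheader,nounits` (run `nvidia-smi --help-query-gpu` for the full field list). The sketch below queries GPU and memory utilization and parses the result; the function names are hypothetical, and the parser is kept separate from the subprocess call so it can be checked without a GPU.

```python
import csv
import io
import subprocess
from typing import Dict, List

# Standard nvidia-smi query fields (see `nvidia-smi --help-query-gpu`).
FIELDS = ["index", "name", "utilization.gpu", "memory.used", "memory.total"]


def parse_utilization(csv_text: str) -> List[Dict[str, object]]:
    """Parse `--format=csv,noheader,nounits` output into one dict per GPU."""
    gpus = []
    for record in csv.reader(io.StringIO(csv_text), skipinitialspace=True):
        if not record:  # skip blank lines
            continue
        index, name, util, mem_used, mem_total = record
        used, total = float(mem_used), float(mem_total)  # MiB with nounits
        gpus.append({
            "index": int(index),
            "name": name,
            "gpu_util_pct": float(util),
            "mem_used_mib": used,
            "mem_total_mib": total,
            "mem_used_pct": 100.0 * used / total,
        })
    return gpus


def query_utilization() -> List[Dict[str, object]]:
    """Run nvidia-smi once and return parsed utilization for every visible GPU."""
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=" + ",".join(FIELDS),
         "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    )
    return parse_utilization(result.stdout)


if __name__ == "__main__":
    try:
        gpus = query_utilization()
    except (FileNotFoundError, subprocess.CalledProcessError) as exc:
        print(f"nvidia-smi query failed: {exc}")
    else:
        for gpu in gpus:
            print(f"GPU {gpu['index']} ({gpu['name']}): "
                  f"{gpu['gpu_util_pct']:.0f}% busy, "
                  f"{gpu['mem_used_mib']:.0f}/{gpu['mem_total_mib']:.0f} MiB "
                  f"({gpu['mem_used_pct']:.1f}%)")
```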

RTX-A5000 Temperature

nvidia-smi
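Temperature and power draw can be read with `nvidia-smi --query-gpu` using the `temperature.gpu` (°C), `power.draw` and `power.limit` (W) fields. A minimal sketch; the 85 °C warning threshold is an arbitrary placeholder, not an NVIDIA specification, and fields a board does not support (reported as text such as `[N/A]`) are mapped to `None`.

```python
import csv
import io
import subprocess
from typing import Dict, List, Optional

# Standard nvidia-smi query fields: temperature in degrees C, power in W.
FIELDS = ["index", "temperature.gpu", "power.draw", "power.limit"]
TEMP_WARN_C = 85.0  # arbitrary placeholder threshold, not an NVIDIA specification


def _to_float(value: str) -> Optional[float]:
    """Unsupported fields are reported as text such as '[N/A]'; map those to None."""
    try:
        return float(value)
    except ValueError:
        return None


def parse_thermal(csv_text: str) -> List[Dict[str, object]]:
    """Parse `--format=csv,noheader,nounits` output into one dict per GPU."""
    gpus = []
    for record in csv.reader(io.StringIO(csv_text), skipinitialspace=True):
        if not record:  # skip blank lines
            continue
        index, temp, draw, limit = record
        gpus.append({
            "index": int(index),
            "temp_c": _to_float(temp),
            "power_w": _to_float(draw),
            "power_limit_w": _to_float(limit),
        })
    return gpus


def hot_gpus(gpus: List[Dict[str, object]], warn_c: float = TEMP_WARN_C) -> List[int]:
    """Return indices of GPUs whose temperature is at or above warn_c."""
    return [g["index"] for g in gpus
            if g["temp_c"] is not None and g["temp_c"] >= warn_c]


if __name__ == "__main__":
    try:
        out = subprocess.run(
            ["nvidia-smi", "--query-gpu=" + ",".join(FIELDS),
             "--format=csv,noheader,nounits"],
            capture_output=True, text=True, check=True,
        ).stdout
    except (FileNotFoundError, subprocess.CalledProcessError) as exc:
        print(f"nvidia-smi query failed: {exc}")
    else:
        gpus = parse_thermal(out)
        for g in gpus:
            print(f"GPU {g['index']}: {g['temp_c']} C, "
                  f"{g['power_w']} W of {g['power_limit_w']} W limit")
        if hot_gpus(gpus):
            print("Warning: GPUs at or above", TEMP_WARN_C, "C:", hot_gpus(gpus))
```

Running this periodically (for example from cron or a loop with a sleep) gives a simple thermal log alongside a trtexec benchmark.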