Edge AI SDK/AI Framework/Nvidia x86 64


Latest revision as of 09:45, 2 July 2024


DeepStream

DeepStream is a complete streaming analytics toolkit based on GStreamer for AI-based multi-sensor processing, video, audio, and image understanding. It’s ideal for vision AI developers, software partners, startups, and OEMs building IVA apps and services. Developers can now create stream processing pipelines that incorporate neural networks and other complex processing tasks such as tracking, video encoding/decoding, and video rendering. DeepStream pipelines enable real-time analytics on video, image, and sensor data.

DeepStream’s multi-platform support gives you a faster, easier way to develop vision AI applications and services. You can even deploy them on-premises, on the edge, and in the cloud with the click of a button.

For more information, refer to https://developer.nvidia.com/deepstream-sdk

DeepStream 6.4 Prerequisites:

You must install the following components:

- Ubuntu 22.04

- GStreamer 1.20.3

- NVIDIA Driver R535.104.12

- CUDA 12.2

- TensorRT 8.6.1.6
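The prerequisite list above can be checked from a terminal before installing DeepStream. The following is a minimal sketch, not an official installer check: the helper names `version_ge` and `report` are hypothetical, the TensorRT probe assumes TensorRT was installed from a Debian package named `tensorrt`, and the comparison is "at least this version", whereas DeepStream 6.4 may require the exact versions listed.

```shell
#!/usr/bin/env bash
# Sketch: compare installed component versions with the DeepStream 6.4 list.

# version_ge A B: succeeds when version A >= version B (relies on GNU sort -V).
version_ge() {
    [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n1)" = "$2" ]
}

# report NAME FOUND REQUIRED: print one status line per component.
report() {
    if [ -z "$2" ]; then
        echo "$1: not found (need $3)"
    elif version_ge "$2" "$3"; then
        echo "$1: $2 (ok, need $3)"
    else
        echo "$1: $2 (older than $3)"
    fi
}

# Every probe may fail; a missing tool simply yields an empty string.
ubuntu=$(lsb_release -rs 2>/dev/null || true)
gst=$(gst-inspect-1.0 --version 2>/dev/null | awk '/^GStreamer/ {print $2}' || true)
driver=$(nvidia-smi --query-gpu=driver_version --format=csv,noheader 2>/dev/null | head -n1 || true)
cuda=$(nvcc --version 2>/dev/null | sed -n 's/.*release \([0-9.]*\).*/\1/p' || true)
# ASSUMPTION: TensorRT comes from a Debian package called "tensorrt".
trt=$(dpkg-query -W -f='${Version}\n' tensorrt 2>/dev/null | cut -d- -f1 || true)

report "Ubuntu"        "$ubuntu" "22.04"
report "GStreamer"     "$gst"    "1.20.3"
report "NVIDIA driver" "$driver" "535.104.12"
report "CUDA"          "$cuda"   "12.2"
report "TensorRT"      "$trt"    "8.6.1.6"
```

A line ending in "not found" usually means the component is missing or its tool is not on `PATH` (for example, `nvcc` is only present when the CUDA toolkit's `bin` directory has been added to `PATH`).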

TensorRT

TensorRT (https://developer.nvidia.com/tensorrt) is a high-performance deep learning inference runtime for image classification, segmentation, and object detection neural networks. TensorRT is built on CUDA, NVIDIA’s parallel programming model, and enables you to optimize inference for all deep learning frameworks. It includes a deep learning inference optimizer and a runtime that deliver low latency and high throughput for deep learning inference applications.

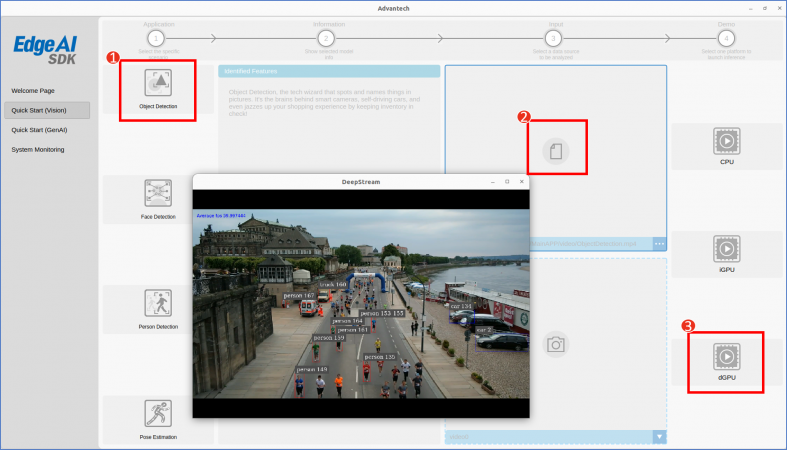

Edge AI SDK / Application

Quick Start (Vision) / Application / Video or WebCam / dGPU

| Application | Model |
| --- | --- |
| Object Detection | yolov3.weights |
| Person Detection | sample_ssd_relu6.uff |
| Face Detection | facenet.etlt |
| Pose Estimation | model.etlt |

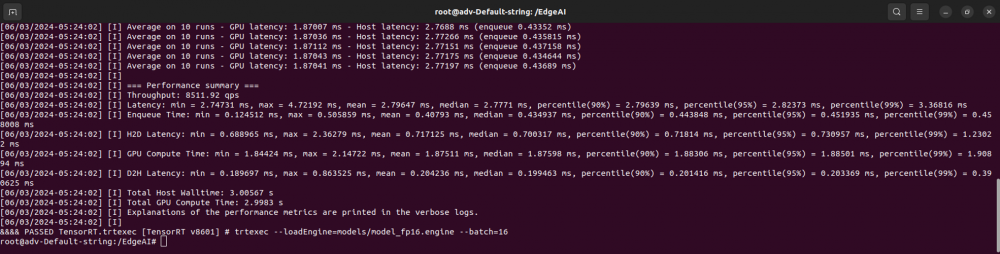

Benchmark

To measure the FPS, power, and latency of the RTX-A5000, you can use the trtexec command. For more information, refer to the trtexec documentation at https://github.com/NVIDIA/TensorRT/tree/main/samples/trtexec.

RTX-A5000 Benchmark

trtexec --loadEngine=models/model_fp16.engine --batch=16

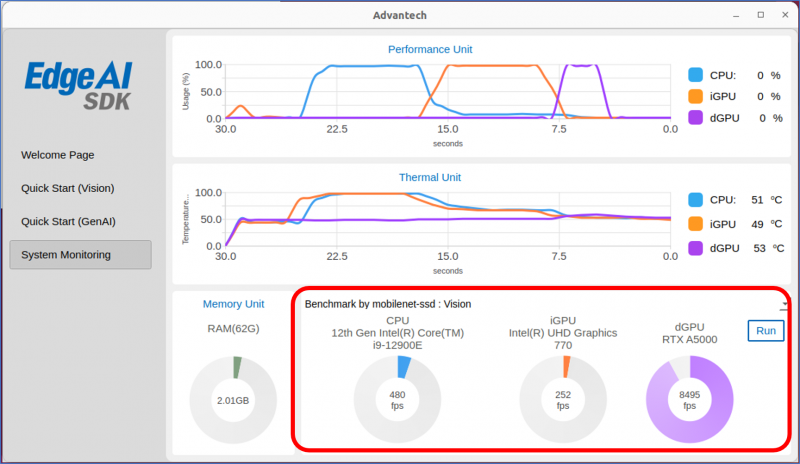

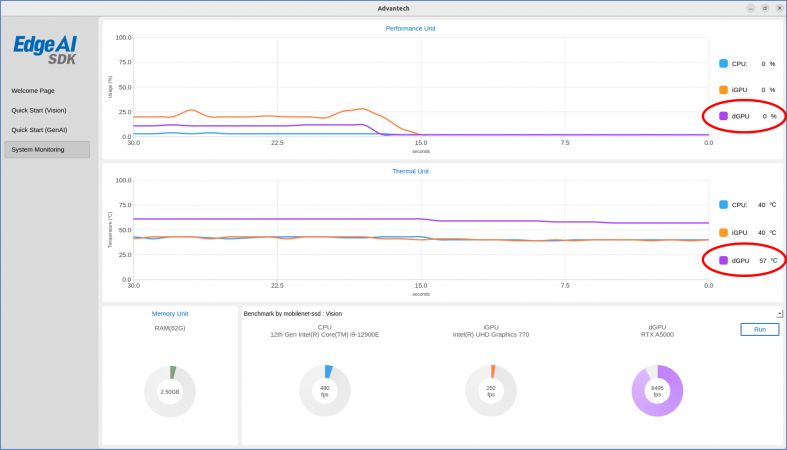
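To turn the benchmark run above into a single FPS figure, the throughput that trtexec reports can be multiplied by the batch size. The sketch below rests on two assumptions that should be verified against your TensorRT version: that the trtexec summary contains a line of the form `Throughput: <number> qps`, and that with an implicit-batch engine each query processes `--batch` frames. The helper names `extract_qps` and `qps_to_fps` are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: derive an approximate FPS value from a trtexec run.

# extract_qps: read trtexec output on stdin and print the number that
# follows "Throughput:".
# ASSUMPTION: the summary has a line like "... Throughput: <number> qps".
extract_qps() {
    awk '{ for (i = 1; i < NF; i++) if ($i == "Throughput:") q = $(i + 1) }
         END { if (q != "") print q }'
}

# qps_to_fps QPS BATCH: frames per second, assuming BATCH frames per query.
qps_to_fps() {
    awk -v q="$1" -v b="$2" 'BEGIN { printf "%.1f\n", q * b }'
}

ENGINE=models/model_fp16.engine
BATCH=16

# Run the benchmark only when trtexec and the engine file are present.
if command -v trtexec >/dev/null 2>&1 && [ -f "$ENGINE" ]; then
    qps=$(trtexec --loadEngine="$ENGINE" --batch="$BATCH" | extract_qps)
    if [ -n "$qps" ]; then
        echo "approx. FPS: $(qps_to_fps "$qps" "$BATCH")"
    fi
fi
```

If nothing is printed, either trtexec or the engine file was not found, or the output did not contain a throughput line in the assumed format.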

Edge AI SDK / Benchmark

Evaluate the RTX-A5000 performance with Edge AI SDK.

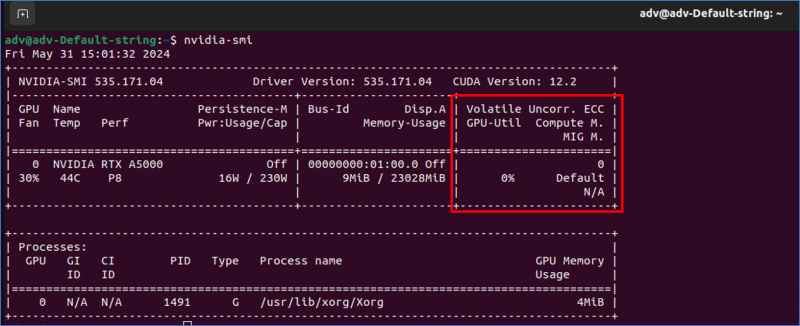

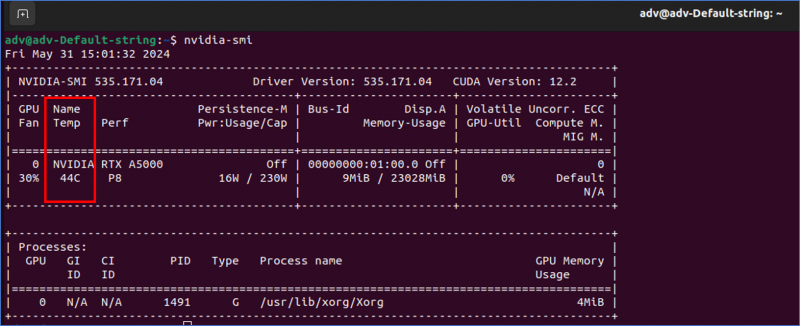

NVIDIA System Management Interface

The NVIDIA System Management Interface (nvidia-smi) is a command-line utility, built on top of the NVIDIA Management Library (NVML), intended to aid in the management and monitoring of NVIDIA GPU devices.

This utility allows administrators to query GPU device state and, with the appropriate privileges, to modify it.

nvidia-smi

RTX-A5000 Utilization

nvidia-smi

RTX-A5000 Temperature

nvidia-smi
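Besides the default summary table, nvidia-smi has a query mode that prints only selected fields, which is convenient for logging utilization, temperature, and power draw. The following is a minimal sketch; the helper name `format_sample` and the output layout are hypothetical, and the set of supported fields can be listed with `nvidia-smi --help-query-gpu`.

```shell
#!/usr/bin/env bash
# Sketch: sample GPU utilization, temperature, and power draw.

# format_sample: turn one CSV line "util, temp, power" into a readable line.
format_sample() {
    awk -F', *' '{ printf "util=%s%% temp=%sC power=%sW\n", $1, $2, $3 }'
}

# Query only when nvidia-smi is installed; one output line per GPU.
if command -v nvidia-smi >/dev/null 2>&1; then
    nvidia-smi --query-gpu=utilization.gpu,temperature.gpu,power.draw \
               --format=csv,noheader,nounits | format_sample
fi
```

Adding `-l 1` to the nvidia-smi call repeats the query every second, which makes it possible to watch these values while a benchmark is running in another terminal.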