Difference between revisions of "Edge AI SDK/AI Framework/Hailo"

Revision as of 07:34, 13 May 2025

Hailo-in-Docker

Hailo AI Suite

Hailo offers breakthrough AI accelerators and vision processors uniquely designed to accelerate embedded deep learning applications on edge devices.

Hailo devices are accompanied by a comprehensive AI SDK that enables the compilation of deep learning models and the implementation of AI applications in production environments. The model build environment integrates with common ML frameworks, so it fits into existing development ecosystems. The runtime environment supports integration and deployment on host processors, such as x86- and ARM-based products, when a Hailo-8 is used, and on the Hailo-15 vision processor.

Applications

TAPPAS is a reference application software package designed to streamline the development and deployment of edge applications that demand high AI performance, helping users shorten time to market by reducing the development workload. It contains a set of fully operational application examples based on GStreamer, featuring pipeline elements and pre-trained AI tasks. These examples use advanced deep neural networks and highlight the throughput and power efficiency of Hailo's AI processors. TAPPAS also demonstrates Hailo's system integration scenarios on predefined software and hardware platforms, so it can be used for evaluations, reference code, and demos. It simplifies integration with Hailo's runtime software stack and gives users a starting point for fine-tuning their own applications.

Refer to github-TAPPAS

How to

## Docker Pull

$ docker pull advigw/eas-x86-hailo8:ubuntu22.04-1.0.0

## Docker Run

$ docker run --rm --privileged --network host --name adv_hailo --ipc=host --device /dev/dri:/dev/dri -v /tmp/hailo_docker.xauth:/home/hailo/.Xauthority -v /tmp/.X11-unix/:/tmp/.X11-unix/ -v /dev:/dev -v /lib/firmware:/lib/firmware --group-add 44 -e DISPLAY=$DISPLAY -e XDG_RUNTIME_DIR=$XDG_RUNTIME_DIR -e hailort_enable_service=yes -it advigw/eas-x86-hailo8:ubuntu22.04-1.0.0 /bin/bash
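The long `docker run` line above can be easier to review when it is split across lines. The following is a minimal sketch that wraps the same flags in a shell function. The function name `run_hailo_container` and the `DOCKER` override variable are hypothetical helpers introduced here for illustration (the override allows a dry run with `echo`); the flags and image tag are copied from the command above.

```shell
# Image tag taken from the "Docker Pull" step above.
IMAGE="advigw/eas-x86-hailo8:ubuntu22.04-1.0.0"

# Hypothetical wrapper around the documented "docker run" command.
# Flag groups, in order:
#   container lifecycle and host access (--rm, --privileged, host network/IPC)
#   GPU/display passthrough (/dev/dri, X11 socket, Xauthority file)
#   Hailo device nodes and firmware (/dev, /lib/firmware)
#   supplementary group 44 (commonly "video" on Ubuntu; an assumption about the host)
#   environment passed into the container
run_hailo_container() {
    "${DOCKER:-docker}" run --rm --privileged --network host --ipc=host \
        --name adv_hailo \
        --device /dev/dri:/dev/dri \
        -v /tmp/hailo_docker.xauth:/home/hailo/.Xauthority \
        -v /tmp/.X11-unix/:/tmp/.X11-unix/ \
        -v /dev:/dev \
        -v /lib/firmware:/lib/firmware \
        --group-add 44 \
        -e "DISPLAY=${DISPLAY:-}" \
        -e "XDG_RUNTIME_DIR=${XDG_RUNTIME_DIR:-}" \
        -e hailort_enable_service=yes \
        -it "$IMAGE" /bin/bash
}

# Dry run (prints the command instead of starting a container):
#   DOCKER=echo; run_hailo_container
```

Setting `DOCKER=echo` before calling the function prints the assembled arguments, which is a cheap way to check the volume and environment settings before starting the container.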

Hailo-in-Host

HailoRT

HailoRT is a production-grade, lightweight, scalable runtime that provides a robust library with intuitive APIs for optimized performance. It enables developers to build fast AI application pipelines for production, and it is also suitable for evaluation and prototyping. HailoRT runs on the Hailo AI Vision Processor itself or, when a Hailo AI Accelerator is used, on the host processor, where it enables high-throughput inference with one or more Hailo devices. HailoRT is available as open-source software on Hailo's GitHub.

Refer to github-hailort (https://github.com/hailo-ai/hailort/tree/master) and hailort (https://hailo.ai/products/hailo-software/hailo-ai-software-suite/#sw-hailort)

Application

Enter the Hailo Docker container to launch and run AI applications within a preconfigured environment.

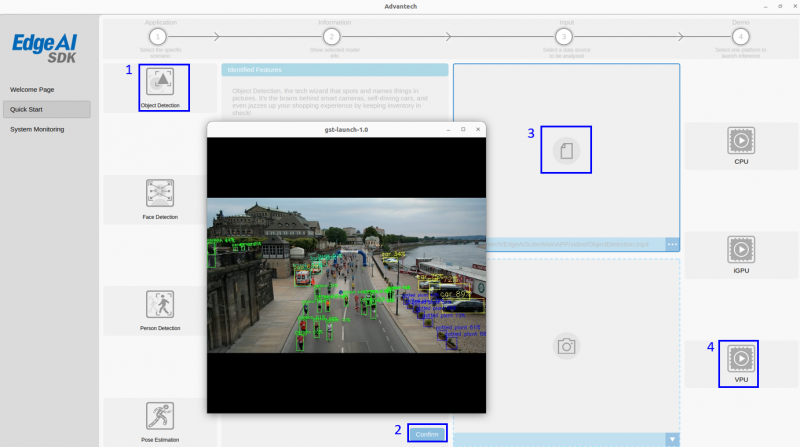

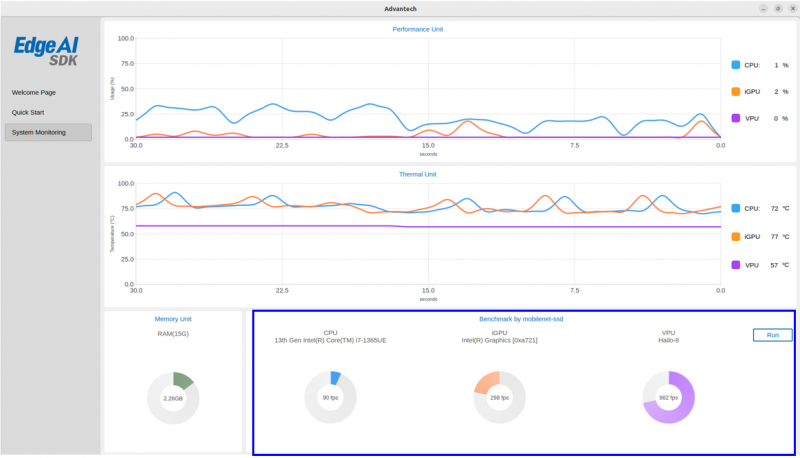

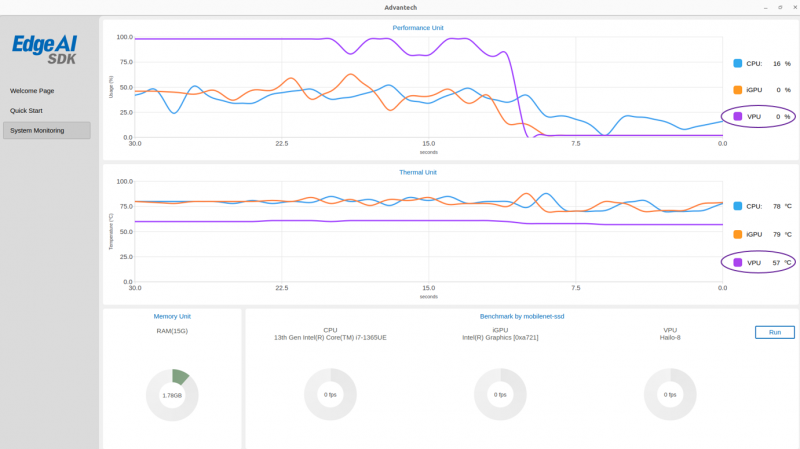

Edge AI SDK / Application

Quick Start / Application / Video or WebCam / VPU

| Application | Model | FPS (EAS-1200, 1 chipset) | FPS (EAS-3300, 2 chipsets) |
|---|---|---|---|
| Object Detection | yolov8m.hef | 32 | 118 |
| Person Detection | yolov5s_personface_reid.hef / repvgg_a0_person_reid_2048.hef | 167 | 276 |
| Face Detection | scrfd_10g.hef / arcface_mobilefacenet_v1.hef | 78 | 157 |
| Pose Estimation | centerpose_regnetx_1.6gf_fpn.hef | 46 | 143 |

| Application | Model | FPS (EAS-1200, 1 chipset) | FPS (EAS-3300, 2 chipsets) |
|---|---|---|---|
| benchmark | mobilenet-ssd | 1009 | 2008 |

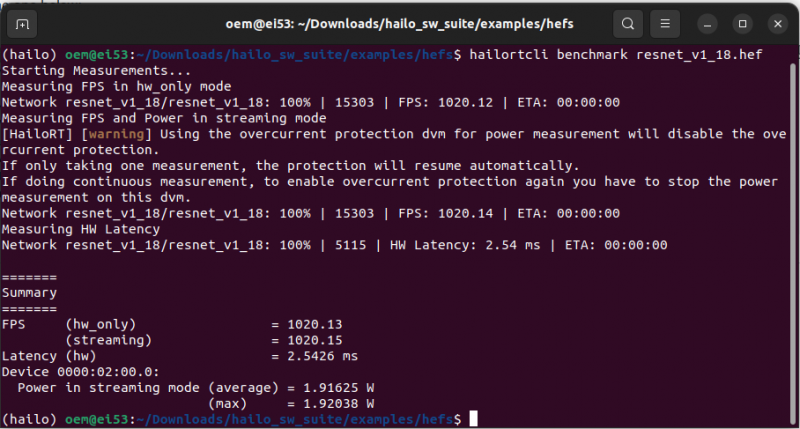

Benchmark

To measure the FPS, power and latency of the Hailo Model Zoo networks, you can use the HailoRT command line interface. For more information, refer to the HailoRT documentation.

Hailo-8 Benchmark (Docker)

Command: hailortcli benchmark <Hailo model .hef file>

## Into hailo docker container
hailortcli benchmark <hailo_model.hef>
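When several models need to be compared, the benchmark command can be run in a loop. This is a minimal sketch, assuming the `.hef` files sit together in one directory inside the container. The function name `benchmark_all` and the `HAILORTCLI` override variable are hypothetical helpers introduced here; only `hailortcli benchmark <file>` comes from the text above.

```shell
# Run "hailortcli benchmark" on every .hef file in a directory.
# benchmark_all and HAILORTCLI are illustrative names, not part of HailoRT.
benchmark_all() {
    hef_dir="${1:-.}"                      # directory to scan, defaults to the current one
    for hef in "$hef_dir"/*.hef; do
        [ -e "$hef" ] || continue          # skip the literal pattern when nothing matches
        echo "== $hef =="
        "${HAILORTCLI:-hailortcli}" benchmark "$hef"
    done
}

# Example (the path is a placeholder):
#   benchmark_all /path/to/hef_models
```

Each model is benchmarked one after another, so the results are not affected by two networks competing for the same device.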

Edge AI SDK / Benchmark

Evaluate Hailo-8 performance with the Edge AI SDK.

HailoRT CLI

HailoRT CLI is a command line application used to control the Hailo device, run inferences, collect statistics and device events, and more. Run it with "--help" to list the available commands and options.

hailortcli --help

Refer to github-hailort (https://github.com/hailo-ai/hailort/tree/master)

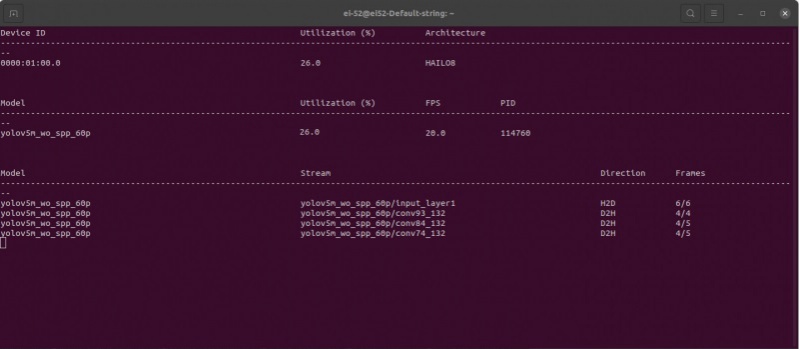

Hailo-8 Utilization (Docker)

## Into hailo docker container
export HAILO_MONITOR=1
hailortcli monitor
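The monitor only has something to display while an inference workload is running. As far as the HailoRT documentation describes it, `HAILO_MONITOR=1` needs to be set in the environment of the process that runs inference, with `hailortcli monitor` started from a second terminal. The sketch below assumes that behavior. `with_hailo_monitor` is a hypothetical helper name, and whether a given workload shows up may depend on how it uses the HailoRT scheduler.

```shell
# Run any inference command with HAILO_MONITOR=1 set for that process only.
# with_hailo_monitor is an illustrative helper, not part of HailoRT.
with_hailo_monitor() {
    env HAILO_MONITOR=1 "$@"
}

# Terminal 1 (inside the container), with your own inference command;
# the model path is a placeholder:
#   with_hailo_monitor hailortcli run /path/to/model.hef
#
# Terminal 2 (on the host), attaching to the running container, which the
# "Docker Run" step above names adv_hailo:
#   docker exec -it adv_hailo hailortcli monitor
```

Using `env` keeps the variable scoped to the wrapped command, so other shells in the container are unaffected.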

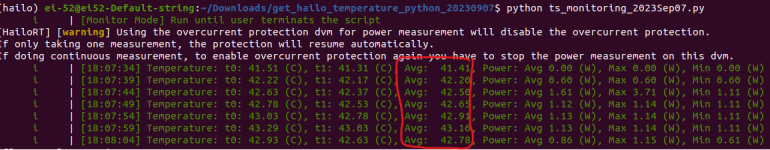

Hailo-8 Temperature (Host)

python ts_monitoring_2023Sep07.py