Edge AI SDK/Q&A


Latest revision as of 09:36, 22 April 2024


Edge AI

How to convert an AI model to run on an edge inference runtime?

Converting an AI model to run on an edge inference runtime is a crucial step in deploying complex AI applications on resource-constrained edge devices. This answer covers three platforms: Intel OpenVINO, Nvidia, and Hailo.

- Intel OpenVINO platform. OpenVINO is a deep learning inference optimizer and runtime library developed by Intel for Intel hardware (CPUs, GPUs, FPGAs, and VPUs). A key component of OpenVINO is the Model Optimizer, which converts models from frameworks and formats such as TensorFlow, Caffe, and ONNX into OpenVINO's IR (Intermediate Representation) format. The IR model can then run on any Intel hardware that OpenVINO supports. For more information about OpenVINO, please refer to Intel's official website: https://software.intel.com/content/www/us/en/develop/tools/openvino-toolkit.html

- Nvidia platform. Nvidia offers TensorRT, a high-performance library for optimizing and running deep learning models. TensorRT converts trained models (commonly exported to ONNX from frameworks such as TensorFlow and Keras) into an optimized engine that runs efficiently on Nvidia GPUs. This is particularly useful for running AI models on edge devices such as Nvidia's Jetson series. For more information about TensorRT, please refer to Nvidia's official website: https://developer.nvidia.com/tensorrt

- Hailo platform. Hailo is a company specializing in edge AI processors; its Hailo-8 deep learning processor is designed to deliver high performance and efficiency on edge devices. Hailo offers an SDK that converts TensorFlow and ONNX models into a format that runs on the Hailo-8 processor, so developers can deploy their AI models to Hailo-based edge devices. For more information about Hailo, please refer to Hailo's official website: https://hailo.ai/

In general, converting an AI model to run on an edge inference runtime involves model optimization followed by conversion. Each platform provides its own tools and methods to ensure the model runs efficiently on its hardware.
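The shell function below is a minimal sketch of the conversion step. It calls each vendor's command-line converter on an ONNX model and assumes the toolkits are installed and on PATH: `mo` (the OpenVINO Model Optimizer from the openvino-dev package; newer OpenVINO releases replace it with `ovc`), `trtexec` (shipped with TensorRT), and `hailo` (the Hailo Dataflow Compiler CLI). The function name `convert_model`, the `ir` output directory, and the `DRY_RUN` switch, which prints each command instead of running it, are illustrative and are not part of the Edge AI SDK.

```shell
#!/bin/sh
# Sketch: convert an ONNX model with a vendor command-line tool.
# Assumes the vendor toolkit is installed and on PATH.
# Set DRY_RUN=1 to print the command instead of running it.

convert_model() {
    platform=$1 model=$2
    if [ -z "$model" ]; then
        echo "usage: convert_model openvino|tensorrt|hailo MODEL.onnx" >&2
        return 2
    fi
    base=${model%.onnx}
    case $platform in
        openvino)
            # Model Optimizer: writes <name>.xml and <name>.bin (the IR) into ir/
            ${DRY_RUN:+echo} mo --input_model "$model" --output_dir ir ;;
        tensorrt)
            # TensorRT: builds a serialized engine for the local GPU
            ${DRY_RUN:+echo} trtexec --onnx="$model" --saveEngine="$base.engine" ;;
        hailo)
            # Hailo Dataflow Compiler, first step only (ONNX -> .har).
            # Quantization (hailo optimize) and compilation to a .hef
            # (hailo compiler) follow and need a calibration set.
            ${DRY_RUN:+echo} hailo parser onnx "$model" ;;
        *)
            echo "unknown platform: $platform" >&2
            return 2 ;;
    esac
}
```

For example, `DRY_RUN=1 convert_model tensorrt yolo.onnx` only prints the `trtexec` command, and the same call without `DRY_RUN` runs it. Precision options such as FP16 or INT8 are left at each tool's defaults in this sketch.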

How to evaluate the AI performance of an Edge AI platform or AI accelerator?

When evaluating AI performance, several factors need to be considered: the model's inference speed (how quickly it makes predictions, typically measured as latency per inference or as throughput in frames per second) and the behavior of the hardware (CPU and GPU utilization, memory usage, and power consumption).

The Edge AI SDK benchmark enables developers to comprehensively evaluate the performance of their AI applications on edge devices.

Through the Edge AI SDK, developers can easily establish an Edge AI environment, allowing them to quickly begin evaluating their AI applications on Advantech's Edge AI platforms with various AI accelerators.
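Tools such as OpenVINO's `benchmark_app` and TensorRT's `trtexec` report the average latency per inference. The functions below are a rough sketch that converts that latency into frames per second and checks the result against a target frame rate. They assume each stream is processed sequentially, with no batching or asynchronous pipelining (both of which can raise throughput). The function names are hypothetical helpers, not Edge AI SDK commands.

```shell
#!/bin/sh
# Sketch: convert a measured latency into throughput and compare it with a
# target frame rate. Assumes sequential (non-pipelined) inference per stream.

# Print frames per second for an average latency given in milliseconds.
fps_from_latency_ms() {
    awk -v l="$1" 'BEGIN { if (l <= 0) exit 1; printf "%.1f\n", 1000 / l }'
}

# Succeed if LATENCY_MS can serve STREAMS streams (default 1) at TARGET_FPS.
meets_target() {
    awk -v l="$1" -v t="$2" -v s="${3:-1}" \
        'BEGIN { if (l > 0 && 1000 / l >= t * s) exit 0; exit 1 }'
}
```

For example, `fps_from_latency_ms 20` prints `50.0`, and `meets_target 20 30 2` fails because 50 FPS cannot serve two 30 FPS streams.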

How to Estimate If AI Computing Power is Enough?

Refer to this video

How to Know AI Computing Power on Various Platforms?

Refer to this video

How to Deploy Your AI Remotely?

Refer to this video

AI Model

Where can I find AI models for my AI platform?

An AI Model Zoo is a collection of pre-trained deep learning models that developers can use to accelerate the development of their AI applications. Below is information about the AI Model Zoos for Intel's OpenVINO, Nvidia, and Hailo platforms.

- Intel's OpenVINO provides a pre-trained model set called the "Open Model Zoo." It contains models for a variety of computer vision tasks, such as object detection, face recognition, and human pose estimation. You can find these models in OpenVINO's official GitHub repository: https://github.com/openvinotoolkit/open_model_zoo

- Nvidia's AI Model Zoo. Nvidia provides a model library called "Nvidia NGC," which contains various pre-trained models optimized for Nvidia hardware. These models can be used for various AI tasks, such as image classification, object detection, speech recognition, and so on. You can find these models on the official Nvidia NGC website: https://ngc.nvidia.com/catalog/models

- Hailo's AI Model Zoo. Hailo provides a model library that contains various pre-trained models specifically optimized for the Hailo-8 deep learning processor. These models can be used for various edge AI applications, such as object detection, face recognition, speech recognition, and so on. You can find these models on Hailo's official website: https://hailo.ai/products/hailo-software/hailo-ai-software-suite/#sw-modelzoo

In summary, no matter which platform you are developing AI applications on, you can find a suitable AI Model Zoo to accelerate your AI application development.
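The functions below are a minimal sketch for fetching a model from these zoos on the command line. They assume two tools are on PATH: Intel's `omz_downloader` (installed with the openvino-dev Python package) and Nvidia's NGC CLI (`ngc`). `face-detection-0200` is only an example model name, and `org/model:version` stands in for a real NGC model reference. The function names and the `DRY_RUN` switch, which prints each command instead of running it, are hypothetical. Hailo's Model Zoo is not covered here.

```shell
#!/bin/sh
# Sketch: download pre-trained models from a model zoo.
# Set DRY_RUN=1 to print each command instead of running it.

# Intel Open Model Zoo: $1 is a model name such as face-detection-0200,
# $2 an optional output directory (default: models).
fetch_omz_model() {
    ${DRY_RUN:+echo} omz_downloader --name "$1" --output_dir "${2:-models}"
}

# Nvidia NGC: $1 is a full model reference, org/[team/]model:version.
fetch_ngc_model() {
    ${DRY_RUN:+echo} ngc registry model download-version "$1"
}
```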

System or Devices

EAI-3100 issue

Issue: Ubuntu fails to start after installing the EAI-3100/EAI-2100 driver.

Why:

In some cases, certain packages on the Ubuntu system have been updated (e.g., libegl1-mesa-dev, mesa-va-drivers). Because these updated versions conflict with the EAI-3100/EAI-2100 driver, Ubuntu may fail to start after the driver is installed.
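Before patching, it can help to confirm that these packages were actually upgraded. apt records each package transaction in /var/log/apt/history.log as a `Start-Date:` line followed by lines such as `Upgrade: pkg:arch (old, new), ...`. The sketch below prints the start date of every transaction that upgraded a given package. The function name `upgraded_on` is hypothetical, and rotated logs (history.log.1.gz and older) are not searched.

```shell
#!/bin/sh
# Sketch: list when apt upgraded a package, using apt's history log.
# $1 is the log (usually /var/log/apt/history.log), $2 the package name.
# Rotated .gz logs are not read.

upgraded_on() {
    awk -v pkg="$2" '
        /^Start-Date:/ { d = substr($0, 13) }   # remember the transaction date
        /^Upgrade:/ && index($0, " " pkg ":") { print d }
    ' "$1"
}
```

For example, `upgraded_on /var/log/apt/history.log mesa-va-drivers` lists the upgrade dates, and `dpkg-query -W libegl1-mesa-dev mesa-va-drivers` shows the versions currently installed.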

How To:

Preparation:

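Prepare a USB storage device containing EAI_3100_2100_Patch-1.0.0-Ubuntu_22.04-x64.run.

- Download: EAI_3100_2100_Patch-1.0.0-Ubuntu_22.04-x64 (https://edgeaisuite.blob.core.windows.net/patch/EAI_3100_2100_Patch-1.0.0-Ubuntu_22.04-x64.run)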

Step 1 : Enter Ubuntu Recovery mode

After BIOS initialization, press the "ESC" key to enter recovery mode.

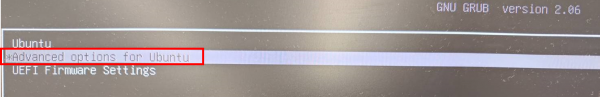

Select "Advanced options for Ubuntu".

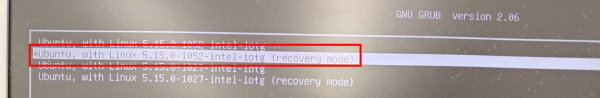

Next, choose the highest version and select "recovery mode" to proceed.

After into the recovery mode follow the steps below.

- First,select the "network" option and choose "Yes" when prompted by the system to continue.

- Second,select the "root" option to enter.

Step 2 : Mount the USB

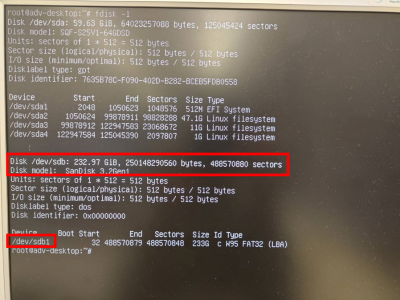

Enter "fdisk -l" to find the location of the USB drive.

For example, the usb storage location is <u>/dev/sdb1 (as shown in the second red box in the image).

Enter the following commands to mount the USB:

$ mkdir -p /mnt/usb

$ mount /dev/sdb1 /mnt/usb/

$ cd /mnt/usb

Step 3 : Patch

Enter the following commands:

$ chmod +x EAI_3100_2100_Patch-1.0.0-Ubuntu_22.04-x64.run

$ ./EAI_3100_2100_Patch-1.0.0-Ubuntu_22.04-x64.run

$ reboot
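Steps 2 and 3 can also be run as a single function from the recovery root shell. Unlike the manual sequence, it stops at the first failing command. This is a minimal sketch that assumes three things: the USB partition has already been identified with fdisk -l, the patch file sits in the root of the USB drive, and the installer exits with a non-zero status on failure. The function name `apply_patch` and the `DRY_RUN` switch, which prints each command instead of running it, are hypothetical.

```shell
#!/bin/sh
# Sketch of Steps 2-3: mount the USB drive, run the patch, reboot.
# Set DRY_RUN=1 to print each command instead of running it.

PATCH=EAI_3100_2100_Patch-1.0.0-Ubuntu_22.04-x64.run
MNT=/mnt/usb

apply_patch() {
    if [ -z "$1" ]; then
        echo "usage: apply_patch /dev/sdXN (see fdisk -l)" >&2
        return 2
    fi
    ${DRY_RUN:+echo} mkdir -p "$MNT" || return 1
    ${DRY_RUN:+echo} mount "$1" "$MNT" || return 1
    if [ -n "$DRY_RUN" ]; then
        echo "chmod +x $MNT/$PATCH"
        echo "cd $MNT && ./$PATCH"
    else
        # Stop early if the patch file is not on the USB drive.
        [ -f "$MNT/$PATCH" ] || { echo "missing $MNT/$PATCH" >&2; return 1; }
        chmod +x "$MNT/$PATCH" || return 1
        # Assumes the installer exits non-zero on failure.
        (cd "$MNT" && "./$PATCH") || return 1
    fi
    ${DRY_RUN:+echo} reboot
}
```

For example, `DRY_RUN=1 apply_patch /dev/sdb1` prints the five commands without running them, and `apply_patch /dev/sdb1` performs the patch and reboots.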