RK Platform NPU SDK

Revision as of 08:57, 8 August 2023

Preface

NPU Introduction

RK3568

- Neural network acceleration engine with processing performance up to 0.8 TOPS

- Support integer 4, integer 8, integer 16, float 16, Bfloat 16 and tf32 operation

- Support deep learning frameworks: TensorFlow, Caffe, TensorFlow Lite, PyTorch, ONNX, Android NN, etc.

- One isolated voltage domain to support DVFS

RK3588

- Neural network acceleration engine with processing performance up to 6 TOPS

- Includes three NPU cores, which can work together as three cores, as two cores, or independently

- Support integer 4, integer 8, integer 16, float 16, Bfloat 16 and tf32 operation

- Embedded 384KBx3 internal buffer

- Supports multiple tasks and multiple scenarios in parallel

- Support deep learning frameworks: TensorFlow, Caffe, TensorFlow Lite, PyTorch, ONNX, Android NN, etc.

- One isolated voltage domain to support DVFS

RKNN SDK

The RKNN SDK (Password: a887) includes two parts:

- rknn-toolkit2

- rknpu2

├── rknn-toolkit2
│   ├── doc
│   ├── examples
│   ├── packages
│   └── rknn_toolkit_lite2
└── rknpu2
    ├── doc
    ├── examples
    └── runtime

rknpu2

'rknpu2' includes documents (rknpu2/doc) and examples (rknpu2/examples) to help you quickly develop AI applications that use RKNN models (*.rknn).

Other models (e.g. Caffe, TensorFlow) can be converted to RKNN models with 'rknn-toolkit2'.

The RKNN API library file librknnrt.so and the header file rknn_api.h can be found in rknpu2/runtime.

Released BSPs and images already include the NPU driver and runtime libraries.

Here are two examples built into the released images:

1. rknn_ssd_demo

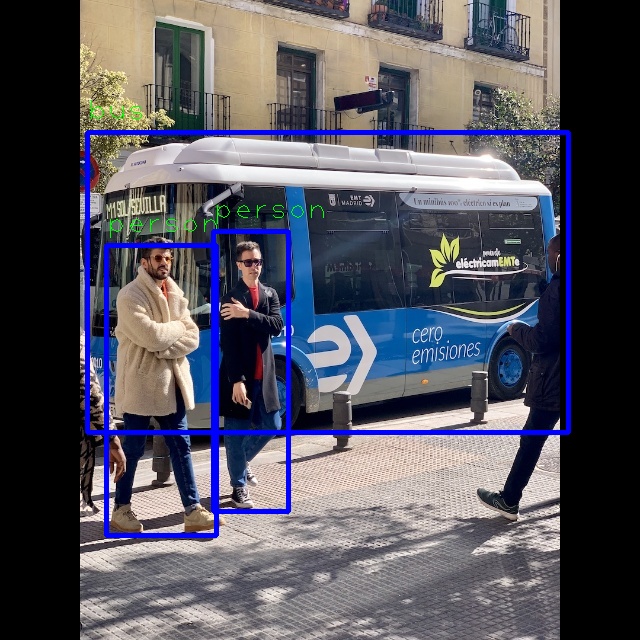

cd /tools/test/adv/npu2/rknn_ssd_demo
./rknn_ssd_demo model/ssd_inception_v2.rknn model/bus.jpg
resize 640 640 to 300 300
Loading model ...
rknn_init ...
model input num: 1, output num: 2
input tensors:
  index=0, name=Preprocessor/sub:0, n_dims=4, dims=[1, 300, 300, 3], n_elems=270000, size=270000, fmt=NHWC, type=INT8, qnt_type=AFFINE, zp=0, scale=0.007843
output tensors:
  index=0, name=concat:0, n_dims=4, dims=[1, 1917, 1, 4], n_elems=7668, size=7668, fmt=NCHW, type=INT8, qnt_type=AFFINE, zp=50, scale=0.090787
  index=1, name=concat_1:0, n_dims=4, dims=[1, 1917, 91, 1], n_elems=174447, size=174447, fmt=NCHW, type=INT8, qnt_type=AFFINE, zp=58, scale=0.140090
rknn_run
loadLabelName
ssd - loadLabelName ./model/coco_labels_list.txt
loadBoxPriors
person @ (106 245 216 535) 0.994422
bus @ (87 132 568 432) 0.991533
person @ (213 231 288 511) 0.843047
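As a quick sanity check on the log above, the n_elems value of each tensor is the product of its dims, and for INT8 tensors the size in bytes equals n_elems. The following is a minimal sketch that uses only the values printed in the log. The helper name `element_count`, the constant `BYTES_PER_INT8`, and the rule of reading only the first n_dims entries of dims are illustrative assumptions, not part of the RKNN API.

```python
from math import prod

# Tensor attributes copied from the rknn_ssd_demo log.
TENSORS = {
    "Preprocessor/sub:0": {"n_dims": 4, "dims": [1, 300, 300, 3], "n_elems": 270000},
    "concat:0": {"n_dims": 4, "dims": [1, 1917, 1, 4], "n_elems": 7668},
    "concat_1:0": {"n_dims": 4, "dims": [1, 1917, 91, 1], "n_elems": 174447},
}

BYTES_PER_INT8 = 1  # assumption: one byte per INT8 element


def element_count(dims, n_dims):
    """Multiply the first n_dims entries of dims (assumed to be the valid ones)."""
    return prod(dims[:n_dims])


for name, attr in TENSORS.items():
    count = element_count(attr["dims"], attr["n_dims"])
    size_bytes = count * BYTES_PER_INT8
    matches = count == attr["n_elems"]
    print(f"{name}: n_elems={count}, size={size_bytes}, matches log: {matches}")
```

A buffer sized this way is what an application would need to hold one raw INT8 input or output tensor.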

2. rknn_mobilenet_demo

cd /tools/test/adv/npu2/rknn_mobilenet_demo
./rknn_mobilenet_demo model/mobilenet_v1.rknn model/cat_224x224.jpg
model input num: 1, output num: 1
input tensors:
  index=0, name=input, n_dims=4, dims=[1, 224, 224, 3], n_elems=150528, size=150528, fmt=NHWC, type=INT8, qnt_type=AFFINE, zp=0, scale=0.007812
output tensors:
  index=0, name=MobilenetV1/Predictions/Reshape_1, n_dims=2, dims=[1, 1001, 0, 0], n_elems=1001, size=1001, fmt=UNDEFINED, type=INT8, qnt_type=AFFINE, zp=-128, scale=0.003906
rknn_run
--- Top5 ---
283: 0.468750
282: 0.242188
286: 0.105469
464: 0.089844
264: 0.019531
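The Top5 scores in this log are consistent with the usual affine dequantization rule, real = (q - zp) * scale, applied to the INT8 output tensor with zp=-128. The sketch below illustrates that rule. It assumes the printed scale 0.003906 is 1/256 rounded to six decimals, and the raw INT8 values are hypothetical: they were back-computed from the printed scores and do not appear in the log.

```python
def dequantize(q, zero_point, scale):
    """Map an affine-quantized integer back to a real value."""
    return (q - zero_point) * scale


ZERO_POINT = -128   # zp from the output tensor in the log
SCALE = 1 / 256     # assumption: the log's 0.003906 is 1/256 rounded

# Hypothetical raw INT8 outputs (class index -> value), back-computed
# from the Top5 scores; they are not printed by the demo.
RAW_INT8 = {283: -8, 282: -66, 286: -101, 464: -105, 264: -123}

scores = {cls: dequantize(q, ZERO_POINT, SCALE) for cls, q in RAW_INT8.items()}

# Sort by score, highest first, as the demo's Top5 listing does.
for cls, score in sorted(scores.items(), key=lambda item: item[1], reverse=True):
    print(f"{cls}: {score:.6f}")
```

With zp=-128 and a scale of 1/256, the INT8 range [-128, 127] maps to [0, 255/256], which suits a softmax output.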

rknn-toolkit2

Tool Introduction

RKNN-Toolkit2 is a development kit that provides users with model conversion, inference and performance evaluation on PC platforms. Users can easily complete the following functions through the Python interface provided by the tool:

- Model conversion: Supports converting Caffe, TensorFlow, TensorFlow Lite, ONNX, Darknet and PyTorch models to RKNN models, and supports RKNN model import/export. The resulting models can then be used on Rockchip NPU platforms.

- Quantization: Supports converting float models to quantized models. The currently supported quantization method is asymmetric quantization (asymmetric_quantized-8). Hybrid quantization is also supported.

- Model inference: Can simulate the NPU on a PC to run an RKNN model and get the inference results. The tool can also distribute the RKNN model to a specified NPU device, run it there, and get the inference results.

- Performance & Memory evaluation: Distributes the RKNN model to a specified NPU device to run, and evaluates the model's performance and memory consumption on the actual device.

- Quantitative error analysis: Reports the Euclidean or cosine distance between each layer's inference results before and after the model is quantized. This can be used to analyze where quantization error arises and suggests ways to improve the accuracy of quantized models.

- Model encryption: Uses the specified encryption method to encrypt the RKNN model as a whole.
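To make the quantization and error-analysis items above more concrete, here is a minimal pure-Python sketch of 8-bit asymmetric (affine) quantization of one layer's values, followed by the Euclidean and cosine distance between the float and dequantized results. It does not use or reproduce the RKNN-Toolkit2 implementation. All function names, the min/max calibration rule, and the definition of cosine distance as 1 minus cosine similarity are assumptions chosen for illustration.

```python
import math

QMIN, QMAX = -128, 127  # signed 8-bit range


def quant_params(values):
    """Derive scale and zero point from the min/max of the data (assumed rule)."""
    lo = min(min(values), 0.0)  # keep 0.0 exactly representable
    hi = max(max(values), 0.0)
    scale = (hi - lo) / (QMAX - QMIN) or 1.0  # avoid a zero scale
    zero_point = round(QMIN - lo / scale)
    return scale, zero_point


def quantize(values, scale, zero_point):
    """Round to the nearest integer step and clamp to the 8-bit range."""
    return [max(QMIN, min(QMAX, round(v / scale) + zero_point)) for v in values]


def dequantize(qvalues, scale, zero_point):
    """Map quantized integers back to real values."""
    return [(q - zero_point) * scale for q in qvalues]


def euclidean_distance(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def cosine_distance(a, b):
    """1 - cosine similarity; 0.0 means the vectors point the same way."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)


# Hypothetical float outputs of one layer.
float_layer = [-1.0, -0.25, 0.0, 0.5, 2.0]

scale, zero_point = quant_params(float_layer)
quantized = quantize(float_layer, scale, zero_point)
restored = dequantize(quantized, scale, zero_point)

print("scale:", scale, "zero point:", zero_point)
print("quantized:", quantized)
print("euclidean distance:", euclidean_distance(float_layer, restored))
print("cosine distance:", cosine_distance(float_layer, restored))
```

A layer whose distances are much larger than those of its neighbours is a natural candidate for closer inspection, for example by keeping it in higher precision under hybrid quantization.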

System Dependency

TBD

Installation

TBD