Difference between revisions of "NXP eIQ"

Revision by Darren.huang

Darren.huang (talk | contribs) |

||

| Line 1: | Line 1: | ||

| − | + | <span style="color:#0070c0">NXP i.MX series</span> | |

| − | |||

| − | |||

The i.MX 8M Plus family focuses on neural processing unit (NPU) and vision system, advance multimedia, andindustrial automation with high reliability. | The i.MX 8M Plus family focuses on neural processing unit (NPU) and vision system, advance multimedia, andindustrial automation with high reliability. | ||

| Line 64: | Line 62: | ||

[[File:2023-09-27 170604.png|400px|2023-09-27 170604.png]] | [[File:2023-09-27 170604.png|400px|2023-09-27 170604.png]] | ||

| + | | ||

==== <span style="color:#0070c0">eIQ - A Python Framework for eIQ on i.MX Processors</span> ==== | ==== <span style="color:#0070c0">eIQ - A Python Framework for eIQ on i.MX Processors</span> ==== | ||

| Line 88: | Line 87: | ||

*[[:File:pyeiq-3.1.0.tar.gz]] | *[[:File:pyeiq-3.1.0.tar.gz]] | ||

| − | + | <span style="color:#0070c0">How to Run Samples</span> | |

| − | |||

* Start the manager tool: | * Start the manager tool: | ||

| Line 124: | Line 122: | ||

|} | |} | ||

| − | + | <span style="color:#0070c0">PyeIQ Demos</span> | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | + | *covid19_detection | |

| + | *object_classification_tflite | ||

| + | *object_detection_tflite | ||

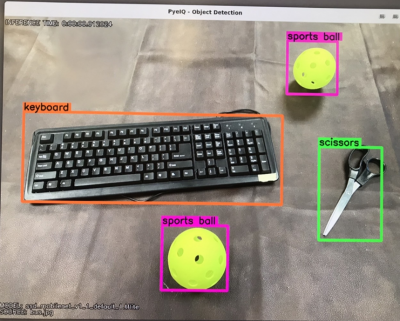

| − | + | ====== <span style="color:#0070c0">Demos Example - Running Object Detection</span> ====== | |

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | ====== <span style="color:#0070c0">Demos Example - Running Object Detection | ||

Object detection is a computer technology related to computer vision and image processing that deals with detecting instances of semantic objects of a certain class (such as humans, buildings, or cars) in digital images and videos. Well-researched domains of object detection include face detection and pedestrian detection. Object detection has applications in many areas of computer vision, including image retrieval and video surveillance. | Object detection is a computer technology related to computer vision and image processing that deals with detecting instances of semantic objects of a certain class (such as humans, buildings, or cars) in digital images and videos. Well-researched domains of object detection include face detection and pedestrian detection. Object detection has applications in many areas of computer vision, including image retrieval and video surveillance. | ||

| Line 251: | Line 136: | ||

* This runs inference on a default image: | * This runs inference on a default image: | ||

| − | + | [[File:2023-09-27_172321.png|400px]] | |

| − | [[File: | + | |

*Run the ''Object Detection'' '''Custom Image '''demo using the following line: | *Run the ''Object Detection'' '''Custom Image '''demo using the following line: | ||

Revision as of 10:24, 27 September 2023

NXP i.MX series

The i.MX 8M Plus family focuses on the neural processing unit (NPU) and vision systems, advanced multimedia, and industrial automation with high reliability.

- The Neural Processing Unit (NPU) of the i.MX 8M Plus operates at up to 2.3 TOPS.


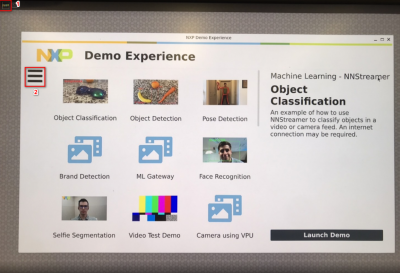

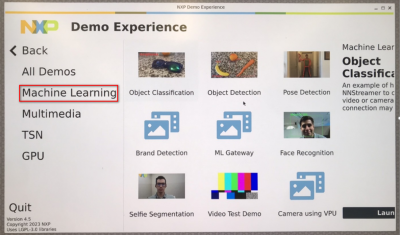

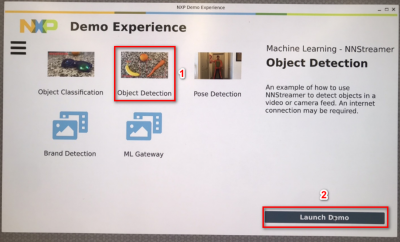

NXP Demo Experience

- Preinstalled on NXP-provided demo Linux images

- The imx-image-full image must be used

- Yocto releases: Yocto 3.3 (5.10.52_2.1.0) through Yocto 4.2 (6.1.1_1.0.0)

- An internet connection is required

Start the demo launcher by clicking the NXP logo displayed in the top left-hand corner of the screen.

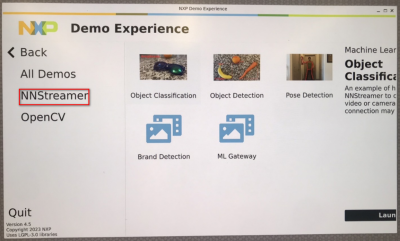

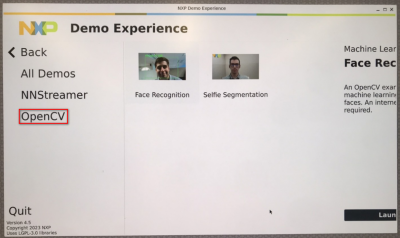

Machine Learning Demos

- NNStreamer demos
  - Object classification
  - Object detection
  - Pose detection
  - Brand detection
  - ML gateway

- OpenCV demos
  - Face recognition
  - Selfie segmentation

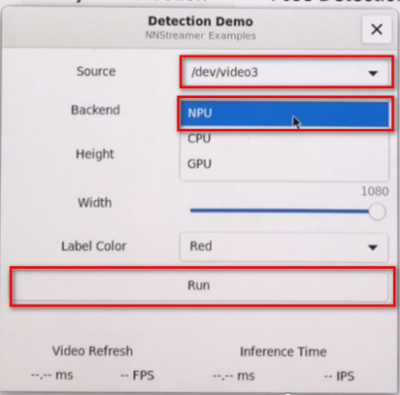

NNStreamer Demo: Object Detection

Click "Object Detection", then click Launch Demo.

Set the following parameters:

- Source: Select the camera to use, or use the example video.

- Backend: Select whether to use the NPU (if available) or CPU for inferences.

- Height: Select the input height of the video if using a camera.

- Width: Select the input width of the video if using a camera.

- Label Color: Select the color of the overlay labels.

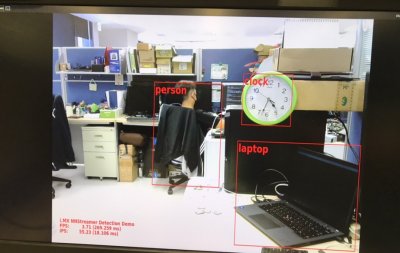

Result of object detection running on the NPU:

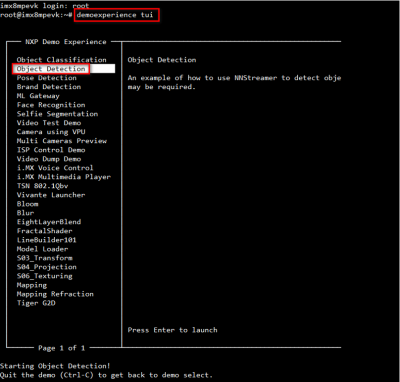

NXP Demo Experience - Text User Interface (TUI)

- Command: demoexperience tui

PyeIQ - A Python Framework for eIQ on i.MX Processors

PyeIQ is written on top of the eIQ™ ML Software Development Environment and provides a set of Python classes that let the user run machine learning applications in a simplified and efficient way, without spending time on cross-compilation, deployment, or reading extensive guides.

Installation

- Method 1: Use the pip3 tool to install the package from the PyPI repository:

$ pip3 install pyeiq

- Method 2: Download the latest tarball, copy it to the board, and install it with pip3:

$ pip3 install <tarball>

pyeiq tarball: pyeiq-3.1.0.tar.gz
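After either installation method, it can be useful to confirm that the package is visible to the board's Python interpreter. The following is a minimal sketch, assuming only that the distribution is registered under the name `pyeiq` (the name used with pip3 above); the helper `installed_version` is a hypothetical name introduced here for illustration and is not part of PyeIQ.

```python
from importlib import metadata
from typing import Optional


def installed_version(package: str = "pyeiq") -> Optional[str]:
    """Return the installed version of `package`, or None if it is not installed.

    Hypothetical helper: it only queries the package metadata that pip
    records at install time and does not import the package itself.
    """
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


if __name__ == "__main__":
    version = installed_version()
    if version is None:
        print("pyeiq is not installed; try: pip3 install pyeiq")
    else:
        print(f"pyeiq {version} is installed")
```

Querying the metadata rather than importing the package keeps the check fast and avoids loading any machine learning dependencies.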

How to Run Samples

- Start the manager tool:

$ pyeiq

- The above command returns the PyeIQ manager tool options:

| Manager Tool Command | Description | Example |
| --- | --- | --- |
| pyeiq --list-apps | List the available applications. | |
| pyeiq --list-demos | List the available demos. | |
| pyeiq --run <app_name/demo_name> | Run the application or demo. | # pyeiq --run object_detection_tflite |
| pyeiq --info <app_name/demo_name> | Show a short description and usage of the application or demo. | # pyeiq --info object_detection_tflite |
| pyeiq --clear-cache | Clear cached media generated by demos. | |
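When the manager tool is driven from a script rather than typed by hand, the options in the table can be assembled programmatically. The sketch below is illustrative only: `manager_command` and `run_manager` are hypothetical helper names, and the only flags used are the ones listed in the table. It assumes the `pyeiq` executable is on the PATH and reports when it is not.

```python
import shutil
import subprocess
from typing import List, Optional

# Options taken from the manager tool table; nothing else is assumed to exist.
ACTIONS_WITHOUT_TARGET = {"list-apps", "list-demos", "clear-cache"}
ACTIONS_WITH_TARGET = {"run", "info"}


def manager_command(action: str, target: Optional[str] = None) -> List[str]:
    """Build the argument list for one manager tool invocation."""
    if action in ACTIONS_WITHOUT_TARGET:
        if target is not None:
            raise ValueError(f"--{action} does not take an app or demo name")
        return ["pyeiq", f"--{action}"]
    if action in ACTIONS_WITH_TARGET:
        if not target:
            raise ValueError(f"--{action} needs an app or demo name")
        return ["pyeiq", f"--{action}", target]
    raise ValueError(f"unknown manager tool action: {action}")


def run_manager(action: str, target: Optional[str] = None) -> int:
    """Run the command if pyeiq is available and return its exit code."""
    cmd = manager_command(action, target)
    if shutil.which(cmd[0]) is None:
        # Not on the board (or pyeiq not installed): show what would be run.
        print("pyeiq not found on PATH; would run:", " ".join(cmd))
        return 127
    return subprocess.run(cmd, check=False).returncode


if __name__ == "__main__":
    run_manager("info", "object_detection_tflite")
```

Passing the arguments as a list avoids shell quoting problems if a demo name or path ever contains spaces.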

PyeIQ Demos

- covid19_detection

- object_classification_tflite

- object_detection_tflite

Demos Example - Running Object Detection

Object detection is a computer technology related to computer vision and image processing that deals with detecting instances of semantic objects of a certain class (such as humans, buildings, or cars) in digital images and videos. Well-researched domains of object detection include face detection and pedestrian detection. Object detection has applications in many areas of computer vision, including image retrieval and video surveillance.

- Run the Object Detection Default Image demo with the following command:

$ pyeiq --run object_detection_tflite

- This runs inference on a default image.

- Run the Object Detection Custom Image demo with the following command:

$ pyeiq --run object_detection_tflite --image=/path_to_the_image

- Run the Object Detection Video File demo with the following command:

$ pyeiq --run object_detection_tflite --video_src=/path_to_the_video

- Run the Object Detection Video Camera or Webcam demo with the following command:

$ pyeiq --run object_detection_tflite --video_src=/dev/video<index>
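The four invocations above differ only in the input source, so they can be generated from one small function. This is a minimal sketch, not PyeIQ code: `object_detection_command` and `camera_device` are hypothetical names, the only options used are the `--image` and `--video_src` options shown above, and treating the two options as mutually exclusive is an assumption made here for clarity.

```python
import shlex
from typing import List, Optional


def camera_device(index: int) -> str:
    """Return the V4L2 device path for a camera index, e.g. /dev/video0."""
    if index < 0:
        raise ValueError("camera index must be non-negative")
    return f"/dev/video{index}"


def object_detection_command(
    image: Optional[str] = None, video_src: Optional[str] = None
) -> List[str]:
    """Build the object_detection_tflite command for the chosen input source.

    With no arguments the demo runs on its default image.
    """
    # Assumption: only one input source is given per run.
    if image is not None and video_src is not None:
        raise ValueError("pass either image or video_src, not both")
    cmd = ["pyeiq", "--run", "object_detection_tflite"]
    if image is not None:
        cmd.append(f"--image={image}")
    if video_src is not None:
        cmd.append(f"--video_src={video_src}")
    return cmd


if __name__ == "__main__":
    # Print each variant as a shell-ready command line.
    print(shlex.join(object_detection_command()))
    print(shlex.join(object_detection_command(image="/path_to_the_image")))
    print(shlex.join(object_detection_command(video_src="/path_to_the_video")))
    print(shlex.join(object_detection_command(video_src=camera_device(0))))
```

The resulting list can be passed directly to `subprocess.run` on the board, or printed with `shlex.join` as above to paste into a terminal.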