Edge AI SDK/AI Framework/RK3588


Author: Zhihao.zhu


Revision as of 06:52, 7 July 2025


Requirement

| Device | eMMC (recommended physical size) | Full installed size |
|---|---|---|
| ASR-A501 | 64 GB | 135 MB |
| AOM-3821 | 32 GB | 135 MB |

Version

| Device | EdgeAISDK | OS | Supported framework version |
|---|---|---|---|
| AOM-3821 | v3.3.1 | Debian 12 (kernel 6.1.75) | |
| ASR-A501 | v3.3.1 | Debian 12 (kernel 6.1.75) | librknnrt version: 2.0.0b0, rknpu 0.9.7 |

RKNN SDK

The RKNN SDK ([Baidu](https://pan.baidu.com/s/1R0DhNJU56Uhp4Id7AzNfgQ), password: a887) includes two parts:

- rknpu2 (on the board side)

- rknn-toolkit2 (on the PC)

```
├── rknn-toolkit2
│   ├── doc
│   ├── examples
│   ├── packages
│   └── rknn_toolkit_lite2
└── rknpu2
    ├── Driver
    └── RKNPU2 Environment
```

rknpu2

rknpu2 includes the driver and runtime library used to quickly develop AI applications with RKNN models (*.rknn). Models from other frameworks (e.g. Caffe, TensorFlow) can be converted to RKNN models with rknn-toolkit2. The RKNN API library file librknnrt.so and the header file rknn_api.h are located in rknpu2/runtime. The released BSP and images already include the NPU driver and runtime libraries.
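A quick way to check which runtime a board is running is to read the version banner embedded in librknnrt.so. The sketch below assumes two things. First, that the library stores a plain-text string of the form `librknnrt version: 2.0.0b0 (...)`, which is the same string a `strings ... | grep` check relies on. Second, that the library is installed at `/usr/lib/librknnrt.so` on the board. Both the path and the helper names are assumptions, so adjust them for your image.

```python
import re
from pathlib import Path
from typing import Optional

# Assumed on-board location of the runtime library; adjust for your image.
DEFAULT_LIB = Path("/usr/lib/librknnrt.so")

# Matches a banner such as "librknnrt version: 2.0.0b0 (...)" stored in the binary.
_VERSION_RE = re.compile(rb"librknnrt version:\s*([0-9A-Za-z.]+)")


def parse_librknnrt_version(blob: bytes) -> Optional[str]:
    """Return the first version string found in the library bytes, or None."""
    match = _VERSION_RE.search(blob)
    return match.group(1).decode("ascii") if match else None


def librknnrt_version(lib_path: Path = DEFAULT_LIB) -> Optional[str]:
    """Read the shared library from disk and extract its version banner."""
    if not lib_path.is_file():
        return None
    return parse_librknnrt_version(lib_path.read_bytes())


if __name__ == "__main__":
    print(librknnrt_version() or "librknnrt.so not found, or no version banner in it")
```

On the ASR-A501 image listed in the Version table, this would be expected to report 2.0.0b0, provided the banner assumption holds.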

RKNPU2 driver

The official board firmware ships with the RKNPU2 driver installed. To confirm that the driver is loaded, search the kernel log:

```
dmesg | grep -i rknpu
```
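The same check can be scripted so that it returns just the driver version. This is a minimal sketch that assumes the driver announces itself with a DRM-style log line such as `Initialized rknpu 0.9.7 ...`. That exact wording is an assumption, so only the version field is matched. The helper names are hypothetical.

```python
import re
import subprocess
from typing import Optional

# Assumed DRM-style announcement, e.g. "[drm] Initialized rknpu 0.9.7 20240424 for ...".
_RKNPU_RE = re.compile(r"Initialized rknpu (\d+\.\d+\.\d+)", re.IGNORECASE)


def rknpu_version_from_log(log_text: str) -> Optional[str]:
    """Return the first rknpu driver version found in kernel log text, or None."""
    match = _RKNPU_RE.search(log_text)
    return match.group(1) if match else None


def read_kernel_log() -> str:
    """Return the output of `dmesg` (may require root on some images)."""
    try:
        result = subprocess.run(["dmesg"], capture_output=True, text=True, check=False)
    except FileNotFoundError:
        return ""  # dmesg is not installed
    return result.stdout


if __name__ == "__main__":
    version = rknpu_version_from_log(read_kernel_log())
    print(f"rknpu driver {version}" if version else "rknpu driver not found in dmesg")
```

If the log line on your image is worded differently, change only the regular expression.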

RKNPU2 Environment

The RKNPU2 environment involves two basic components:

- RKNN Server: a background proxy service running on the development board. It receives data sent from the PC over USB, calls the corresponding board-side Runtime interface to process it, and returns the results to the PC.
- RKNPU2 Runtime library (librknnrt.so): loads RKNN models on the system and runs their inference on the dedicated neural processing unit (NPU).
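PC-side tools can reach the NPU only while the RKNN Server is running on the board. The sketch below checks for it from Python on the board, much like `pgrep -x rknn_server`. It assumes a Linux `/proc` filesystem and that the server process is named `rknn_server`; treat that name as an assumption for your image.

```python
from pathlib import Path

# Linux truncates /proc/<pid>/comm to 15 characters.
_COMM_MAX = 15


def is_process_running(name: str, proc_root: Path = Path("/proc")) -> bool:
    """Return True if any process under proc_root has the given command name."""
    if not proc_root.is_dir():
        return False
    target = name[:_COMM_MAX]
    for entry in proc_root.iterdir():
        if not entry.name.isdigit():
            continue  # skip non-PID entries such as /proc/self or /proc/cpuinfo
        try:
            comm = (entry / "comm").read_text().strip()
        except OSError:
            continue  # the process exited or is not readable
        if comm == target:
            return True
    return False


if __name__ == "__main__":
    # "rknn_server" is the assumed process name of the RKNN Server.
    state = "running" if is_process_running("rknn_server") else "not running"
    print(f"rknn_server is {state}")
```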

For more information, refer to https://developer.nvidia.com/embedded/jetpack

JetPack [5.1.2](https://developer.nvidia.com/embedded/jetpack-sdk-512) includes CUDA 11.4.19, TensorRT 8.5.2, DeepStream 6.2, …

rknn-toolkit2

RKNN-Toolkit2 is a development kit that provides model conversion, inference, and performance evaluation on the PC. Through its Python interface, users can easily perform the following tasks:

- Model conversion: converts Caffe / TensorFlow / TensorFlow Lite / ONNX / Darknet / PyTorch models to RKNN models, and supports importing and exporting RKNN models, which can then be deployed on the Rockchip NPU platform.

- Quantization: converts floating-point models to quantized models. Supported methods include asymmetric quantization (asymmetric_quantized-8) and hybrid quantization.

- Model inference: simulates the NPU on the PC to run an RKNN model and obtain the inference results. It can also distribute the RKNN model to a specified NPU device, run it there, and return the results.

- Performance & memory evaluation: distributes the RKNN model to a specified NPU device and evaluates its performance and memory consumption on the actual device.

- Quantization error analysis: reports the Euclidean or cosine distance between each layer's inference results before and after quantization. This helps locate where quantization error arises and suggests ways to improve the accuracy of quantized models.

- Model encryption: encrypts the entire RKNN model with a specified encryption method.
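The conversion, quantization and on-device evaluation steps follow one sequence of calls in rknn-toolkit2's bundled examples: `RKNN()`, `config()`, `load_onnx()`, `build()`, `export_rknn()`, then `init_runtime()` and `release()`. The sketch below wraps that sequence for an ONNX model on the PC. Several details are assumptions: the mean/std values, the `"rk3588"` target string and both helper names. Check the API reference in rknn-toolkit2/doc for the exact parameters in your version. `build()` reads its calibration dataset from a text file that lists one image path per line.

```python
import os
from typing import Iterable


def write_dataset_list(image_paths: Iterable[str], out_path: str) -> str:
    """Write the quantization calibration list (one image path per line)."""
    text = "".join(f"{path}\n" for path in image_paths)
    with open(out_path, "w", encoding="utf-8") as f:
        f.write(text)
    return text


def convert_onnx_to_rknn(onnx_path: str, rknn_path: str, dataset_txt: str,
                         target: str = "rk3588", quantize: bool = True,
                         eval_on_board: bool = False) -> None:
    """Convert an ONNX model to an RKNN model with rknn-toolkit2 (runs on the PC)."""
    if not os.path.isfile(onnx_path):
        raise FileNotFoundError(onnx_path)

    # Imported lazily so the helper above is usable without rknn-toolkit2 installed.
    from rknn.api import RKNN

    rknn = RKNN(verbose=False)
    try:
        # Example normalization for 0-255 RGB input; match your model's preprocessing.
        rknn.config(mean_values=[[0, 0, 0]], std_values=[[255, 255, 255]],
                    target_platform=target)
        if rknn.load_onnx(model=onnx_path) != 0:
            raise RuntimeError("load_onnx failed")
        if rknn.build(do_quantization=quantize, dataset=dataset_txt) != 0:
            raise RuntimeError("build failed")
        if rknn.export_rknn(rknn_path) != 0:
            raise RuntimeError("export_rknn failed")
        if eval_on_board:
            # Requires the board connected over USB with the RKNN Server running.
            if rknn.init_runtime(target=target) != 0:
                raise RuntimeError("init_runtime failed")
            rknn.eval_perf()
    finally:
        rknn.release()
```

Calling `init_runtime()` without a target runs the model on the PC simulator instead, which corresponds to the Model inference item above.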
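The Quantization item can be illustrated with the standard asymmetric (affine) 8-bit scheme. A scale $s = (x_{max} - x_{min}) / 255$ and a zero point $z$ map a float range onto signed int8:

- quantize: $q = \mathrm{clamp}(\mathrm{round}(x / s) + z, -128, 127)$
- dequantize: $\hat{x} = (q - z)\,s$

This is a sketch of the general technique with simple min/max calibration. It is an assumption that it matches what asymmetric_quantized-8 does internally, and rknn-toolkit2 may use other calibration methods.

```python
from typing import List, Tuple

QMIN, QMAX = -128, 127  # signed 8-bit range


def asymmetric_params(x_min: float, x_max: float) -> Tuple[float, int]:
    """Compute the scale and zero point that map [x_min, x_max] onto [QMIN, QMAX]."""
    x_min, x_max = min(x_min, 0.0), max(x_max, 0.0)  # keep 0.0 exactly representable
    scale = (x_max - x_min) / (QMAX - QMIN) or 1.0   # avoid a zero scale for all-zero data
    zero_point = round(QMIN - x_min / scale)
    return scale, max(QMIN, min(QMAX, zero_point))


def quantize(values: List[float], scale: float, zero_point: int) -> List[int]:
    """Map floats to int8 codes (Python's round() rounds halves to even)."""
    return [max(QMIN, min(QMAX, round(v / scale) + zero_point)) for v in values]


def dequantize(codes: List[int], scale: float, zero_point: int) -> List[float]:
    """Map int8 codes back to approximate float values."""
    return [(q - zero_point) * scale for q in codes]
```

For a tensor in [-1.0, 3.0], the scale is 4/255 and the zero point is -64. Inside that range, each value round-trips with an error of at most half a scale step.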
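The two per-layer metrics named under Quantization error analysis can be computed as shown below. Cosine distance is 1 minus cosine similarity. rknn-toolkit2 computes these itself through its accuracy-analysis interface (`accuracy_analysis()` in the Python API; check rknn-toolkit2/doc for its signature). This sketch only shows the arithmetic. The dict-of-flattened-lists layout and the layer names are hypothetical.

```python
import math
from typing import Dict, List, Sequence, Tuple


def euclidean_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """L2 distance between two flattened tensors."""
    if len(a) != len(b):
        raise ValueError("tensors must have the same number of elements")
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two flattened tensors (1.0 means same direction)."""
    if len(a) != len(b):
        raise ValueError("tensors must have the same number of elements")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 1.0 if norm_a == norm_b else 0.0  # both zero counts as identical
    return dot / (norm_a * norm_b)


def compare_layers(float_out: Dict[str, List[float]],
                   quant_out: Dict[str, List[float]]) -> Dict[str, Tuple[float, float]]:
    """Per layer: (cosine similarity, Euclidean distance) between float and quantized outputs."""
    return {
        name: (cosine_similarity(f, quant_out[name]), euclidean_distance(f, quant_out[name]))
        for name, f in float_out.items()
    }
```

A layer whose cosine similarity drops well below its neighbours' is a natural first candidate for hybrid quantization.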

Applications

Edge AI SDK / Vision Application

| Application | Model | AOM-3821 FPS (video file) | ASR-A501 FPS (video file) |
|---|---|---|---|
| Object Detection | yolov10 | 30 | 30 |
| Person Detection | yolov10 | 30 | 30 |
| Face Detection | retinaface | 30 | 30 |
| Pose Estimation | yolov8_pose | 30 | 30 |

Edge AI SDK / GenAI Application

| Version | Application | Model | Note |
|---|---|---|---|
| v3.0.0 | LLM Chatbot | Llama-2-7b | |
| v3.3.0 | GenAI Chatbot | Gemma3:4b | Refer to Link |

Benchmark

Jetson is used to deploy a wide range of popular DNN models and ML frameworks to the edge with high performance inferencing, for tasks like real-time classification and object detection, pose estimation, semantic segmentation, and natural language processing (NLP).

For more information, refer to:

- https://developer.nvidia.com/embedded/jetson-benchmarks
- https://github.com/NVIDIA-AI-IOT/jetson_benchmarks

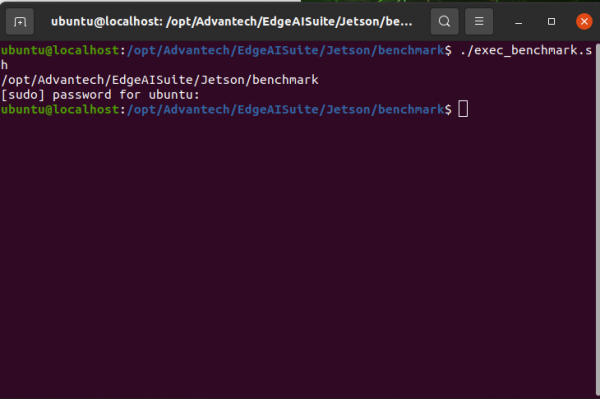

```shell
cd /opt/Advantech/EdgeAISuite/Jetson/benchmark
./exec_benchmark.sh
```

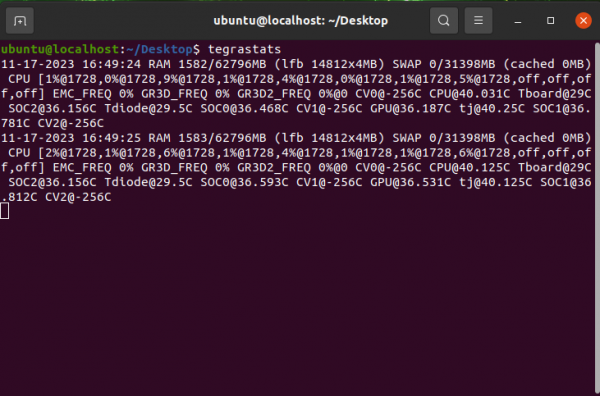

Tegrastats Utility

This SDK provides the tegrastats utility, which reports memory usage and processor usage for Tegra-based devices. You can find the utility in your package at the following location.

More Info refer to Link