Edge AI SDK/GenAIChatbot

Brief of GenAI Chatbot

Introduction

GenAI Chatbot is a conversational AI assistant built on Ollama and supports any Ollama-compatible model. It integrates with GenAI Studio, so models fine-tuned in GenAI Studio can be imported directly and used in the chatbot right away. At its core, GenAI Chatbot uses efficient Small Language Models (SLMs) to provide natural, context-aware interactions. It also offers audio processing (Speech-to-Text [STT] and Text-to-Speech [TTS]), Retrieval-Augmented Generation (RAG), and an embedded vector database (VectorDB), all configurable for different application scenarios. It is currently optimized for embedded platforms such as NVIDIA Jetson Orin Nano and Jetson AGX Orin.

SLM Chatbot

The GenAI Chatbot utilizes a Small Language Model (SLM) as its core engine. SLMs provide efficient language understanding and generation capabilities, delivering fast and accurate responses with low computational overhead. This allows the chatbot to operate smoothly on both edge devices and server environments.
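Because the chatbot is built on Ollama, a model can in principle also be queried from a script. The following is a minimal sketch, not the chatbot's own code. It assumes an Ollama server is reachable at Ollama's default address (`http://localhost:11434`), which may not be exposed in every GenAI Chatbot installation, and the helper names `build_chat_payload` and `chat` are made up for this illustration.

```python
import json
import urllib.request

# Assumption: an Ollama server is listening on its default host and port.
OLLAMA_URL = "http://localhost:11434/api/chat"


def build_chat_payload(model: str, user_text: str) -> dict:
    """Build the JSON body for Ollama's /api/chat endpoint."""
    return {
        "model": model,
        "messages": [{"role": "user", "content": user_text}],
        "stream": False,  # request one complete JSON reply instead of a stream
    }


def chat(model: str, user_text: str, url: str = OLLAMA_URL) -> str:
    """Send one user message and return the assistant's reply text."""
    body = json.dumps(build_chat_payload(model, user_text)).encode("utf-8")
    request = urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(request, timeout=120) as response:
        reply = json.load(response)
    return reply["message"]["content"]
```

Calling `chat("<model-name>", "Hello")` would then return the reply text, where `<model-name>` is whichever model has been downloaded to the device.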

Integrate with GenAI Studio

GenAI Chatbot is fully integrated with GenAI Studio, a platform for managing LLMs. Within GenAI Studio, users can:

- Perform model fine-tuning.

- Access a variety of model quantization methods for deployment on different hardware.

- Convert models easily to the formats required by various platforms.

- Customize and personalize AI models with ease.

This integration significantly simplifies the process of deploying and customizing chatbots for both enterprises and developers.
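To see why the choice of quantization method matters on memory-constrained hardware, the weight size of a model can be estimated as parameter count times bits per weight. The sketch below is a back-of-the-envelope calculation only. The 3-billion-parameter model is hypothetical, and real quantized formats store extra metadata, so actual files are somewhat larger.

```python
def weight_size_gb(num_params: float, bits_per_weight: float) -> float:
    """Approximate size of the model weights in gigabytes (10^9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


# Hypothetical 3-billion-parameter model at three common precisions.
for label, bits in [("FP16", 16), ("INT8", 8), ("4-bit", 4)]:
    print(f"{label}: {weight_size_gb(3e9, bits):.1f} GB")
```

The estimate covers weights only. Runtime memory for the context and activations comes on top of it.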

Audio

- Speech-to-Text (STT)

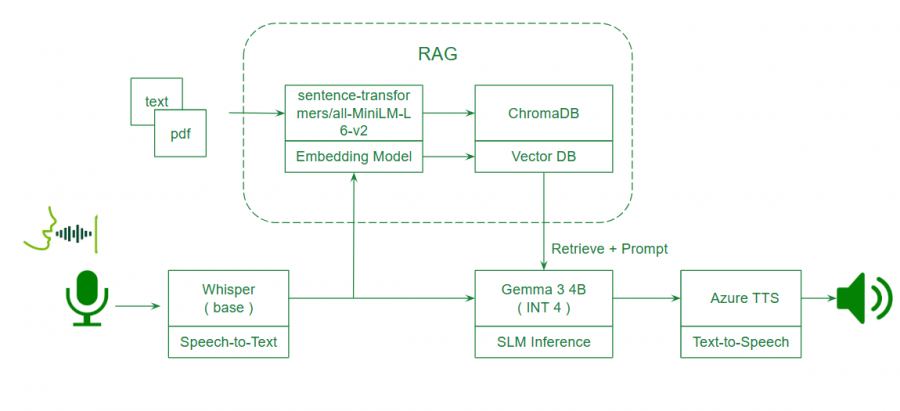

GenAI Chatbot supports natural voice input, automatically converting user speech queries into text for processing. The system can flexibly integrate with a variety of Speech-to-Text (STT) engines and providers. By default, it uses the Whisper-Base model and supports integration with OpenAI API, Web Browser API, Deepgram API, and Azure AI Speech API, making it easy to connect to different STT services as needed.

- Text-to-Speech (TTS)

Chatbot responses can be automatically converted to natural-sounding speech using Text-to-Speech (TTS). Users are free to choose different voices and languages. The system supports the Web API by default and can be integrated with OpenAI API, Transformers, ElevenLabs, and Azure AI Speech API, providing flexible options for TTS services.

RAG

GenAI Chatbot supports Retrieval-Augmented Generation (RAG), combining large language models (LLMs) with external knowledge bases to greatly improve the accuracy and depth of answers. This makes it suitable for knowledge-based Q&A, document search, FAQ systems, and various information retrieval scenarios.

- Embedding

- The system converts various documents, knowledge sources, or custom content into vector (embedding) representations to enable efficient semantic search.

- By default, the sentence-transformers/all-MiniLM-L6-v2 model is used for embeddings, balancing computational efficiency and semantic understanding.

- Supports automatic embedding of multilingual documents in the following file formats: TXT and PDF (text content only).

- Custom embedding models are supported to flexibly meet the needs of different professional domains.

- Note: The maximum size of individual files and support for specific file formats are subject to the architecture and limitations of the selected embedding model. Some models have limited capability when processing long texts, special formats, or large files. It is recommended to evaluate and choose the most suitable embedding model based on your actual application scenarios to ensure both performance and accuracy.

- VectorDB

- The generated embeddings are stored in a VectorDB (vector database), supporting large-scale, efficient semantic search and matching.

- By default, ChromaDB is used as the vector database, offering real-time retrieval and ease of deployment and management.

- The system can be extended to support other mainstream VectorDBs, making it suitable for enterprise or cloud-based deployments.

- Built-in features such as deduplication, sharding, and data weighting ensure query efficiency and result quality.
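The embed, store, and retrieve flow described above can be sketched with the standard library alone. This is a toy illustration, not the chatbot's implementation: word counts stand in for the sentence-transformers embeddings, an in-memory list stands in for ChromaDB, and every name (`chunk_text`, `embed`, `ToyVectorStore`) is made up for the example.

```python
import math
import re
from collections import Counter


def chunk_text(text: str, max_words: int = 40, overlap: int = 10) -> list[str]:
    """Split text into overlapping word windows so each chunk stays short."""
    words = text.split()
    step = max_words - overlap
    chunks = []
    for start in range(0, max(len(words) - overlap, 1), step):
        chunk = " ".join(words[start:start + max_words])
        if chunk:
            chunks.append(chunk)
    return chunks


def embed(text: str) -> Counter:
    """Toy embedding: lowercase word counts (stand-in for a neural model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class ToyVectorStore:
    """In-memory stand-in for a vector database."""

    def __init__(self) -> None:
        self._items: list[tuple[str, Counter]] = []
        self._seen: set[str] = set()

    def add(self, chunk: str) -> None:
        if chunk in self._seen:  # skip exact duplicates
            return
        self._seen.add(chunk)
        self._items.append((chunk, embed(chunk)))

    def query(self, question: str, top_k: int = 1) -> list[str]:
        """Return the top_k stored chunks most similar to the question."""
        q = embed(question)
        ranked = sorted(self._items, key=lambda item: cosine(q, item[1]), reverse=True)
        return [chunk for chunk, _ in ranked[:top_k]]


store = ToyVectorStore()
documents = [
    "ChromaDB is the default vector database.",
    "Whisper-Base is the default speech-to-text model.",
]
for document in documents:
    for chunk in chunk_text(document):
        store.add(chunk)

print(store.query("Which vector database is used by default?"))
```

In a RAG setup, the retrieved chunks are then placed into the model's prompt as context. A real embedding model matches by meaning rather than shared words, which this word-count stand-in cannot do.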

How To

Download SLM Models from GenAI Studio

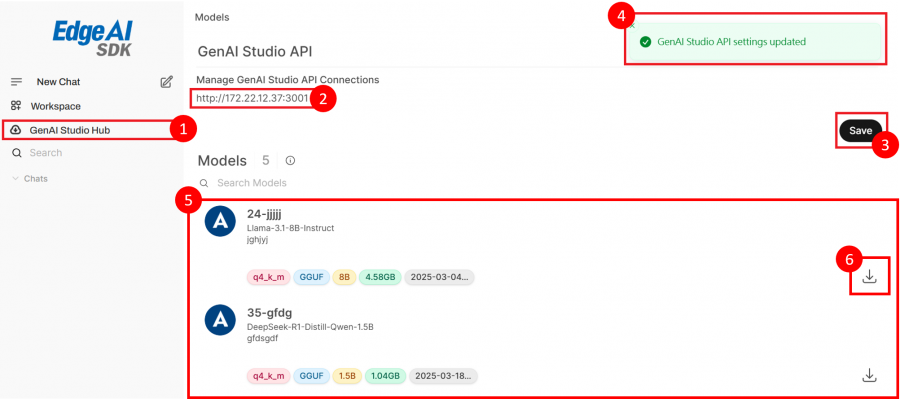

- Click on icon 1 to open the GenAI Studio Hub page.

- At icon 2, enter the URL of your GenAI Studio.

- Click on icon 3 to save your settings.

- Once the configuration is successful, a notification will appear as shown at icon 4.

- Icon 5 displays a list of all models supported by GenAI Studio.

- Click on icon 6 to download the desired model.

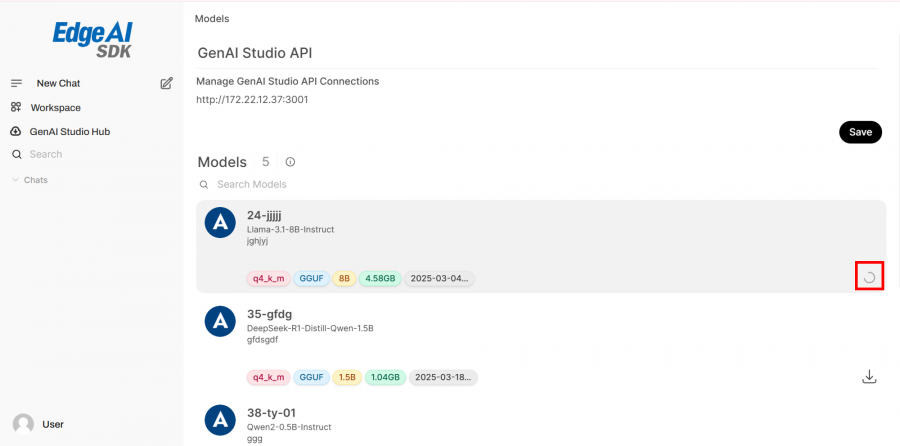

- Once the download begins, a loading icon will be displayed.

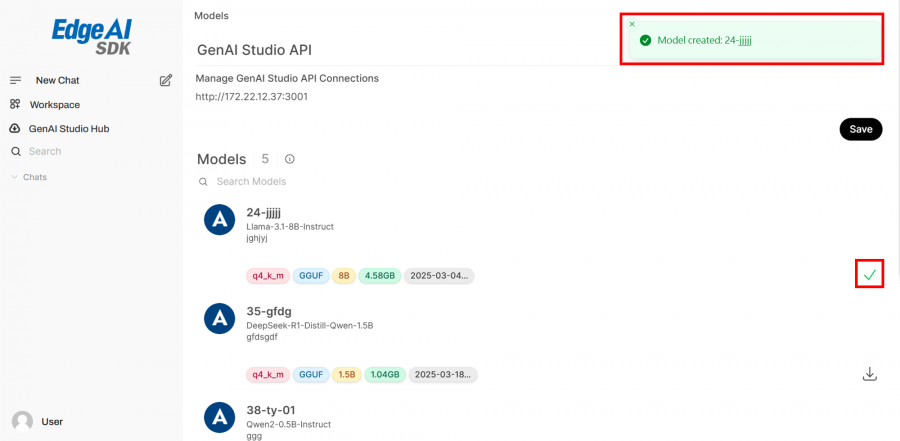

- After the download is complete, a notification will appear, and the download icon will change to a completion icon.

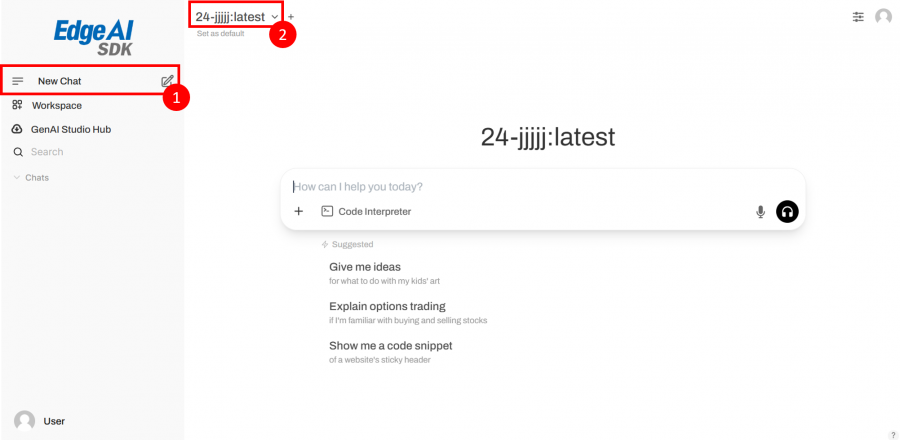

- After the model has finished downloading, click on icon 1 to create a new conversation.

- Next, click on icon 2 to select the model you just downloaded and start using it.

Create a new chatbot assistant with RAG

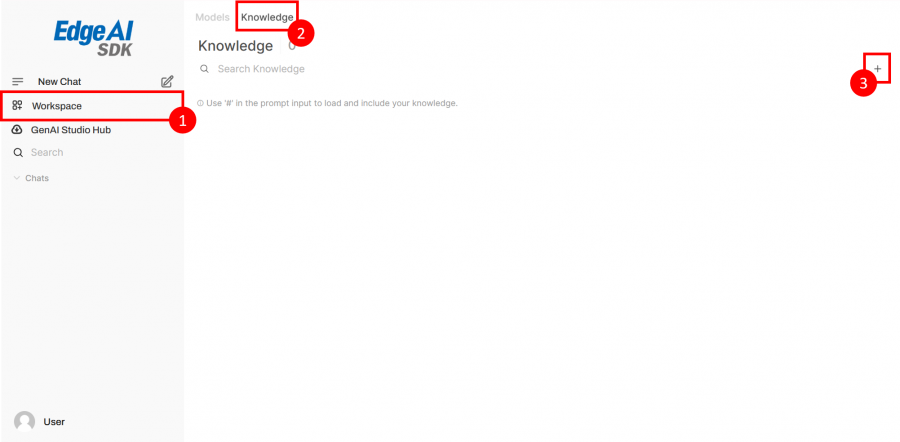

- Click on icon 1 to open the Workspace page.

- Click on icon 2 to open the Knowledge settings.

- Click on icon 3 to add new Knowledge.

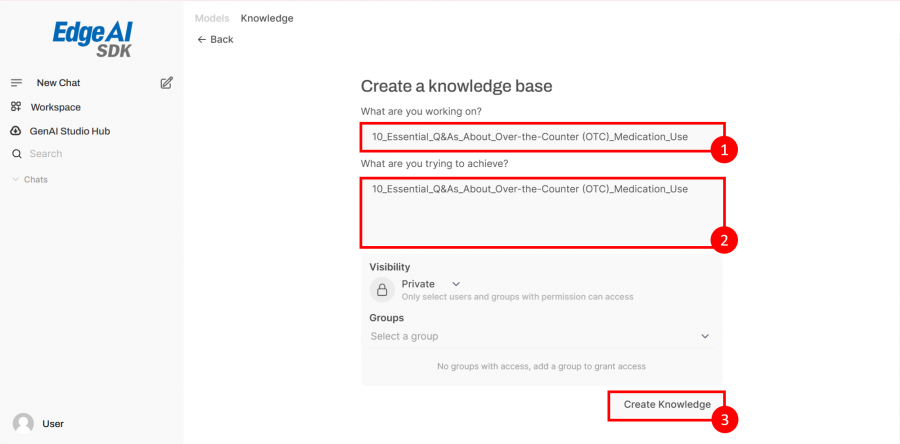

- Enter the title at icon 1.

- Enter the content at icon 2.

- Click on icon 3 to create the Knowledge.

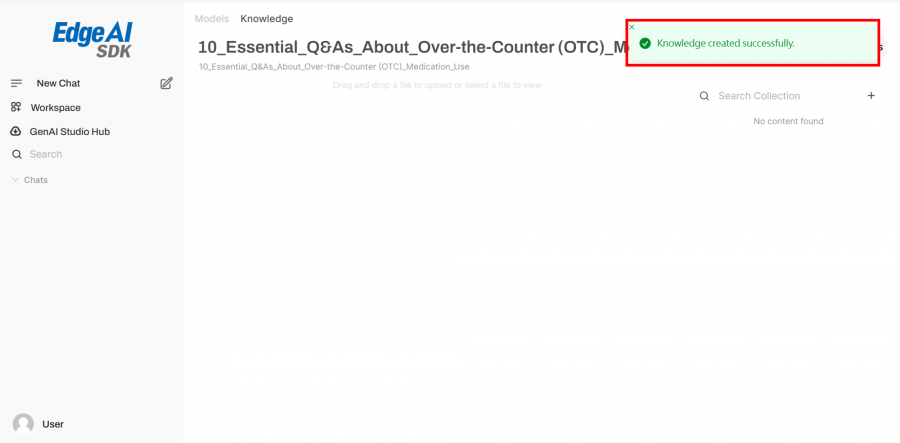

- The page will automatically switch back to the Knowledge section. After creation is complete, a notification will appear.

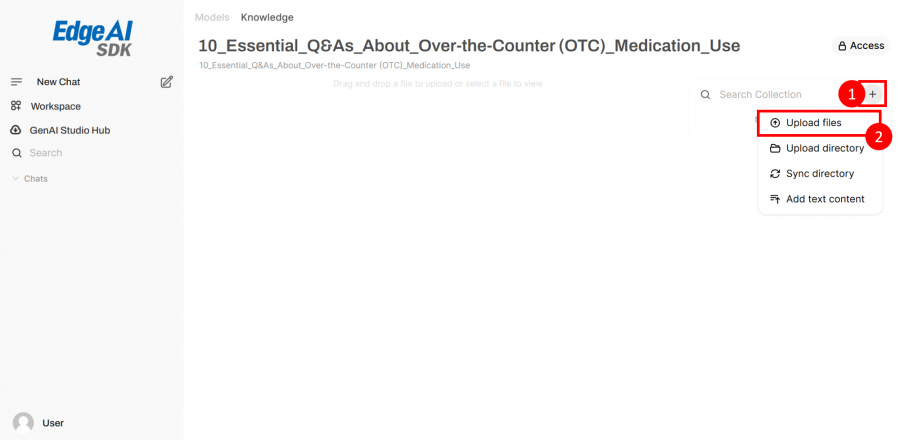

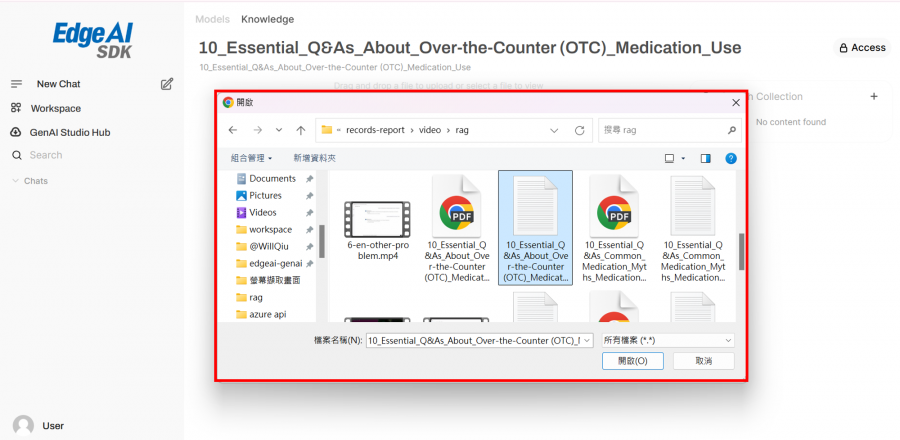

- Click on icon 1, the "+" icon.

- Click on icon 2, "Upload files."

- A file selection window will pop up. Select the files you want to use.

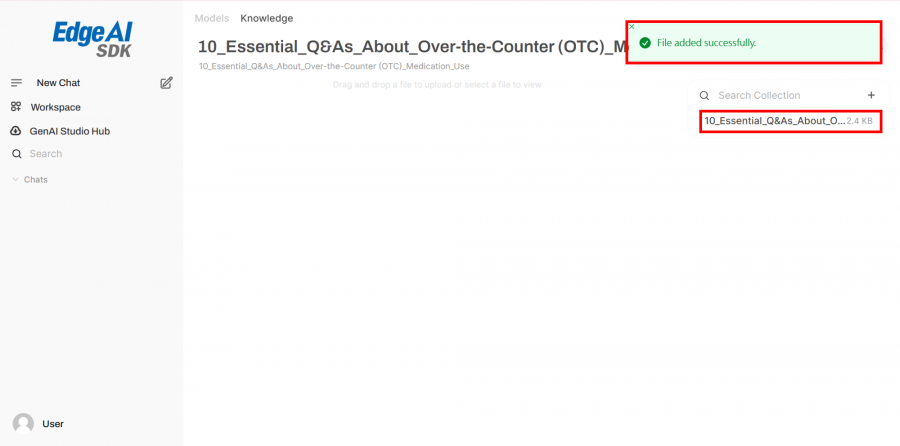

- After the upload, the files will be displayed and a success notification will appear.

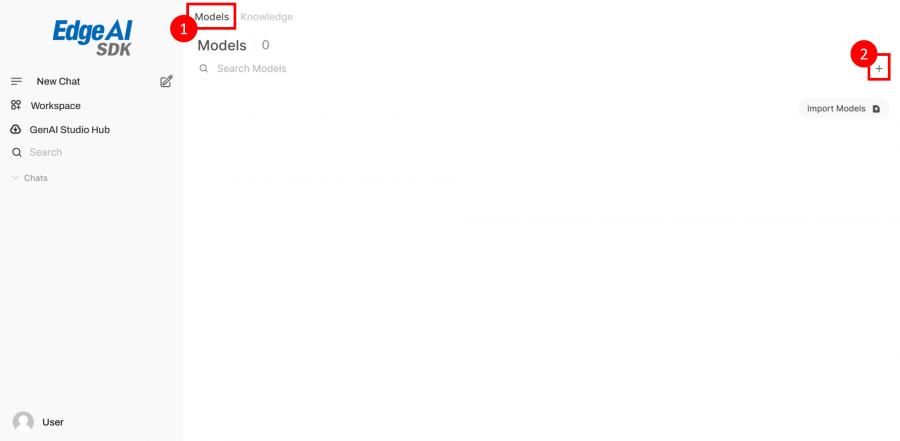

- After the Knowledge is created, click on icon 1 to go to the Models page.

- Click on icon 2, the "+" icon, to add a new knowledge model.

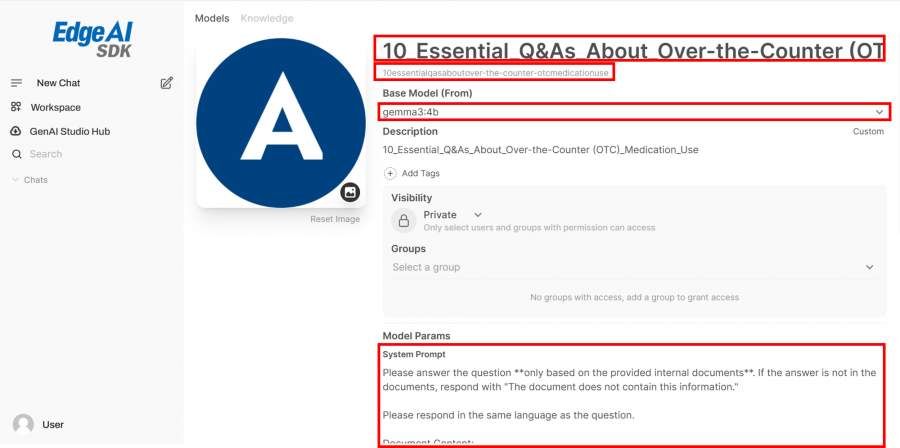

- On the add new model page, fill in and select the required fields shown in the red box: Title, Subtitle, Base Model, and System Prompt.

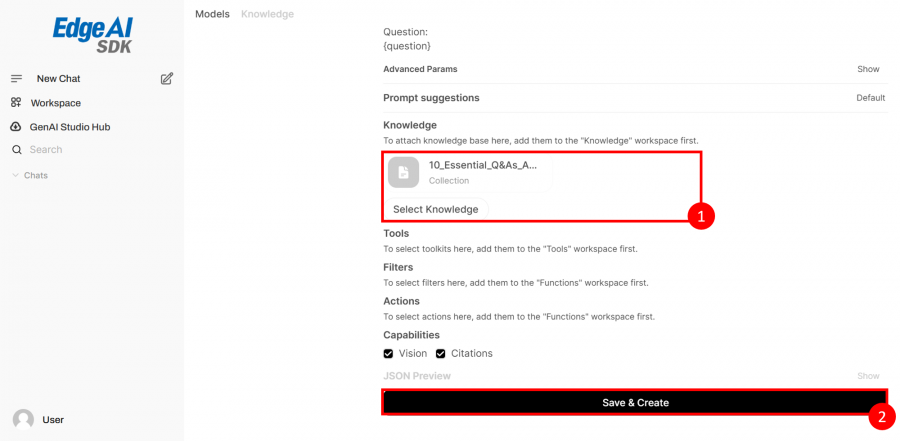

- Continuing from the previous page, click on icon 1 to select the Knowledge you just created, then click on icon 2 to save and create the model.

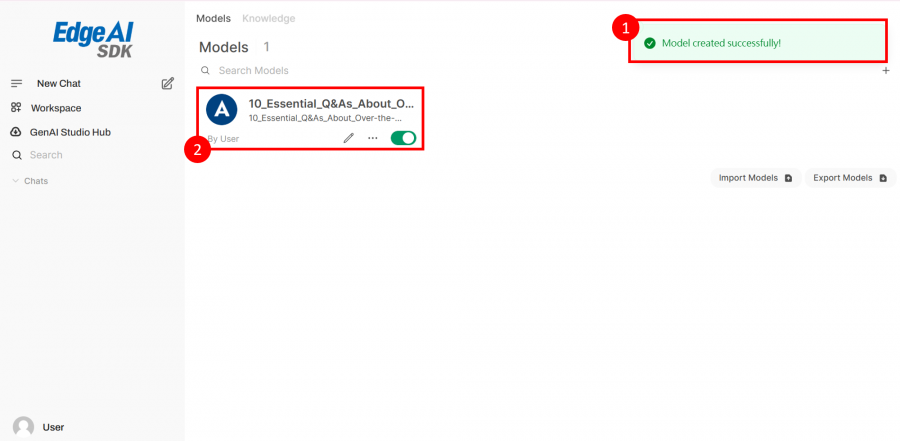

- After successful creation, a notification will appear at icon 1. Then, click on the model at icon 2 to enter the model chat.

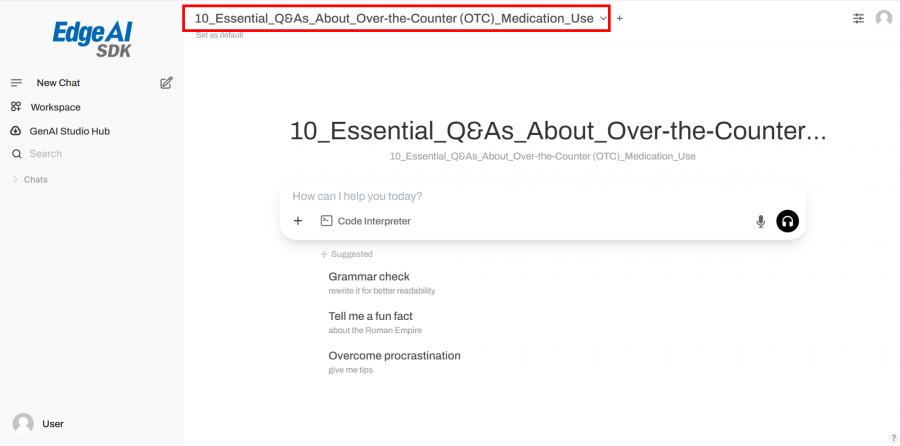

- The chat window will display that the model in use is the Knowledge model you created.

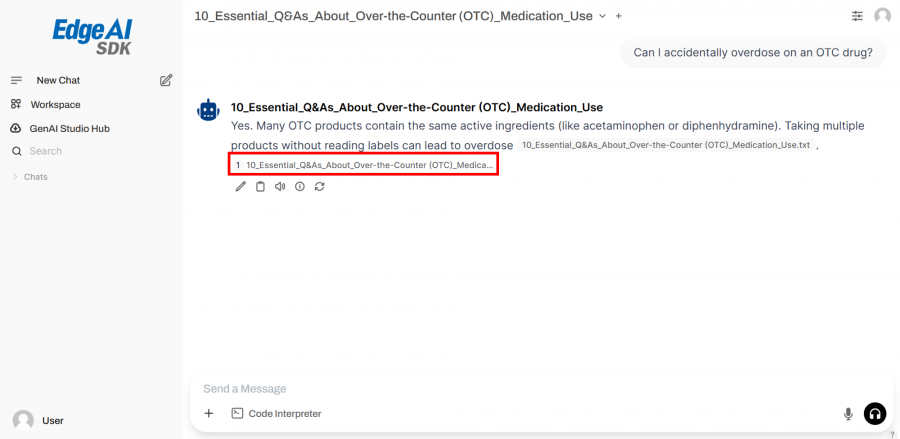

- After starting the conversation, you will see that the model retrieves information from the Knowledge you created in its responses.

Configuring TTS with Azure AI Speech API

1. Create an Azure Account for the AI Speech API

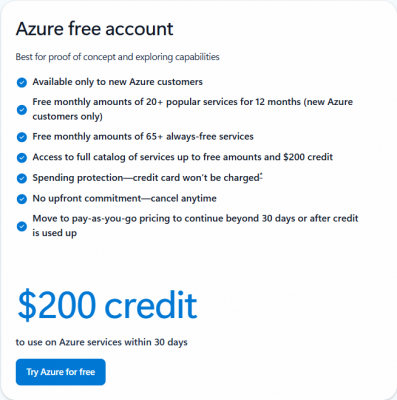

- Get started with Azure’s free account: new users receive $200 credit for 30 days and free access to popular services.

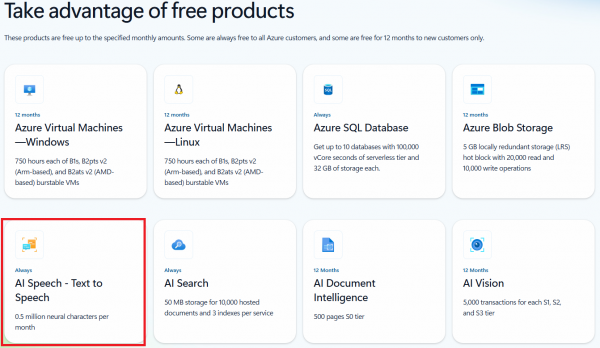

- AI Speech – Text-to-Speech: 500,000 neural characters per month for free accounts

2. Set Up the Azure Speech Service

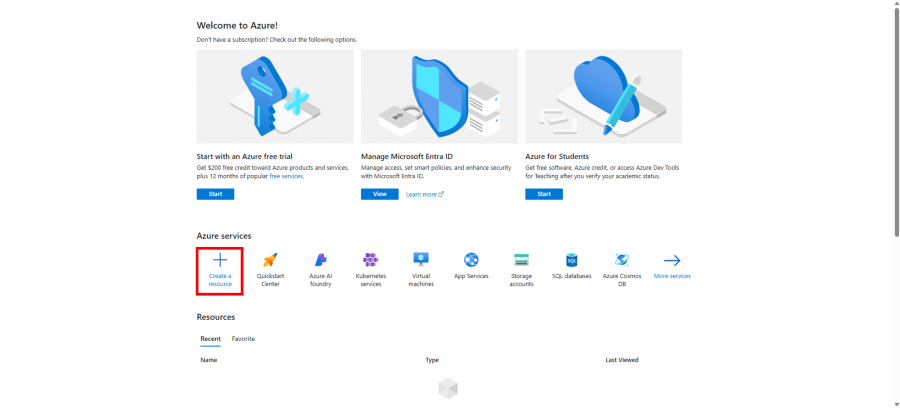

- In the Azure Portal, click on "Create a resource".

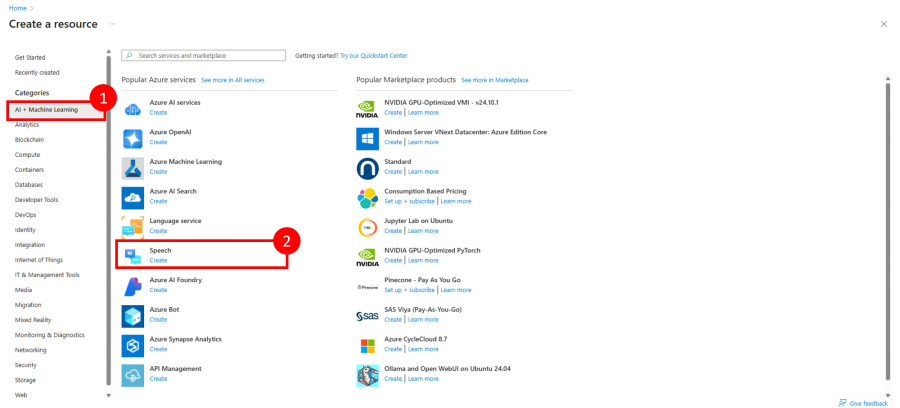

- Click on icon 1: "AI + Machine Learning"

- Then, click on icon 2: "Speech"

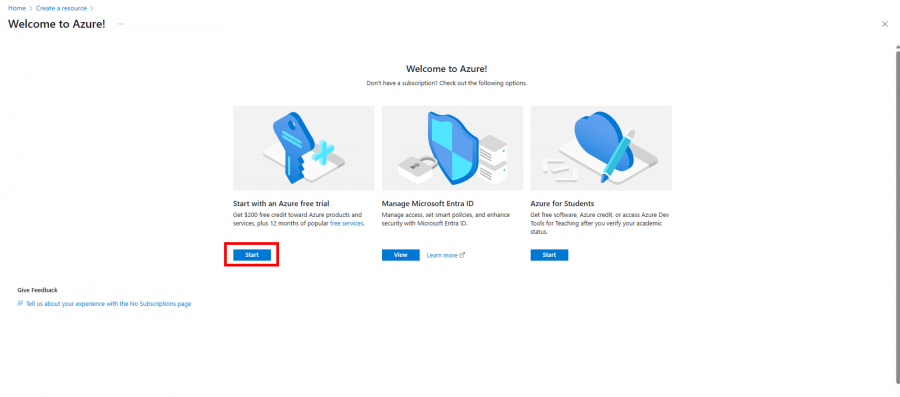

- Click on “Start” at the position marked by the red box.

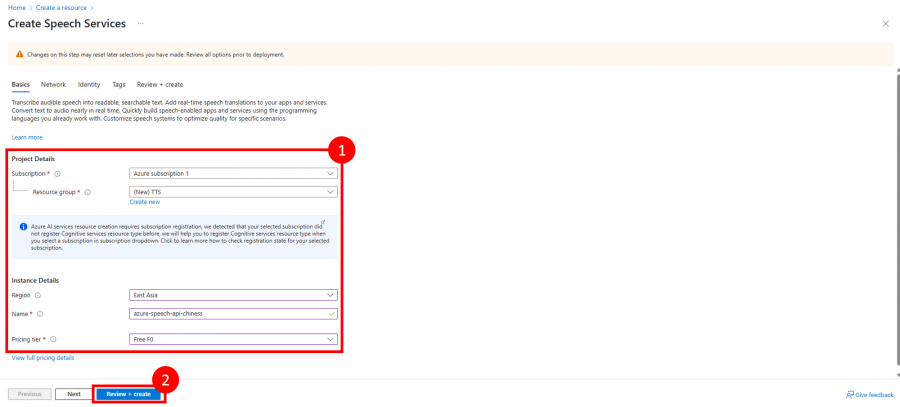

- Click "Create" and fill in the necessary details:

- Subscription: Choose your Azure subscription.

- Resource Group: Select an existing group or create a new one.

- Region: Choose a region close to your location.

- Name: Provide a unique name for your Speech resource.

- Pricing Tier: Select Free F0.

- Click on icon 2: "Review + create"

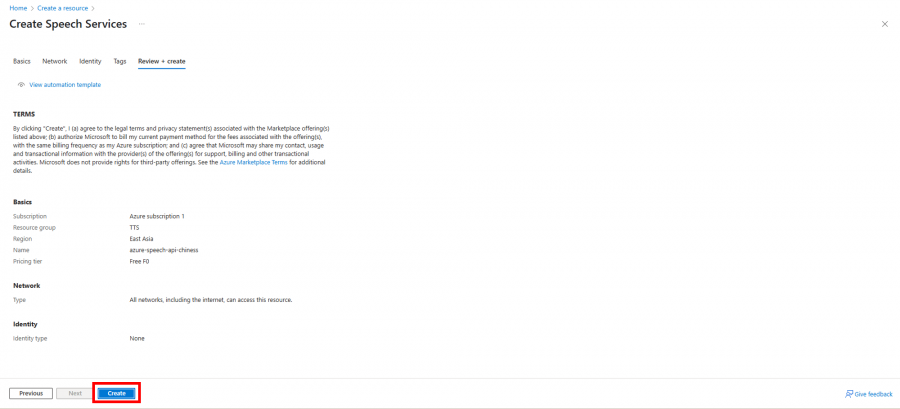

- After confirming the information, click the “Create” button.

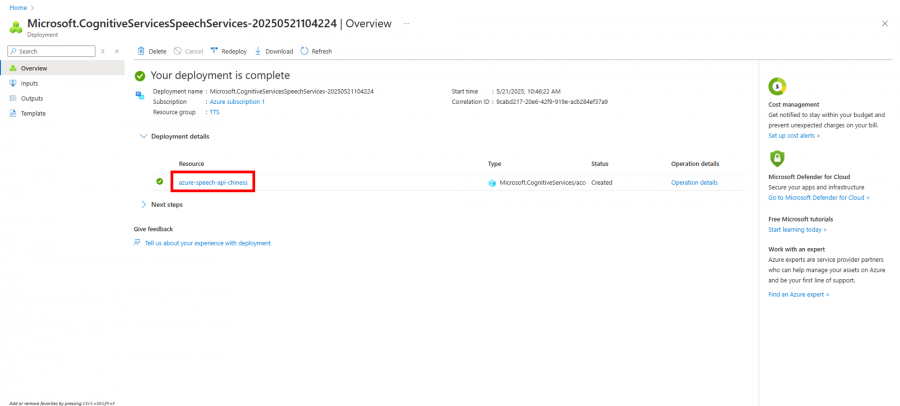

- After entering the overview page, click on the name link of the resource you created under "Resource."

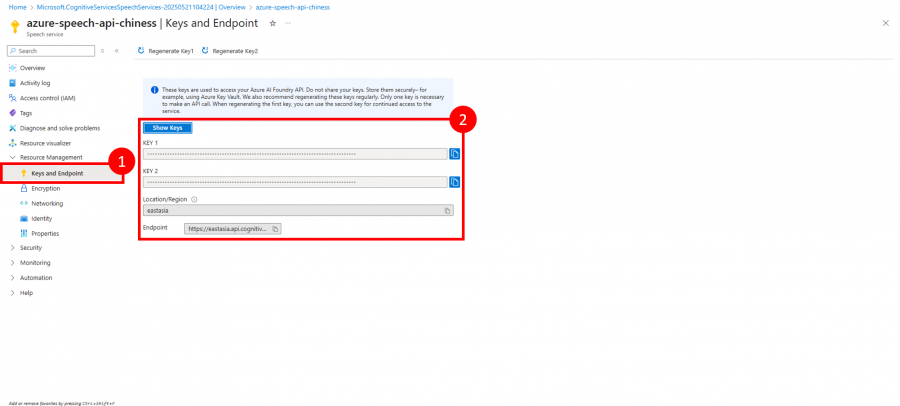

- In the left-hand menu, click on "Keys and Endpoint".

- Note down the Key1 or Key2 and the Endpoint URL; you'll need these to authenticate your API requests.
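Before entering the key in the chatbot, it can be useful to check that it works. The sketch below builds a request for the Azure text-to-speech REST endpoint using only the Python standard library. It is an illustration under stated assumptions: the region string (for example `eastus`) must match the region chosen when creating the resource, `en-US-JennyNeural` is just one example voice, and the function names and the `User-Agent` value are made up for this example.

```python
import urllib.request
from xml.sax.saxutils import escape


def build_tts_request(region: str, key: str, text: str,
                      voice: str = "en-US-JennyNeural") -> tuple[str, dict, bytes]:
    """Return (url, headers, body) for the Azure text-to-speech REST endpoint."""
    url = f"https://{region}.tts.speech.microsoft.com/cognitiveservices/v1"
    headers = {
        "Ocp-Apim-Subscription-Key": key,  # Key1 or Key2 from the portal
        "Content-Type": "application/ssml+xml",
        "X-Microsoft-OutputFormat": "riff-24khz-16bit-mono-pcm",
        "User-Agent": "genai-chatbot-tts-check",  # arbitrary client name
    }
    ssml = (
        "<speak version='1.0' xml:lang='en-US'>"
        f"<voice name='{voice}'>{escape(text)}</voice>"
        "</speak>"
    )
    return url, headers, ssml.encode("utf-8")


def synthesize_to_file(region: str, key: str, text: str,
                       out_path: str = "tts_check.wav") -> int:
    """Send the request and save the returned audio; returns the byte count."""
    url, headers, body = build_tts_request(region, key, text)
    request = urllib.request.Request(url, data=body, headers=headers, method="POST")
    with urllib.request.urlopen(request, timeout=30) as response:
        audio = response.read()
    with open(out_path, "wb") as audio_file:
        audio_file.write(audio)
    return len(audio)
```

Calling `synthesize_to_file("<region>", "<your-key>", "Hello")` should write a WAV file if the key and region are valid. An invalid key typically results in an HTTP 401 error.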

3. Set Up Azure AI Speech in GenAI Chatbot

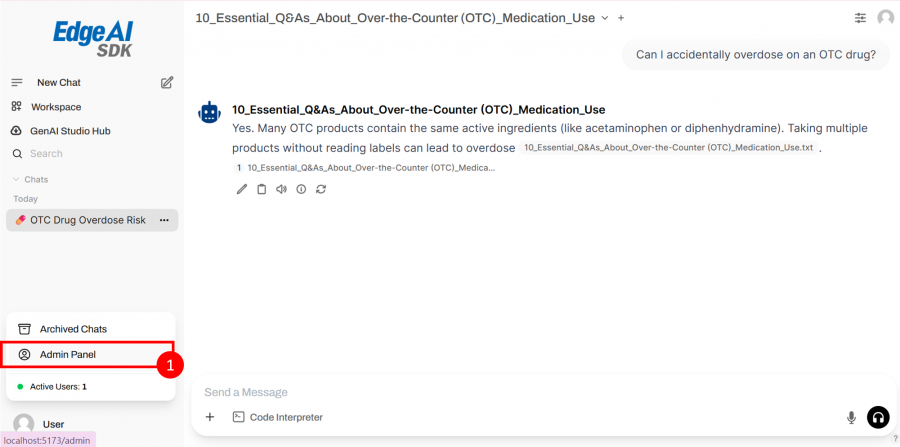

- Select icon 1 and click on "Admin Panel."

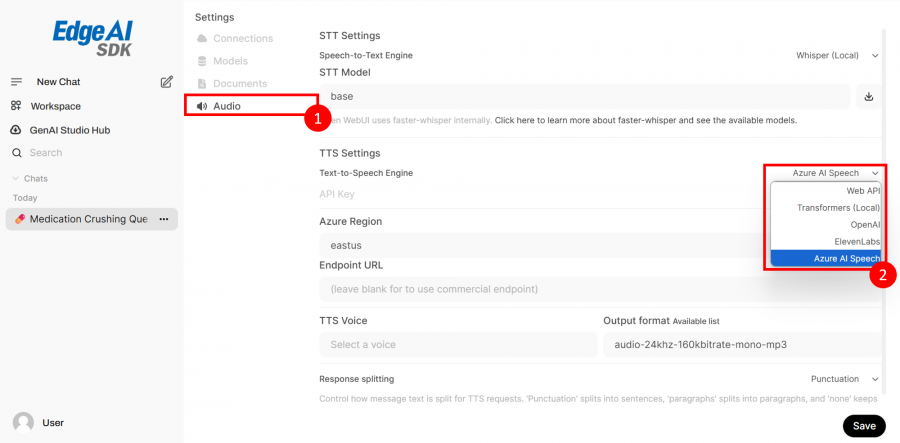

- Find the TTS Settings section and select the Engine you want to use, as shown at icon 2: "Azure AI Speech"

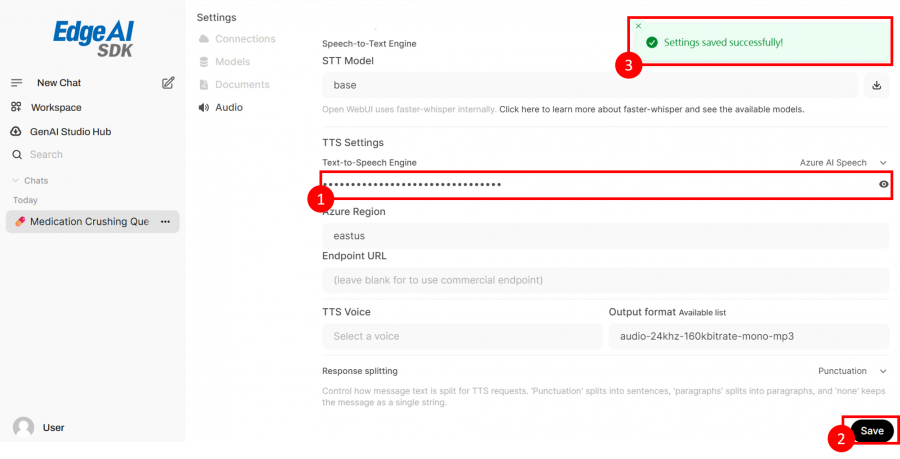

- Enter the Azure AI Speech API key (the Key1 or Key2 value noted earlier) at icon 1.

- Click on icon 2, "Save."

- Finally, you will see a success notification at icon 3.

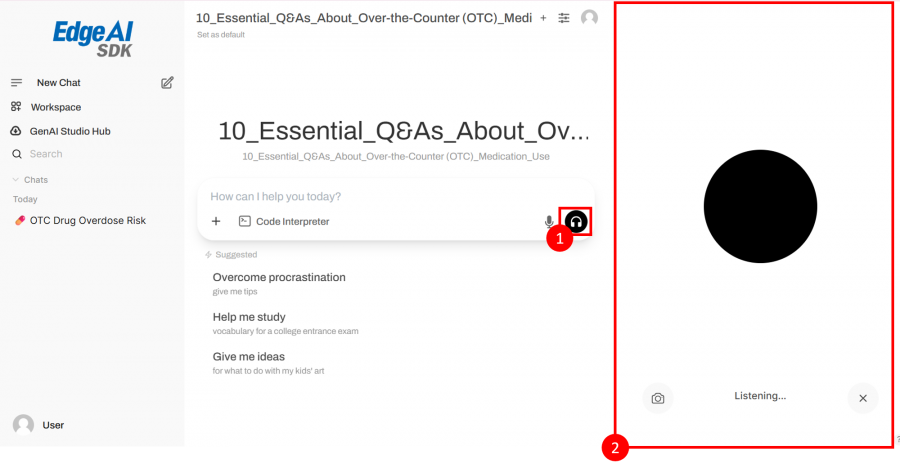

- Go to the chat window and click on icon 1, the conversation icon.

- An icon will appear at the position of icon 2, indicating that the feature is ready to use.

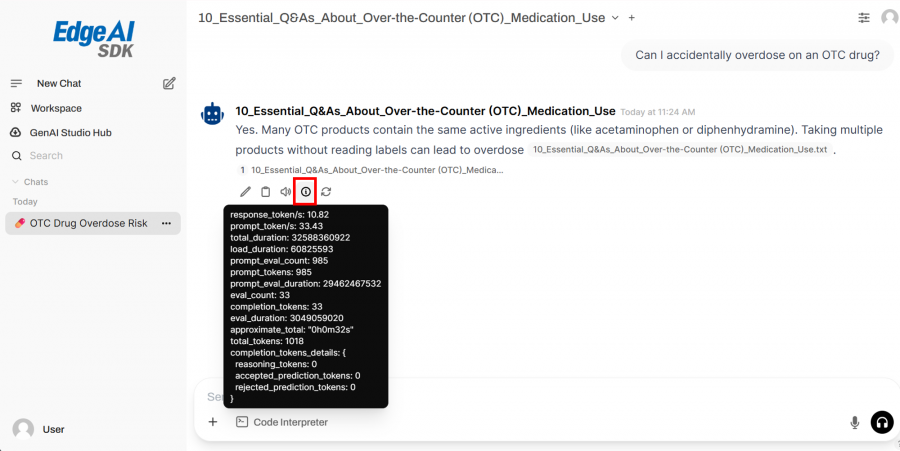

Evaluate the Benchmark of Each Chatbot Response

- An information button is provided next to each response. Clicking it reveals detailed performance and inference statistics for that response, including token counts, processing speed, and computation time. This allows developers to monitor and optimize system performance in real time.
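Statistics of this kind can be derived from the timing fields that Ollama returns with each response, where durations are reported in nanoseconds. The sketch below shows the arithmetic. Whether the information button uses exactly these fields is an assumption, the function name `summarize_stats` is made up, and the sample values are invented for illustration rather than measured.

```python
def summarize_stats(reply: dict) -> dict:
    """Derive token counts and speeds from an Ollama response dictionary."""
    ns_per_s = 1e9  # Ollama reports durations in nanoseconds
    prompt_tokens = reply.get("prompt_eval_count", 0)
    output_tokens = reply.get("eval_count", 0)
    prompt_s = reply.get("prompt_eval_duration", 0) / ns_per_s
    output_s = reply.get("eval_duration", 0) / ns_per_s
    return {
        "prompt_tokens": prompt_tokens,
        "output_tokens": output_tokens,
        "prompt_tokens_per_s": prompt_tokens / prompt_s if prompt_s else 0.0,
        "output_tokens_per_s": output_tokens / output_s if output_s else 0.0,
        "total_s": reply.get("total_duration", 0) / ns_per_s,
    }


# Invented numbers for illustration only, not a measurement.
sample_reply = {
    "prompt_eval_count": 20,
    "prompt_eval_duration": 500_000_000,
    "eval_count": 100,
    "eval_duration": 4_000_000_000,
    "total_duration": 5_000_000_000,
}
print(summarize_stats(sample_reply))
```

Output tokens per second is usually the figure to watch when comparing models or quantization levels on the same device.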

Example

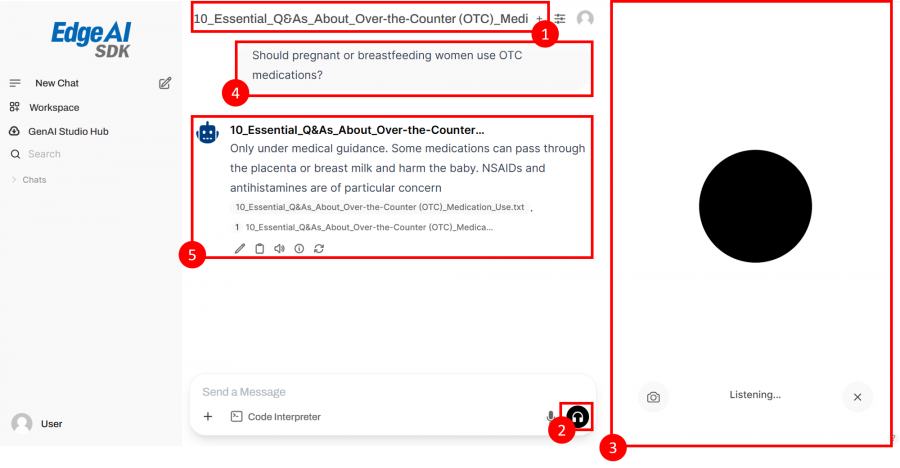

Creating an Audio + RAG Chatbot as a Medication Assistant

1. Configuring TTS with Azure AI Speech API

2. Prepare files for RAG in PDF or TXT:

3. Create a new chatbot assistant with RAG

1. Click Workspace in the left menu.

2. Go to Knowledge.

3. Click the + icon to add new Knowledge.

4. The page will automatically switch back to the Knowledge section. After creation is complete, a notification will appear.

5. After the Knowledge is created, click on icon 1 to go to the Models page.

6. Click on icon 2, the "+" icon, to add a new knowledge model.

7. On the add new model page, fill in and select the required fields shown in the red box: Title, Subtitle, Base Model, and System Prompt.

8. Continuing from the previous page, click on icon 1 to select the Knowledge you just created, then click on icon 2 to save and create the model.

9. After successful creation, a notification will appear at icon 1. Then, click on the model at icon 2 to enter the model chat.

The icon numbers in these steps refer to the screenshots in "Create a new chatbot assistant with RAG" above.