Advantech Robotic Suite/OpenVINO


Introduction

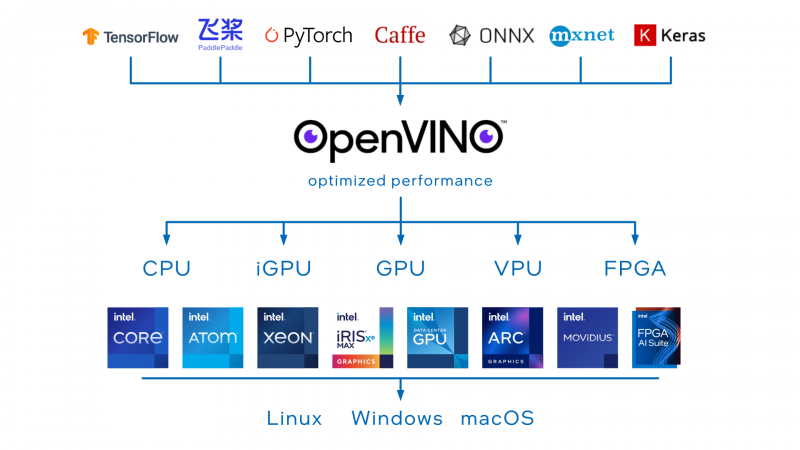

Intel OpenVINO is an open-source toolkit for optimizing and deploying deep learning models. It improves deep learning inference performance for vision, audio, and language models from popular frameworks such as TensorFlow and PyTorch.

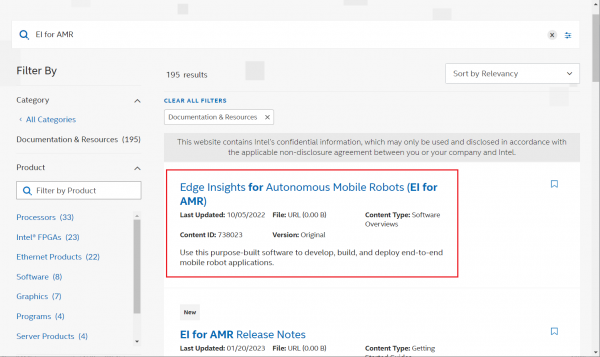

Intel publishes OpenVINO as a container in Edge Insights for Autonomous Mobile Robots (EI for AMR). EI for AMR is a purpose-built, open, and modular software development kit, delivered as containers, for developing, building, and deploying end-to-end mobile robot applications. It includes libraries, middleware, and sample applications based on the open-source Robot Operating System 2 (ROS 2).

Users can download and install OpenVINO from the EI for AMR portal: https://www.intel.com/content/www/us/en/developer/topic-technology/edge-5g/edge-solutions/autonomous-mobile-robots.html

Featured Components

- Intel® Distribution of OpenVINO™ Toolkit

- Intel® oneAPI Base Toolkit

- Intel® RealSense™ SDK 2.0

- Algorithms of FastMap for 3D mapping

- ROS 2 Sample Applications

Benefits

- Enables code to be implemented once and deployed to multiple hardware configurations.

- Accelerates deployment of customer ROS 2-based applications by reducing evaluation and development time.

- Provides prevalidated, scalable EI for AMR platforms through development partners.

Architecture

OpenVINO enables you to optimize a deep learning model from almost any framework and deploy it with best-in-class performance on a range of Intel processors and other hardware platforms.

Supported Platforms

EI for AMR supports 10th generation and newer Intel CPUs and GPUs. The following Advantech devices have been tested with Intel Edge Software Device Qualification (ESDQ):

| Device | CPU Type |
|---|---|
| MIO-5375 | Intel Core i5-1145G7E |
| ARK-1250L | Intel Core i5-1145G7E |
| EI-52 | Intel Core i5-1145G7E |
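To check whether another host meets the 10th-generation requirement, you can usually read the generation from the model number. The sketch below is a heuristic of our own and is not an Intel tool. It assumes the classic `Core iN-NNNN` naming; for example, `i5-1145G7E` is 11th generation. It does not handle other product lines such as Core Ultra, Celeron, or Atom.

```shell
#!/bin/sh
# Hypothetical heuristic (not part of EI for AMR): print the Intel Core
# generation encoded in a model string such as
# "Intel(R) Core(TM) i5-1145G7E @ 2.60GHz". Returns 1 if no "iN-NNNN" is found.
core_generation() {
    # Keep only the digits that follow "i3-", "i5-", "i7-" or "i9-".
    digits=$(printf '%s\n' "$1" | sed -n 's/.*i[3579]-\([0-9][0-9]*\).*/\1/p')
    [ -n "$digits" ] || return 1
    case "$digits" in
        # 10th gen and newer (1035, 1145, 10700, 12900): first two digits.
        1[0-9][0-9][0-9]*) echo "${digits%"${digits#??}"}" ;;
        # 2nd to 9th gen (e.g. 8700): first digit.
        [2-9][0-9][0-9][0-9]) echo "${digits%"${digits#?}"}" ;;
        # Three-digit models (e.g. 920) are first generation.
        *) echo 1 ;;
    esac
}
```

On the target machine, you could run `core_generation "$(grep -m1 'model name' /proc/cpuinfo)"` and compare the result with 10.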

Download & Installation

To download and install the OpenVINO container, sign up for and log in to the Intel® Developer Zone, then follow the Get Started Guide: https://www.intel.com/content/www/us/en/docs/ei-for-amr/get-started-guide-robot-kit/2022-3/overview.html

Run Sample

In this section, we set up the EI for AMR environment variables and run Docker Compose YAML files that launch ROS 2 sample applications inside the EI for AMR Docker container.
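The commands in this section use the standalone `docker-compose` binary (Compose V1). Hosts that only have the newer Compose V2 plugin invoke it as `docker compose`, with a space. The helper below is a hypothetical sketch that reports which one is installed. It assumes the EI for AMR 2022.3 YAML files work unchanged under Compose V2, which this guide does not state.

```shell
#!/bin/sh
# Hypothetical helper: print the Compose command available on this host,
# preferring the standalone V1 binary used in this guide.
pick_compose() {
    if command -v docker-compose >/dev/null 2>&1; then
        echo "docker-compose"
    elif docker compose version >/dev/null 2>&1; then
        echo "docker compose"   # Compose V2 CLI plugin
    else
        echo "Docker Compose not found" >&2
        return 1
    fi
}
```

For example, set `COMPOSE=$(pick_compose)` and then run `CHOOSE_USER=eiforamr $COMPOSE -f 01_docker_sdk_env/docker_compose/05_tutorials/turtlesim.tutorial.yml up`. Leave `$COMPOSE` unquoted so that `docker compose` splits into two words.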

Set up environment variables

- Go to the AMR_containers folder:

```shell
cd Edge_Insights_for_Autonomous_Mobile_Robots_2022.3/AMR_containers/
```

- Prepare the environment setup:

```shell
source ./01_docker_sdk_env/docker_compose/common/docker_compose.source
export CONTAINER_BASE_PATH="$(pwd)"
export ROS_DOMAIN_ID=12
```
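ROS 2 nodes in different containers and terminals only discover each other when they use the same `ROS_DOMAIN_ID`. The sketch below is a hypothetical helper, not part of EI for AMR. It checks the variables exported in the setup step before you start a tutorial. The 0 to 101 range follows the ROS 2 documentation's advice for domain IDs that are safe on all platforms. Treat it as a conservative default rather than a hard limit.

```shell
#!/bin/sh
# Hypothetical pre-flight check for the variables exported during setup.
# Prints a message and returns 1 on the first problem found.
check_amr_env() {
    if [ -z "${CONTAINER_BASE_PATH:-}" ] || [ ! -d "$CONTAINER_BASE_PATH" ]; then
        echo "CONTAINER_BASE_PATH is unset or not a directory" >&2
        return 1
    fi
    case "${ROS_DOMAIN_ID:-}" in
        ''|*[!0-9]*)
            echo "ROS_DOMAIN_ID must be a non-negative integer" >&2
            return 1 ;;
    esac
    # ROS 2 docs recommend 0..101 so the DDS ports derived from the ID stay valid.
    if [ "$ROS_DOMAIN_ID" -gt 101 ]; then
        echo "ROS_DOMAIN_ID=$ROS_DOMAIN_ID is above the recommended 0..101 range" >&2
        return 1
    fi
}
```

Run `check_amr_env` in the same shell after the `source` and `export` commands. It succeeds silently when the setup looks correct.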

Turtlesim Tutorial

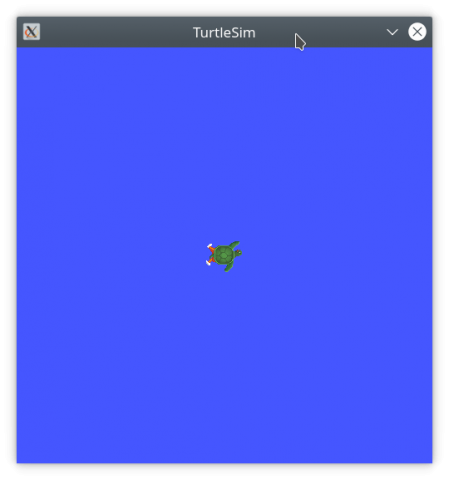

Turtlesim is a tool for learning ROS and ROS packages. The steps below walk you through the tutorial.

Step 1. Run docker-compose to launch the tutorial

To start turtlesim.tutorial:

```shell
CHOOSE_USER=eiforamr docker-compose -f 01_docker_sdk_env/docker_compose/05_tutorials/turtlesim.tutorial.yml up
```

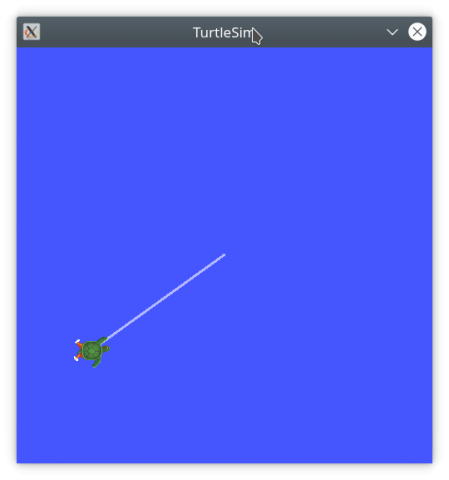

Turtlesim opens a window that shows the turtle at its initial location.

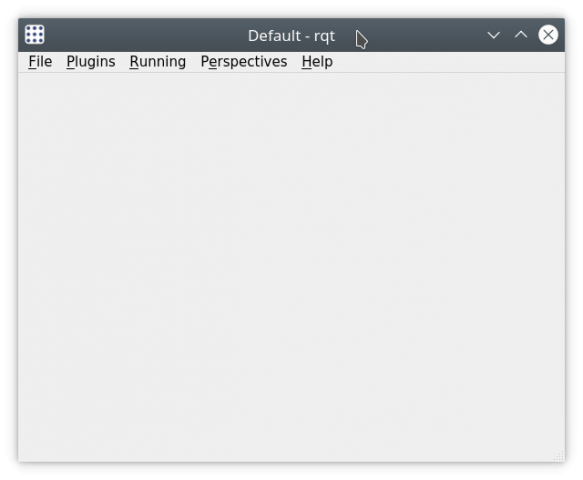

rqt also starts, so you can control the turtle's location.

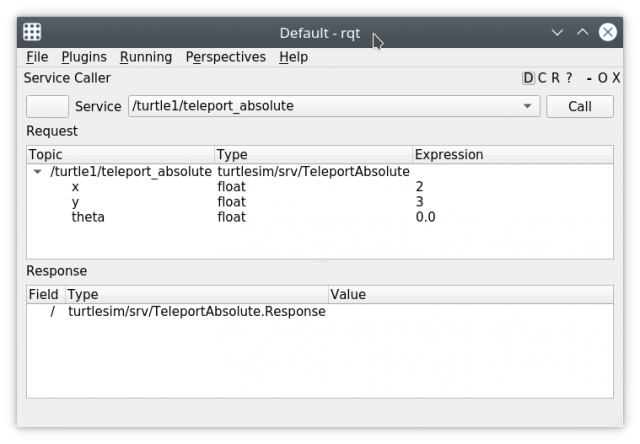

Step 2. Control the turtle's location

You can now call a service to change the location of turtle1:

1. From rqt menu, go to “Plugins” > “Services” > “Service Caller”

2. To move turtle1, select “/turtle1/teleport_absolute” from the Service drop-down list.

3. Change the x and y coordinates from their original values.

4. Press “Call”. The turtle should move.

turtle1 moves to the new coordinates you entered.
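You can also call the same service without rqt by using the `ros2 service call` command-line tool. The request type is `turtlesim/srv/TeleportAbsolute`, with the fields `x`, `y`, and `theta`. The helpers below are a hypothetical sketch. They build the request string and reject coordinates outside turtlesim's window, which spans roughly 0 to 11.08 in both x and y (the turtle starts near 5.54, 5.54). This assumes you run the resulting command in a shell where ROS 2 is sourced and `ROS_DOMAIN_ID` matches the tutorial, for example inside the tutorial container.

```shell
#!/bin/sh
# Hypothetical helpers: build the YAML request for /turtle1/teleport_absolute.
# x and y are limited to turtlesim's visible area (about 0..11.08);
# theta is the heading in radians and defaults to 0.

is_coord() {
    case "$1" in
        ''|.|*[!0-9.]*|*.*.*) return 1 ;;   # only plain non-negative decimals
    esac
    awk -v v="$1" 'BEGIN { exit !(v + 0 <= 11.08) }'
}

teleport_request() {
    if ! is_coord "$1" || ! is_coord "$2"; then
        echo "x and y must be numbers between 0 and 11.08" >&2
        return 1
    fi
    # %.2f always prints a decimal point, matching the float32 request fields.
    awk -v x="$1" -v y="$2" -v t="${3:-0}" \
        'BEGIN { printf "{x: %.2f, y: %.2f, theta: %.2f}\n", x, y, t }'
}
```

Usage: `ros2 service call /turtle1/teleport_absolute turtlesim/srv/TeleportAbsolute "$(teleport_request 2 3)"` sends the request `{x: 2.00, y: 3.00, theta: 0.00}`.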

To close the tutorial, do one of the following:

1. Press Ctrl+C in the terminal where you ran the `up` command.

2. Close the rqt window.

3. Run this command in another terminal:

```shell
CHOOSE_USER=eiforamr docker-compose -f 01_docker_sdk_env/docker_compose/05_tutorials/turtlesim.tutorial.yml down
```
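If you start tutorials from a script, you can make the `down` step automatic. The function below is our own sketch and is not part of EI for AMR. It runs `up` in the foreground and then always runs `down` with the same file and user, including when you stop `up` with Ctrl+C. It assumes the script runs from the `AMR_containers` folder after the environment setup step. `COMPOSE`, `TUTORIAL_DIR`, and `run_tutorial` are illustrative names.

```shell
#!/bin/sh
# Hypothetical wrapper: run a tutorial with "up", then always clean up with "down".
# COMPOSE can be overridden, e.g. COMPOSE="docker compose" for Compose V2.
COMPOSE="${COMPOSE:-docker-compose}"
TUTORIAL_DIR="01_docker_sdk_env/docker_compose/05_tutorials"

run_tutorial() {
    name="$1"                 # e.g. turtlesim.tutorial
    user="${2:-eiforamr}"     # value passed as CHOOSE_USER
    file="$TUTORIAL_DIR/$name.yml"

    # A no-op INT trap keeps this shell alive on Ctrl+C. Trapped signals are
    # reset to the default in child processes, so "up" still stops normally.
    trap ':' INT
    CHOOSE_USER="$user" $COMPOSE -f "$file" up
    status=$?
    trap - INT

    # Stop and remove the tutorial containers, then report the "up" status.
    CHOOSE_USER="$user" $COMPOSE -f "$file" down
    return "$status"
}
```

For example, `run_tutorial turtlesim.tutorial` behaves like the `up` command in Step 1 followed by the `down` command above.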

Object Detection Tutorial

This tutorial shows how to run object detection with the OpenVINO Inference Engine on a pretrained network that uses the Single Shot MultiBox Detector (SSD) method.

Step 1. Run docker-compose to launch the tutorial

To start openvino_GPU.tutorial:

```shell
CHOOSE_USER=root docker-compose -f 01_docker_sdk_env/docker_compose/05_tutorials/openvino_GPU.tutorial.yml up
```
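The `openvino_GPU` tutorial targets the Intel GPU, which Linux exposes to user space as a DRM render node under `/dev/dri` (typically `renderD128`). A quick host-side check like the sketch below can save you a confusing failure inside the container. This is a hypothetical helper. It only checks that a render node exists on the host. It does not check that the GPU driver works or that the container is granted access to the device.

```shell
#!/bin/sh
# Hypothetical pre-check: succeed if at least one DRM render node exists.
# The directory defaults to /dev/dri; pass another path to override it.
has_render_node() {
    dir="${1:-/dev/dri}"
    for node in "$dir"/renderD*; do
        # If nothing matches, the glob stays literal and -e fails.
        [ -e "$node" ] && return 0
    done
    echo "no GPU render node under $dir" >&2
    return 1
}
```

For example, `has_render_node && CHOOSE_USER=root docker-compose -f 01_docker_sdk_env/docker_compose/05_tutorials/openvino_GPU.tutorial.yml up` only starts the tutorial when a render node is present.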

Advanced Features

Intel provides many samples to help you understand EI for AMR. To learn about its advanced features, follow the step-by-step walkthroughs in Intel's online EI for AMR Developer Guide:

https://www.intel.com/content/www/us/en/docs/ei-for-amr/developer-guide/2022-3-1/overview.html
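Both tutorials in this guide start from YAML files in `01_docker_sdk_env/docker_compose/05_tutorials/`. Assuming the other samples in that folder follow the same `*.tutorial.yml` naming, you can list what is available with a short helper. `list_tutorials` is hypothetical and not part of EI for AMR.

```shell
#!/bin/sh
# Hypothetical helper: print tutorial names (file names without .yml) found in
# a folder; each can be started with: docker-compose -f <dir>/<name>.yml up
list_tutorials() {
    dir="${1:-01_docker_sdk_env/docker_compose/05_tutorials}"
    found=1
    for f in "$dir"/*.tutorial.yml; do
        [ -e "$f" ] || continue   # no match: the glob stays literal
        name=${f##*/}             # strip the directory part
        echo "${name%.yml}"       # strip the .yml extension
        found=0
    done
    return "$found"
}
```

Run it from the `AMR_containers` folder. The two tutorials above use different `CHOOSE_USER` values (`eiforamr` and `root`), so check the Developer Guide for the value each of the other samples expects.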